The post The Need for Real-Time Device Tracking appeared first on ScaleOut Software.

Real-Time Device Tracking with In-Memory Computing Can Fill an Important Gap in Today’s Streaming Analytics Platforms

We are increasingly surrounded by intelligent IoT devices, which have become an essential part of our lives and an integral component of business and industrial infrastructures. Smart watches report biometrics like blood pressure and heart rate; sensor hubs on long-haul trucks and delivery vehicles report telemetry about location, engine and cargo health, and driver behavior; sensors in smart cities report traffic flow and unusual sounds; card-key access devices track entries and exits within businesses and factories; cyber agents probe for unusual behavior in large network infrastructures. The list goes on.

The Limitations of Today’s Streaming Analytics

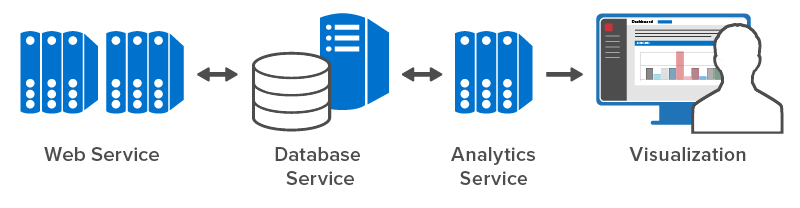

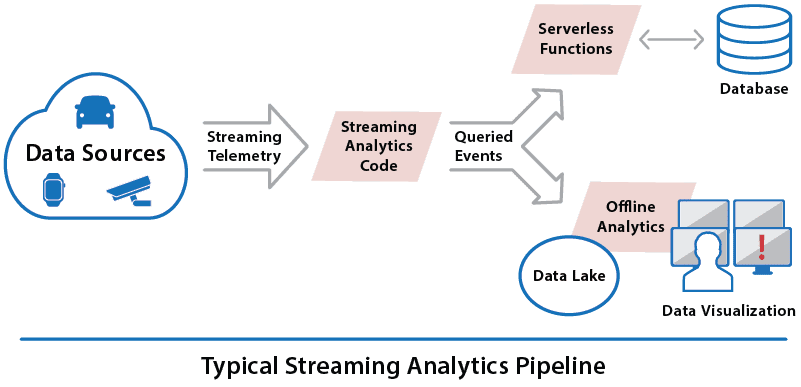

How are we managing the torrent of telemetry that flows into analytics systems from these devices? Today’s streaming analytics architectures are not equipped to make sense of this rapidly changing information and react to it as it arrives. The best they can usually do in real-time using general purpose tools is to filter and look for patterns of interest. The heavy lifting is deferred to the back office. The following diagram illustrates a typical workflow. Incoming data is saved into data storage (historian database or log store) for query by operational managers who must attempt to find the highest priority issues that require their attention. This data is also periodically uploaded to a data lake for offline batch analysis that calculates key statistics and looks for big trends that can help optimize operations.

![]()

What’s missing in this picture? This architecture does not apply computing resources to track the myriad data sources sending telemetry and continuously look for issues and opportunities that need immediate responses. For example, if a health tracking device indicates that a specific person with a known health condition and medications is likely to have an impending medical issue, this person needs to be alerted within seconds. If temperature-sensitive cargo in a long-haul truck is about to be impacted by a refrigeration system with known erratic behavior and a repair history, the driver needs to be informed immediately. If a cyber network agent has observed an unusual pattern of failed login attempts, it needs to alert downstream network nodes (servers and routers) to block the kill chain in a potential attack.

A New Approach: Real-Time Device Tracking

To address these challenges and countless others like them, we need autonomous, deep introspection on incoming data as it arrives and immediate responses. The technology that can do this is called in-memory computing. What makes in-memory computing unique and powerful is its two-fold ability to host fast-changing data in memory and run analytics code within a few milliseconds after new data arrives. It can do this simultaneously for millions of devices. Unlike manual or automatic log queries, in-memory computing can continuously run analytics code on all incoming data and instantly find issues. And it can maintain contextual information about every data source (like the medical history of a device wearer or the maintenance history of a refrigeration system) and keep it immediately at hand to enhance the analysis. While offline, big data analytics can provide deep introspection, they produce answers in minutes or hours instead of milliseconds, so they can’t match the timeliness of in-memory computing on live data.

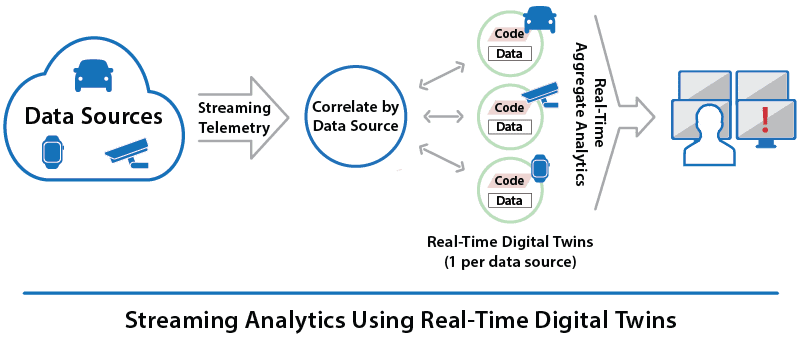

The following diagram illustrates the addition of real-time device tracking with in-memory computing to a conventional analytics system. Note that it runs alongside existing components. It adds the ability to continuously examine incoming telemetry and generate both feedback to the data sources (usually, devices) and alerts for personnel in milliseconds:

![]()

In-Memory Computing with Real-Time Digital Twins

Let’s take a closer look at today’s conventional streaming analytics architectures, which can be hosted in the cloud or on-premises. As shown in the following diagram, a typical analytics system receives messages from a message hub, such as Kafka, which buffers incoming messages from the data sources until they can be processed. Most analytics systems have event dashboards and perform rudimentary real-time processing, which may include filtering an aggregated incoming message stream and extracting patterns of interest. These real-time components then deliver messages to data storage, which can include a historian database for logging and query and a data lake for offline, batch processing using big data tools such as Spark:

![]()

Conventional streaming analytics systems run either manual queries or automated, log-based queries to identify actionable events. Since big data analyses can take minutes or hours to run, they are typically used to look for big trends, like the fuel efficiency and on-time delivery rate of a trucking fleet, instead of emerging issues that need immediate attention. These limitations create an opportunity for real-time device tracking to fill the gap.

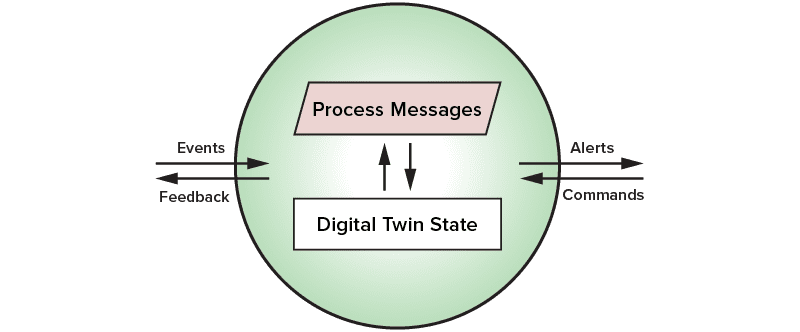

As shown in the following diagram, an in-memory computing system performing real-time device tracking can run alongside the other components of a conventional streaming analytics solution and provide autonomous introspection of the data streams from each device. Hosted on a cluster of physical or virtual servers, it maintains memory-based state information about the history and dynamically evolving state of every data source. As messages flow in, the in-memory compute cluster examines and analyzes them separately for each data source using application-defined analytics code. This code makes use of the device’s state information to help identify emerging issues and trigger alerts or feedback to the device. In-memory computing has the speed and scalability needed to generate responses within milliseconds, and it can evaluate and report aggregate trends every few seconds.

![]()

Because in-memory computing can store contextual data and process messages separately for each data source, it can organize application code using a software-based digital twin for each device, as illustrated in the diagram above. Instead of using the digital twin concept to model the inner workings of the device, a real-time digital twin tracks the device’s evolving state coupled with its parameters and history to detect and predict issues needing immediate attention. This provides an object-oriented mechanism that simplifies the construction of real-time application code that needs to evaluate incoming messages in the context of the device’s dynamic state. For example, it enables a medical application to determine the importance of a change in heart rate for a device wearer based on the individual’s current activity, age, medications, and medical history.
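To make the idea concrete, here is a minimal Python sketch of a real-time digital twin for the heart-rate example. It is not ScaleOut's API: the class name `HeartRateTwin`, the `dispatch` helper, the message fields, and the thresholds (including the rule-of-thumb `220 - age` ceiling) are all assumptions chosen for illustration. The sketch shows only the shape of the approach: one object per device, holding context and recent history, with a single method that evaluates each incoming message against that state.

```python
from dataclasses import dataclass, field


@dataclass
class HeartRateTwin:
    """Hypothetical per-wearer twin: context plus a short telemetry history."""
    device_id: str
    age: int
    on_beta_blockers: bool = False   # illustrative piece of medical context
    activity: str = "resting"        # latest reported activity
    recent_bpm: list = field(default_factory=list)

    def max_expected_bpm(self) -> float:
        # Rule-of-thumb ceiling (220 - age), scaled by activity; illustration only.
        ceiling = 220 - self.age
        fraction = 0.9 if self.activity == "exercising" else 0.6
        if self.on_beta_blockers:
            fraction -= 0.1
        return ceiling * fraction

    def process_message(self, message: dict):
        """Update state from one message; return an alert string or None."""
        self.activity = message.get("activity", self.activity)
        self.recent_bpm = (self.recent_bpm + [message["bpm"]])[-5:]
        limit = self.max_expected_bpm()
        # Alert only on three consecutive high readings, not on one noisy sample.
        last_three = self.recent_bpm[-3:]
        if len(last_three) == 3 and all(bpm > limit for bpm in last_three):
            return (f"{self.device_id}: heart rate above {limit:.0f} bpm "
                    f"while {self.activity}")
        return None


twins = {}  # one twin instance per data source, keyed by device id


def dispatch(message: dict, profiles: dict):
    """Route a message to the twin for its device, creating it on first use."""
    device_id = message["device_id"]
    if device_id not in twins:
        twins[device_id] = HeartRateTwin(device_id, **profiles[device_id])
    return twins[device_id].process_message(message)
```

In this sketch the same reading of 120 bpm raises an alert for a resting 60-year-old after three consecutive messages but not for the same person while exercising, which is the kind of context-dependent judgment the twin's state makes possible.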

Summing Up

The complex web of communicating devices that surrounds us needs intelligent, real-time device tracking to extract its full benefits. Conventional streaming analytics architectures have not kept up with the growing demands of IoT. With its combination of fast data storage, low-latency processing and ease of use, in-memory computing can fill the gap while complementing the benefits provided by historian databases and data lakes. It can add the immediate feedback that IoT applications need and boost situational awareness to a new level, finally enabling IoT to deliver on its promises.

The post Adding New Capabilities for Real-Time Analytics to Azure IoT appeared first on ScaleOut Software.

The population of intelligent IoT devices is exploding, and they are generating more telemetry than ever. Whether it’s health-tracking watches, long-haul trucks, or security sensors, extracting value from these devices requires streaming analytics that can quickly make sense of the telemetry and intelligently react to handle an emerging issue or capture a new opportunity.

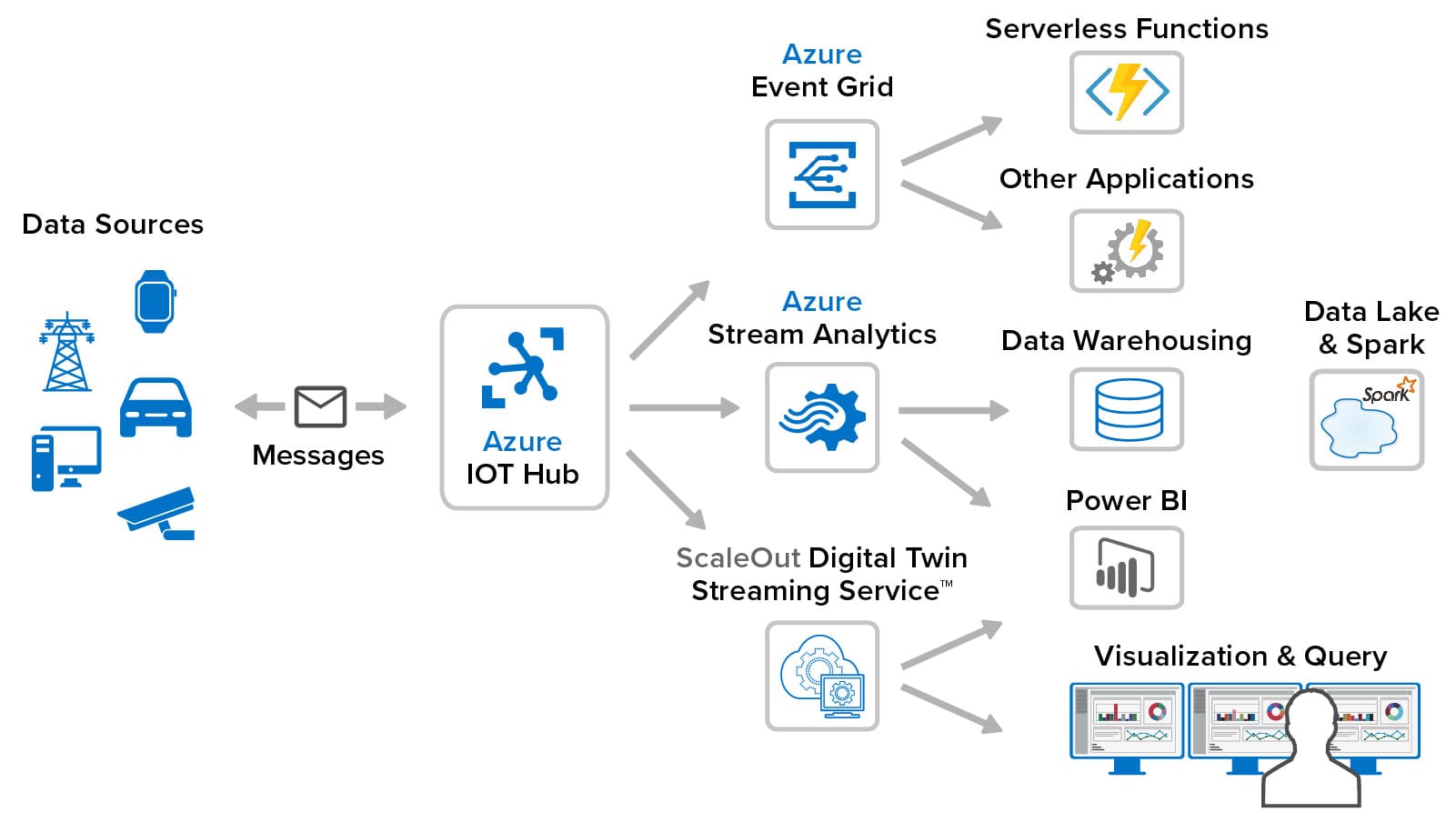

The Microsoft Azure IoT ecosystem offers a rich set of capabilities for processing IoT telemetry, from its arrival in the cloud through its storage in databases and data lakes. Acting as a switchboard for incoming and outgoing messages, Azure IoT Hub forms the core of these capabilities. It provides support for a range of message protocols, buffering, and scalable message distribution to downstream services. These services include:

- Azure Event Grid for routing incoming events to a variety of handlers, including serverless functions, webhooks, storage queues, and other services

- Azure IoT Central for managing devices, visualizing incoming telemetry on a dashboard, triggering alerts, and integrating with line-of-business applications

- Azure Stream Analytics for simultaneously analyzing aggregated telemetry streams using extended SQL queries to extract patterns that can be fed to workflows, including alerts, serverless functions, and data storage with offline processing

- Azure Time Series Insights for storing time-series data and then exploring, modeling, and querying it to gain insights, such as identifying anomalies and trends, with a rich set of analytics tools

- Azure Digital Twins for creating a graphical representation of the assets within an organization using the Digital Twin Definition Language, processing events, and visualizing entity graphs to display and query status

While Azure IoT offers a wide variety of services, it focuses on visualizing entities and events, extracting insights from telemetry streams with queries, and migrating events to storage for more intensive offline analysis. What’s missing is continuous, real-time introspection on the dynamic state of IoT devices to predict and immediately react to significant changes in their state. These capabilities are vitally important to extract the full potential of real-time intelligent monitoring.

For example, here are some scenarios in which stateful, real-time introspection can create important insights. Telemetry from each truck in a fleet of thousands can provide numerous parameters about the driver (such as repeated lateral accelerations at the end of a long shift) that might indicate the need for a dispatcher to intervene. A health tracking device might report a combination of signals (blood pressure, blood oxygen, heart rate, etc.) that indicates an emerging medical issue for an individual with a known medical history and current medications. A security sensor in a key-card access system might indicate an unusual pattern of building entries for an employee who has given notice of resignation.

In all of these examples, the event-processing system needs to be able to independently analyze events for each data source (IoT device) within milliseconds, and it needs immediate access to dynamic, contextual information about the data source that it can use to perform real-time predictive analytics. In short, what’s needed is a scalable, in-memory computing platform connected directly to Azure IoT Hub which can ingest and process event messages separately for each data source using memory-based state information maintained for that data source.

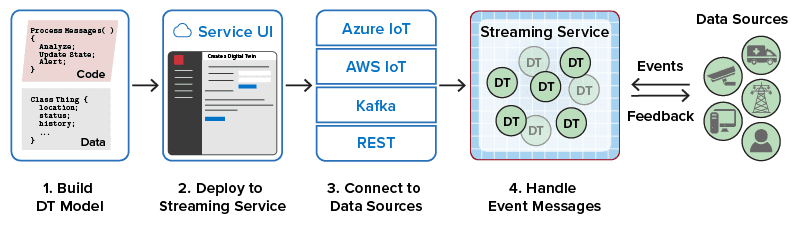

The ScaleOut Digital Twin Streaming Service provides precisely these capabilities. It does this by leveraging the digital twin concept (not to be confused with Azure Digital Twins) to create an in-memory software object for every data source that it is tracking. This object, called a real-time digital twin, holds dynamic state information about the data source and is made available to the application’s event handling code, which runs within 1-2 milliseconds whenever an incoming event is received. Application developers write event handling code in C#, Java, JavaScript, or using a rules engine; this code encapsulates application logic, such as a predictive analytics or machine learning algorithm. Once the real-time digital twin’s model (that is, its state data and event handling code) has been created, the developer can use an intuitive UI to deploy it to the streaming service and connect to Azure IoT Hub.


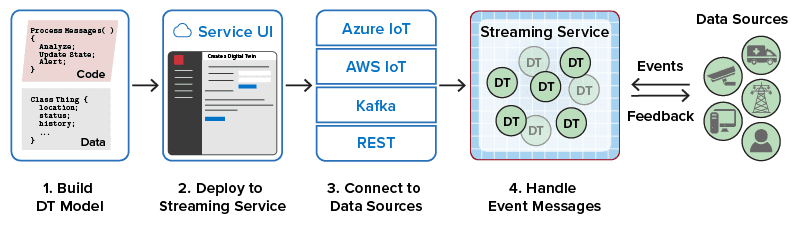

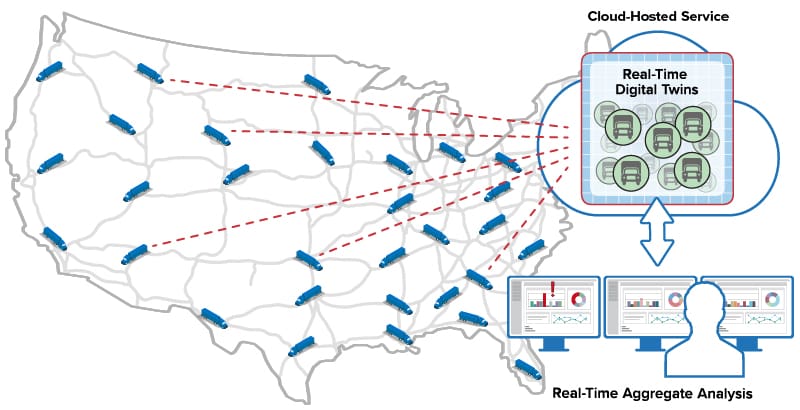

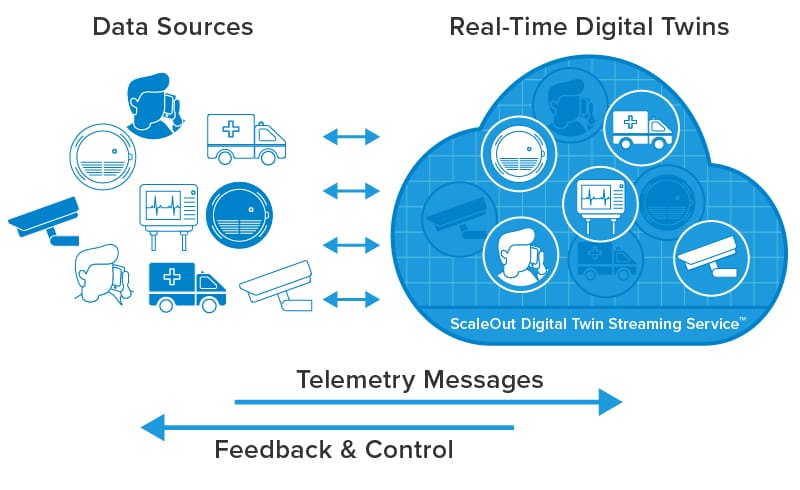

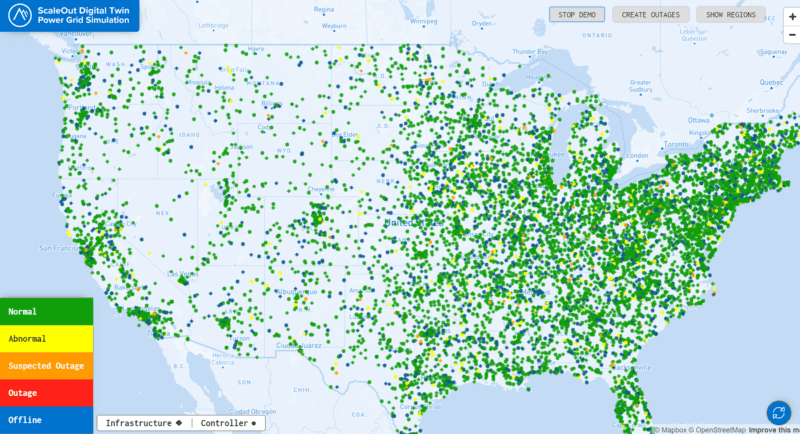

As shown in the following diagram, ScaleOut’s streaming service connects to Azure IoT Hub, runs alongside other Azure IoT services, and provides unique capabilities that enhance the overall Azure IoT ecosystem:

ScaleOut’s streaming service handles all the details of message delivery, data management, code orchestration, and scalable execution. This makes developing streaming analytics code for real-time digital twins fast and easy. The application developer just focuses on writing a single method to process incoming messages, run application-specific analytics, update state information about the data source, and generate alerts as needed. The optional rules engine further simplifies the development process with a UI for specifying state data and a sequential list of business rules for describing analytics code.

How are the streaming service’s real-time digital twins different from Azure digital twins? Both services leverage the digital twin concept by providing a software entity for each IoT device that can track the parameters and state of the device. What’s different is the streaming service’s focus on real-time analytics and its use of an in-memory computing platform integrated with Azure IoT Hub to ensure the lowest possible latency and high scalability. Azure digital twins serve a different purpose. They are intended to maintain a graphical representation of an organization’s entities for management and querying current status; they are not designed to implement real-time analytics using application-defined algorithms.

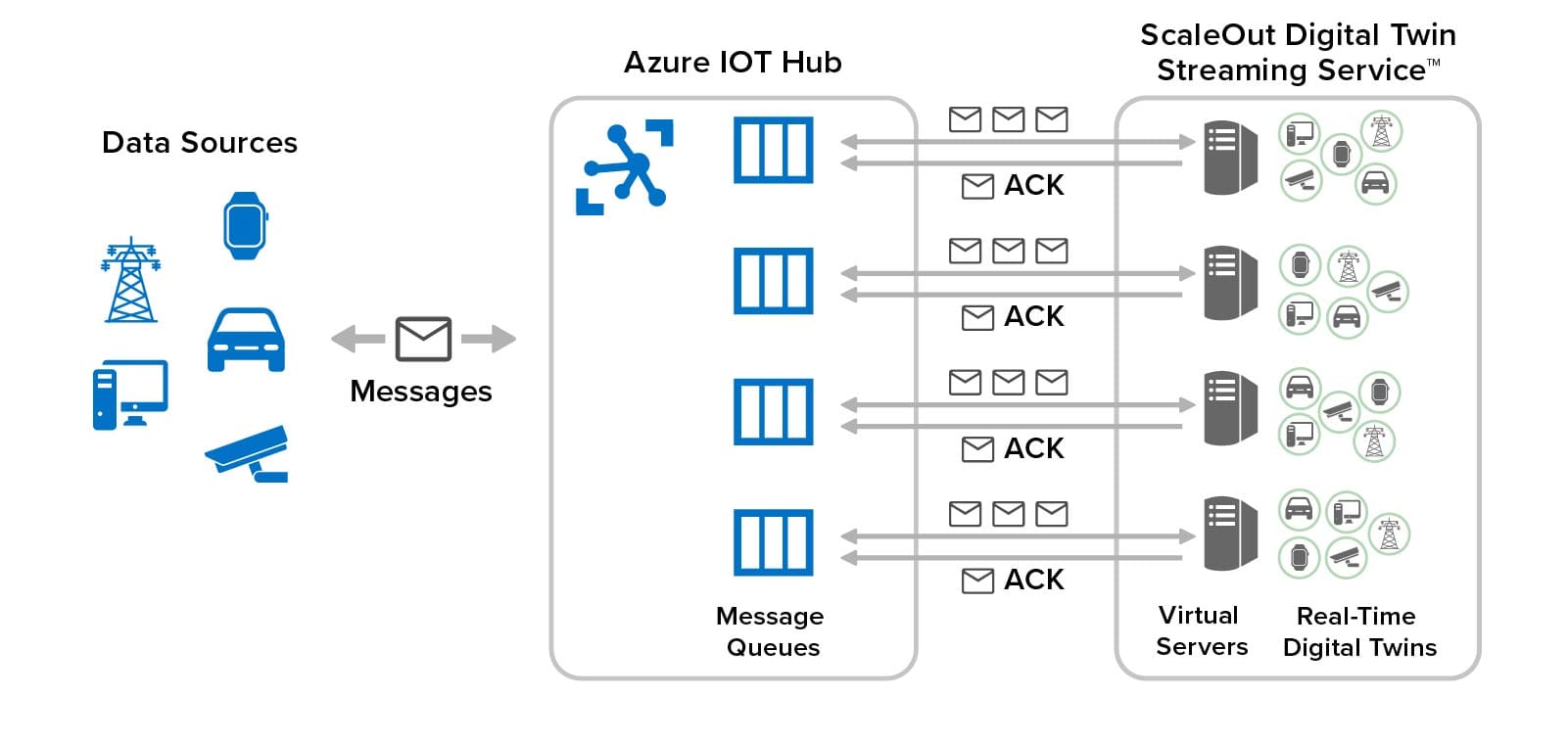

The following diagram illustrates the integration of ScaleOut’s streaming service with Azure IoT Hub to provide fast, scalable event handling with low-latency access to memory-based state for all data sources. It shows how real-time digital twins are distributed across multiple virtual servers organized into an in-memory computing cluster connected to Azure IoT Hub. The streaming service uses multiple message queues in Azure IoT Hub to scale message delivery and event processing:
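One simple way to picture how twins could be spread across the servers of a cluster is hash partitioning on the data source's identifier. The source does not describe the service's actual placement scheme, so the Python sketch below is an assumption for illustration only; the function names `owner_index` and `route` and the `device_id` message field are hypothetical. It uses a stable hash so that every message from a given device always reaches the server that holds that device's twin.

```python
import hashlib


def owner_index(device_id: str, server_count: int) -> int:
    """Map a device id to a server index using a stable hash."""
    digest = hashlib.sha256(device_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % server_count


def route(messages, server_count: int):
    """Group messages by owning server so each server sees only its own twins."""
    queues = [[] for _ in range(server_count)]
    for message in messages:
        queues[owner_index(message["device_id"], server_count)].append(message)
    return queues
```

Plain modulo hashing reshuffles most devices when the number of servers changes; production systems commonly use consistent hashing or fixed partition tables to limit that movement.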

As IoT devices proliferate and become more intelligent, it’s vital that our cloud-based event-processing systems be able to perform continuous and deep introspection in real time. This enables applications to react quickly, effectively, and autonomously to emerging challenges, such as security threats and safety issues, as well as to new opportunities, such as real-time ecommerce recommendations. While there is an essential role for query and offline analytics to optimize IoT services, the need for highly granular, real-time analytics continues to grow. ScaleOut’s Digital Twin Streaming Service is designed to meet this need as an integral part of the Azure IoT ecosystem.

To learn more about using ScaleOut’s Digital Twin Streaming Service in the Microsoft Azure cloud, visit the Azure Marketplace.

The post Deploying Real-Time Digital Twins On Premises with ScaleOut StreamServer DT appeared first on ScaleOut Software.

With the ScaleOut Digital Twin Streaming Service, an Azure-hosted cloud service, ScaleOut Software introduced breakthrough capabilities for streaming analytics using the real-time digital twin concept. This new software model enables applications to easily analyze telemetry from individual data sources in 1-3 milliseconds while maintaining state information about data sources that deepens introspection. It also provides a basis for applications to create key status information that the streaming platform aggregates every few seconds to maximize situational awareness. Because it runs on a scalable, highly available in-memory computing platform, it can do all this simultaneously for hundreds of thousands or even millions of data sources.


The unique capabilities of real-time digital twins can provide important advances for numerous applications, including security, fleet telematics, IoT, smart cities, healthcare, and financial services. These applications are all characterized by numerous data sources which generate telemetry that must be simultaneously tracked and analyzed, while maintaining overall situational awareness that immediately highlights problems of concern and/or opportunities of interest. For example, consider some of the new capabilities that real-time digital twins can provide in fleet telematics and vaccine distribution during COVID-19.

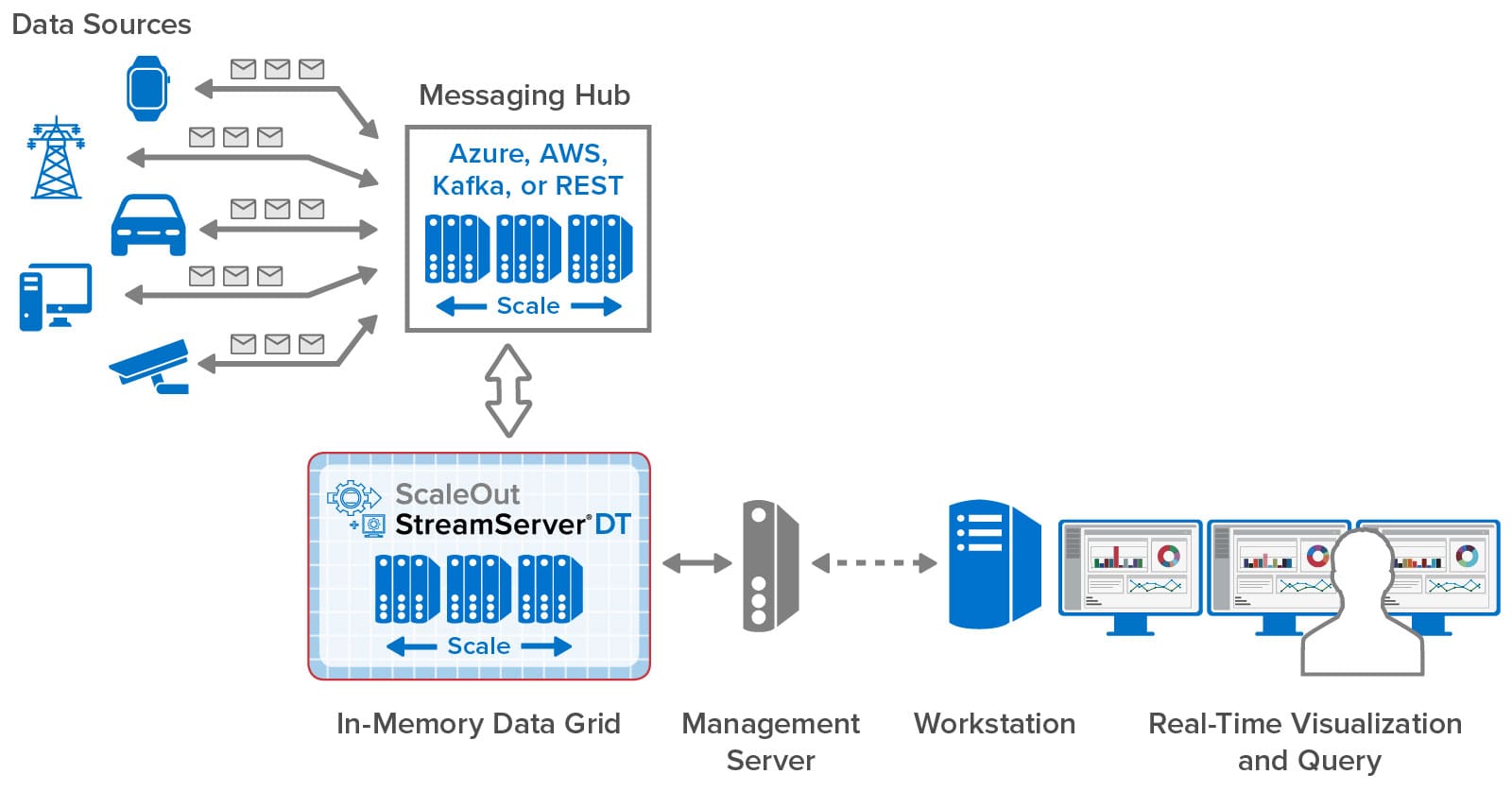

To address security requirements or the need for tight integration with existing infrastructure, many organizations need to host their streaming analytics platform on-premises. ScaleOut StreamServer® DT was created to meet this need. It combines the scalable, battle-tested in-memory data grid that powers ScaleOut StreamServer with the graphical user interface and visualization features of the cloud service in a unified, on-premises deployment. This gives users all of the capabilities of the ScaleOut Digital Twin Streaming Service with complete infrastructure control.

As illustrated in the following diagram, ScaleOut StreamServer DT installs its management console on a standalone server that connects to ScaleOut StreamServer’s in-memory data grid. This console hosts the graphical user interface that is securely accessed by remote workstations within an organization. It also deploys real-time digital twin models to the in-memory data grid, which hosts instances of digital twins (one per data source) and runs application-defined code to process incoming messages. Messages are delivered to the grid using messaging hubs, such as Azure IoT Hub, AWS IoT Core, Kafka, a built-in REST service, or directly using APIs.

The management console installs as a set of Docker containers on the management server. This simplifies the installation process and ensures portability across operating systems. Once installed, users can create accounts to control access to the console, and all connections are secured using SSL. The results of aggregate analytics and queries performed within the in-memory data grid can then be accessed and visualized on workstations running throughout an organization.

Because ScaleOut’s in-memory data grid runs in an organization’s data center and avoids the requirement to use a cloud-hosted message hub or REST service, incoming messages from data sources can be processed with minimum latency. In addition, application code running in real-time digital twins can access local resources, such as databases and alerting systems, with the best possible performance and security. Use of dedicated computing resources for the in-memory data grid delivers the highest possible throughput for message processing and real-time analytics.

While cloud hosting of streaming analytics as a SaaS (software-as-a-service) offering creates clear advantages in reducing capital costs and providing access to highly elastic computing resources, it may not be suitable for organizations which need to maintain full control of their infrastructures to address security and performance requirements. ScaleOut StreamServer DT was designed to meet these needs and deliver the important, unique benefits of streaming analytics using real-time digital twins to these organizations.

The post Real-Time Digital Twins Can Help Expedite Vaccine Distribution appeared first on ScaleOut Software.

Agile In-Memory Software Can Track the Dynamic Rollout of Vaccine Distribution and Delivery to Quickly Spot Problems

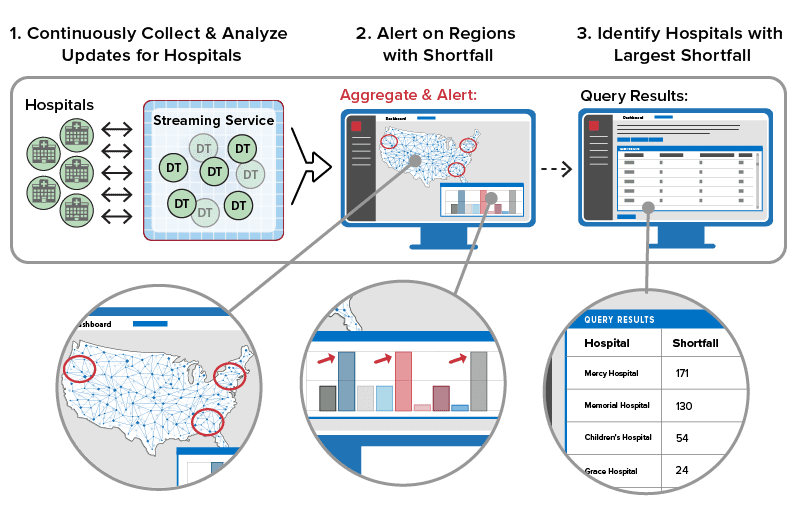

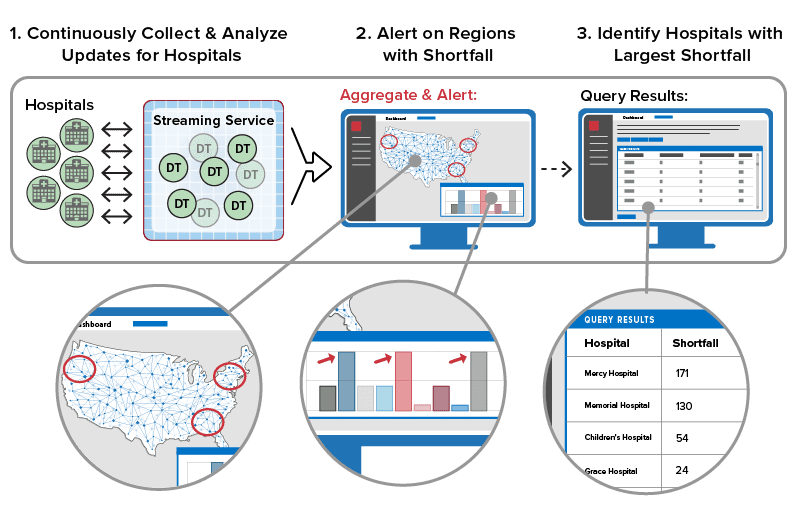

Getting the COVID-19 crisis under control requires that we put in place an effective process for vaccine distribution so that the country can get to herd immunity as fast as possible. We are faced with quickly building a nationwide logistics network and standing up well more than 50,000 vaccination centers. Making all this work smoothly means that managers need accurate, up-to-the-minute information about all aspects of this operation, including:

- Where are all the vaccine shipments right now?

- What is the shortfall in vaccines at each center?

- How many people are waiting for vaccines at each center?

- How many qualified personnel are available at each center?

- Which centers have the most urgent needs and need immediate attention?

- Is vaccine distribution underserving certain regions or population groups?

Given the unique and highly dynamic nature of this challenge, we need software solutions that are agile enough to adapt to evolving needs and scalable enough to quickly handle a daunting amount of fast-changing data. Conventional, enterprise data architectures take months to develop and are complex to change. Is there a simpler, faster way to wrangle this data for crisis managers?

In-Memory Computing with Real-Time Digital Twins: Fast and Agile

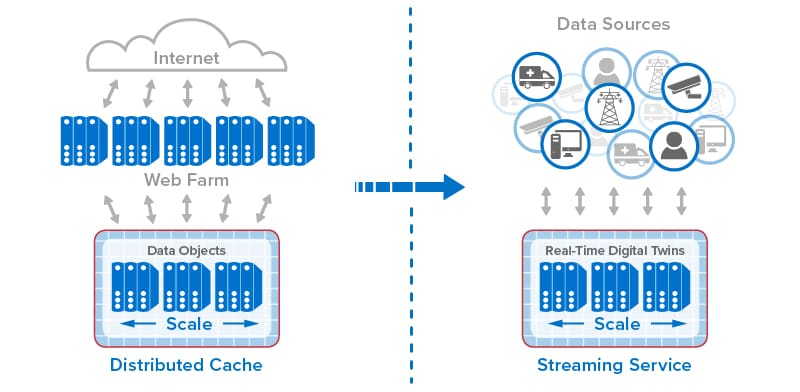

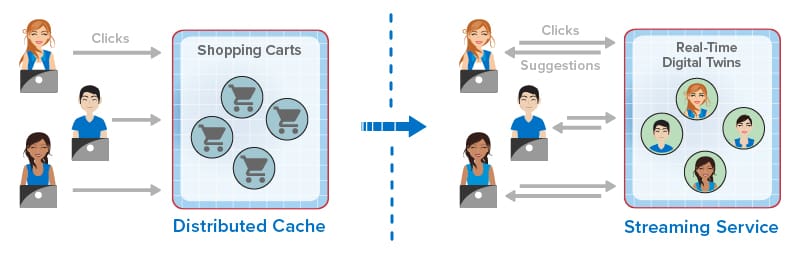

A software technology called in-memory computing has evolved over the last twenty years to grapple with the challenge of tracking and analyzing fast-changing data. Its two core competencies are speed and scalability. Widely used to track ecommerce shopping carts, financial transactions, airline flights and much more, in-memory computing can quickly store, retrieve, and analyze large volumes of live data. This powerful technology may also be just what we need to help tackle the challenge of vaccine distribution.

In the last two years, the concept of real-time digital twins has emerged to let in-memory computing track incoming data streams from hundreds of thousands of data sources, maintain pertinent information about each data source, and immediately alert when unusual conditions are detected. The power of this approach lies in its ability to simplify the problem for application developers. It encapsulates code that just focuses on analyzing messages from a single data source as they flow in, and it maintains an up-to-the-second assessment of the data source’s status. Real-time digital twins are both easy to develop and easy to change as needs evolve. The in-memory computing system which hosts them typically runs as a cloud service (such as the ScaleOut Digital Twin Streaming Service) that transparently scales to handle as many data sources as needed.

Real-Time Digital Twins Can Help Expedite Vaccine Distribution

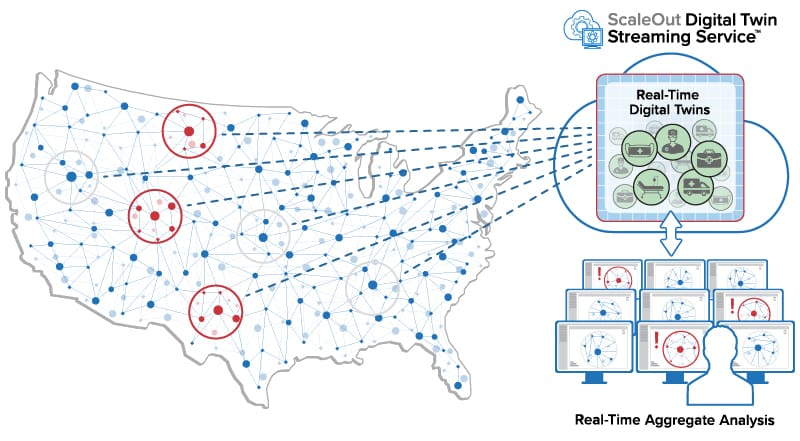

To track the distribution and delivery of COVID-19 vaccines, a real-time digital twin can be deployed for each shipment in transit and for each vaccination center. For shipments, the digital twins can track location, destination, and current condition on a second-by-second basis, allowing managers to instantly know where a shipment is and whether its viability is at risk, for example, due to a temperature change. For vaccination centers, real-time digital twins can track location, the supply of vaccines, current demand (number of recipients), availability of trained personnel to perform injections, and other parameters. Code in the digital twin continuously analyzes incoming messages to determine whether a problem exists or is likely to occur, and it alerts managers to urgent issues within a few milliseconds. This allows managers to keep track of which of the 50,000 centers need immediate assistance.

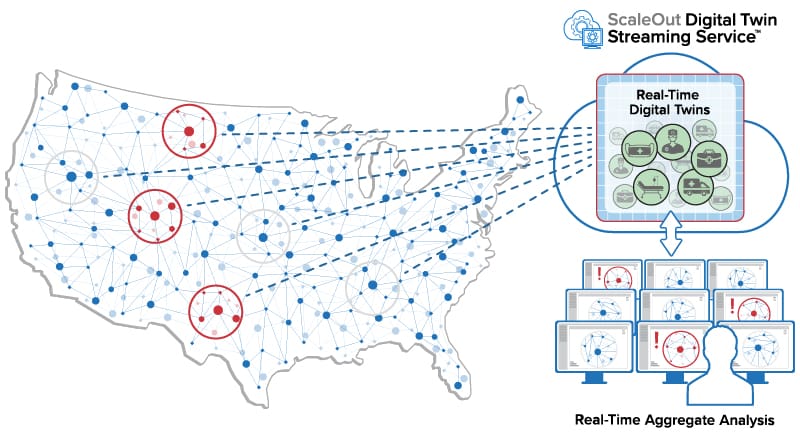

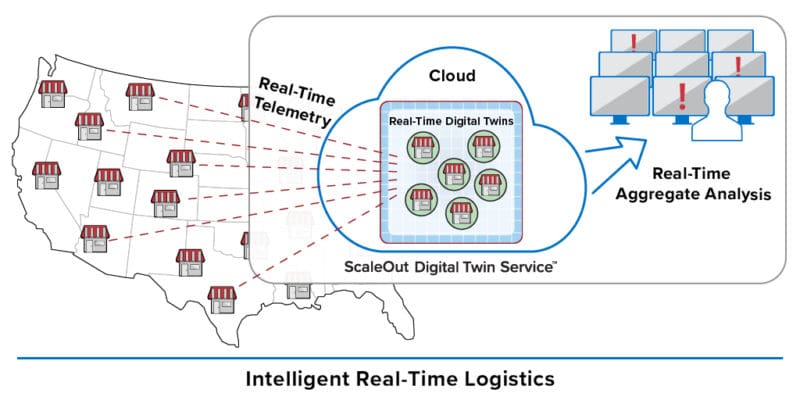

The following diagram illustrates the use of real-time digital twins to track thousands of vaccine shipments and vaccination centers. The red dotted lines depict message streams flowing from data sources located throughout the country over the Internet to their corresponding real-time digital twins hosted in the cloud service.

![]()

Let’s take a closer look at the real-time digital twin for a vaccination center. Using a simple web app, personnel at the vaccination center send periodic messages updating information about supplies, personnel, recipients, and wait times. The real-time digital twin for this center records this data and then analyzes it for issues, such as a shortfall in supplies, lack of available personnel, or a surge in incoming recipients. It can then compute an assessment of the urgency for assistance (call it an alert level) which can be compared to other centers to identify which ones have the most urgent issues. If the alert level becomes sufficiently high, the analysis code can immediately notify managers. By analyzing incoming messages, real-time digital twins keep track of the latest status for all vaccination centers.

Here’s an illustration of a vaccination center sending messages to its real-time digital twin running in the cloud. It shows some of the state information that the twin maintains and the code which analyzes incoming messages as they arrive:

![]()
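The analysis described above for a vaccination center could be sketched in Python as follows. This is a simplified illustration under stated assumptions, not the service's actual model: the class `VaccinationCenterTwin`, the message field names, the scoring rules, and the `NOTIFY_THRESHOLD` value are all hypothetical. The twin records each report, derives an alert level from shortfall and staffing, and signals when managers should be notified.

```python
from dataclasses import dataclass

NOTIFY_THRESHOLD = 3  # hypothetical alert level at which managers are notified


@dataclass
class VaccinationCenterTwin:
    center_id: str
    doses_on_hand: int = 0
    staff_available: int = 0
    recipients_waiting: int = 0
    alert_level: int = 0

    def process_message(self, msg: dict) -> bool:
        """Record the latest report; return True if managers should be notified."""
        # Fields missing from a message keep their previously reported value.
        for name in ("doses_on_hand", "staff_available", "recipients_waiting"):
            if name in msg:
                setattr(self, name, msg[name])

        shortfall = max(0, self.recipients_waiting - self.doses_on_hand)
        level = 0
        if shortfall > 0:                                # not enough doses
            level += 1
        if shortfall > 0.5 * self.recipients_waiting:    # severe shortfall
            level += 1
        if self.recipients_waiting > 20 * self.staff_available:  # understaffed
            level += 1
        self.alert_level = level
        return level >= NOTIFY_THRESHOLD
```

Because each twin stores its own `alert_level`, the values can later be compared across centers to rank which ones need help first.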

Aggregate Analytics Boost Situational Awareness

When dealing with thousands of dynamic data sources, managers can use real-time digital twins to serve as highly responsive watchdogs that continuously evaluate incoming information for changes that may need attention. This helps managers easily track thousands of data sources and focus on the most pressing concerns.

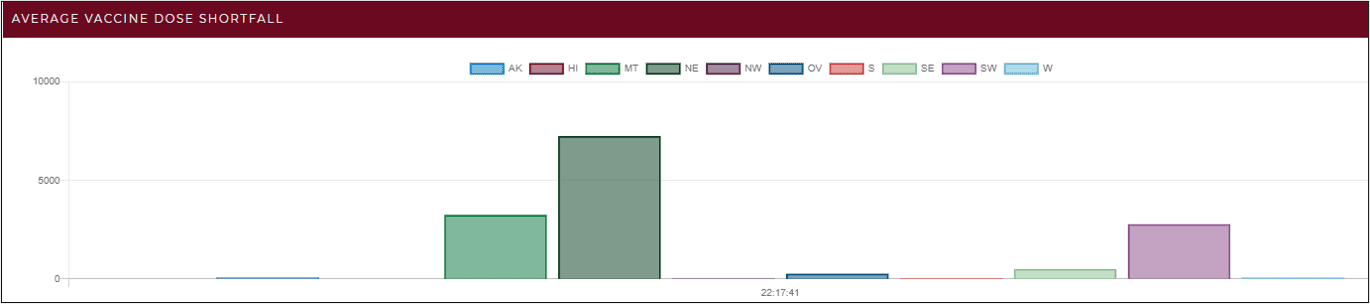

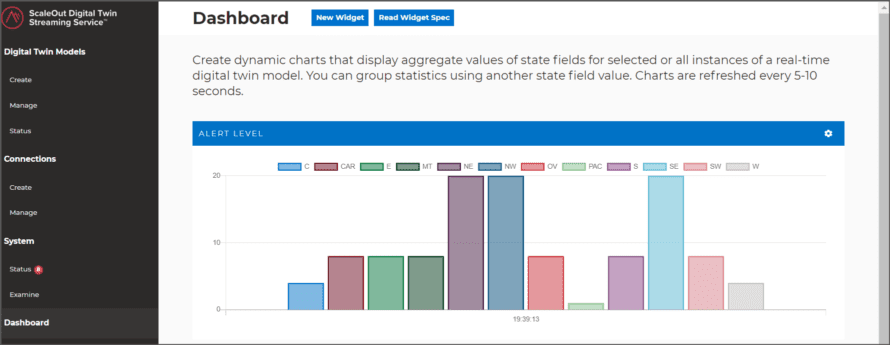

To further boost situational awareness, the in-memory computing platform can group and aggregate data held in the real-time digital twins every few seconds to help surface widespread changes that need strategic responses. For example, the average shortfall in vaccine doses for all centers in each region of the country can be aggregated to track where shortfalls may be occurring. This information can be visualized as shown in the chart below, which is updated every few seconds to provide managers with the most current view of the situation:

Likewise, this technique can be used to aggregate the average wait times for all vaccination centers by county. This can help determine where bottlenecks in vaccine delivery are occurring and enable managers to render assistance by relocating personnel from less busy centers to overwhelmed ones.

Aggregate analytics of data maintained by real-time digital twins can also be used to track and validate the equitable distribution of vaccines. For example, it can aggregate information collected from each center about the demographics of vaccine recipients, such as age and ethnicity, and characteristics of the centers themselves, such as hospitals vs pharmacies and urban vs rural. This allows key real-time statistics to be tracked, such as whether certain groups or regions are being underserved and whether hospitals have shorter wait times than pharmacies.
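The aggregation step described in this section amounts to a group-and-average over the state held in the twins. The short Python sketch below illustrates that calculation on a single machine; the function name `average_by_group` and the field names in the sample data are assumptions, and a real platform would run the equivalent computation in parallel across its cluster every few seconds.

```python
from collections import defaultdict
from statistics import mean


def average_by_group(twin_states, group_key: str, value_key: str) -> dict:
    """Average one state property across twins, grouped by another property."""
    groups = defaultdict(list)
    for state in twin_states:
        groups[state[group_key]].append(state[value_key])
    return {group: mean(values) for group, values in groups.items()}


# Hypothetical snapshot of state held by four vaccination center twins.
centers = [
    {"region": "Northeast", "county": "A", "dose_shortfall": 10, "wait_minutes": 30},
    {"region": "Northeast", "county": "B", "dose_shortfall": 30, "wait_minutes": 50},
    {"region": "Southwest", "county": "C", "dose_shortfall": 0, "wait_minutes": 15},
    {"region": "Southwest", "county": "C", "dose_shortfall": 40, "wait_minutes": 45},
]

shortfall_by_region = average_by_group(centers, "region", "dose_shortfall")
wait_by_county = average_by_group(centers, "county", "wait_minutes")
```

The same helper covers the regional shortfall chart, the per-county wait times, and demographic breakdowns, since only the grouping and value properties change.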

Summing Up

Without a doubt, distributing and delivering COVID-19 vaccines quickly and effectively over the next few months presents formidable challenges, namely:

- Ensuring that logistics managers get the critical information they need in a timely manner

- Avoiding the complexity and delay required to build custom information management systems that can provide this information

Because it is fast, scalable, and agile, in-memory computing technology with real-time digital twins can serve as a valuable tool for tracking the status of many thousands of vaccination centers and shipments. This innovative software infrastructure can quickly be programmed to analyze vital parameters and statistics in milliseconds and aggregate key data every few seconds. It offers managers a powerful and flexible means for helping ensure fast, efficient vaccine distribution and delivery.

The post Use Digital Twins for the Next Generation in Telematics appeared first on ScaleOut Software.

Real-Time Digital Twins Can Add Important New Capabilities to Telematics Systems and Eliminate Scalability Bottlenecks

Rapid advances in the telematics industry have dramatically boosted the efficiency of vehicle fleets and have found wide-ranging applications from long-haul transport to usage-based insurance. Incoming telemetry from a large fleet of vehicles provides a wealth of information that can help streamline operations and maximize productivity. However, telematics architectures face challenges in responding to telemetry in real time. Competitive pressures should spark innovation in this area, and real-time digital twins can help.

Current Telematics Architecture

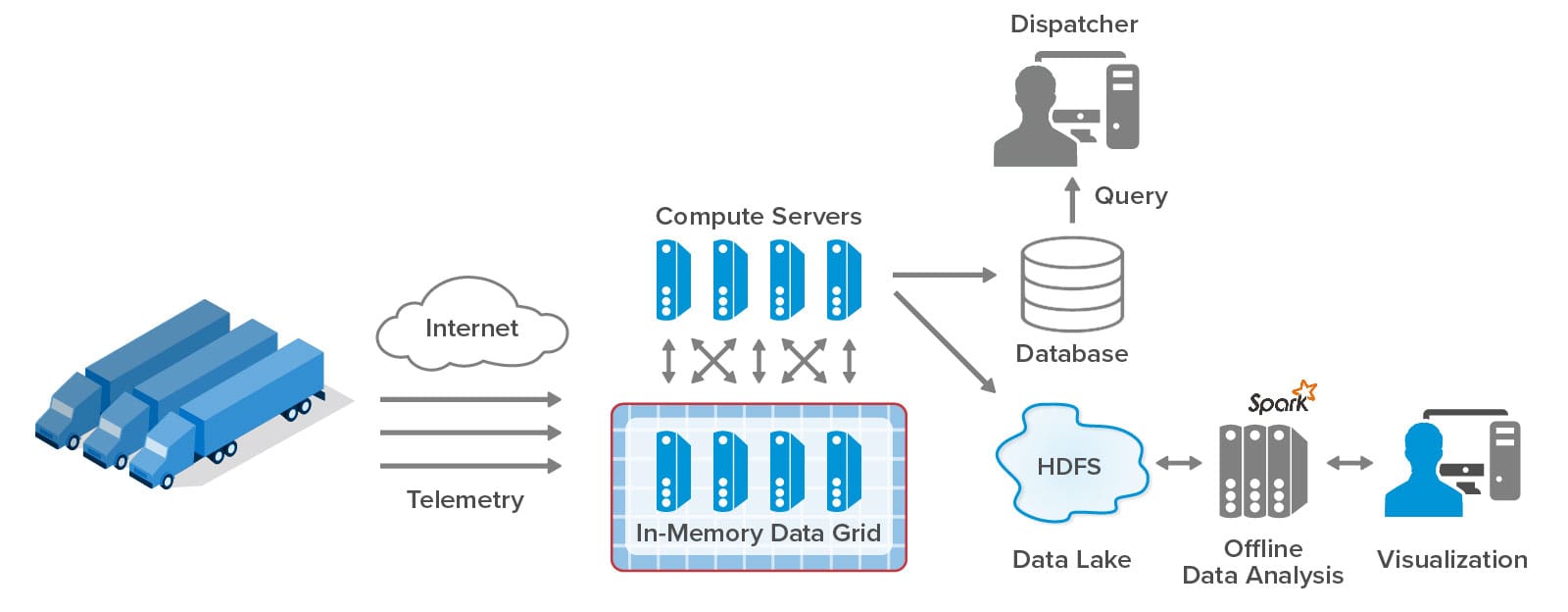

The volume of incoming telemetry challenges current telematics systems to keep up and quickly make sense of all the data. Here’s a typical telematics architecture for processing telemetry from a fleet of trucks:

Each truck today has a microprocessor-based sensor hub which collects key telemetry, such as vehicle speed and acceleration, engine parameters, trailer parameters, and more. It sends messages over the cell network to the telematics system, which uses its compute servers (that is, web and application servers) to store incoming messages as snapshots in an in-memory data grid, also known as a distributed cache. Every few seconds, the application servers collect batches of snapshots and write them to the database where they can be queried by dispatchers managing the fleet. At the same time, telemetry snapshots are stored in a data lake, such as HDFS, for offline batch analysis and visualization using big data tools like Spark. The results of batch analysis are typically produced after an hour’s delay or more. Lastly, all telemetry is archived for future use (not shown here).

This telematics architecture has evolved to handle ever increasing message rates (often reaching 2K messages per second), make up-to-the-minute information available to dispatchers, and feed offline analytics. Using a database, dispatchers can query raw telemetry to determine the information they need to manage the fleet in real time. This enables them to answer questions such as:

- “Where is truck 7563?”

- “How long has the driver been on the road?”

- “Which trucks have abnormally high oil temperature?”

Offline analytics can mine the telemetry for longer term statistics that help managers assess the fleet’s overall performance, such as the average length of delivery or routing delays, the fleet’s change in fuel efficiency, the number of drivers exceeding their allowed shift times, and the number and type of mechanical issues. These statistics help pinpoint areas where dispatchers and other personnel can make strategic improvements.

Challenges for Current Architectures

There are three key limitations in this telematics architecture which impact its ability to provide managers with the best possible situational awareness. First, incoming telemetry from trucks in the fleet arrives too fast to be analyzed immediately. The architecture collects messages in snapshots but leaves it to human dispatchers to digest this raw information by querying a database. What if the system could separately track incoming telemetry for each truck, look for changes based on contextual information, and then alert dispatchers when problems were identified? For example, the system could perform continuous predictive analytics on the engine’s parameters with knowledge of the engine’s maintenance history and signal if an impending failure was detected. Likewise, it could watch for hazardous driving with information about the driver’s record and medical condition. Having the system continuously introspect on the telemetry for each truck would enable the dispatcher to spot problems and intervene more quickly and effectively.

A second key limitation is the lack of real-time aggregate analysis. Since this analysis must be performed offline in batch jobs, it cannot respond to immediate issues and is restricted to assessing overall fleet performance. What if the real-time telemetry tracking for each truck could be aggregated within seconds to spot emerging issues that affect many trucks and require a strategic response? These issues could include:

- Unexpected delays in a region due to highway blockages or weather that indicate the need to inform or reroute several trucks

- An unusually large number of soon-to-be timed-out drivers or impending maintenance issues which require making immediate schedule changes to avoid downtime

- Congregated drivers who are impacting on-time deliveries

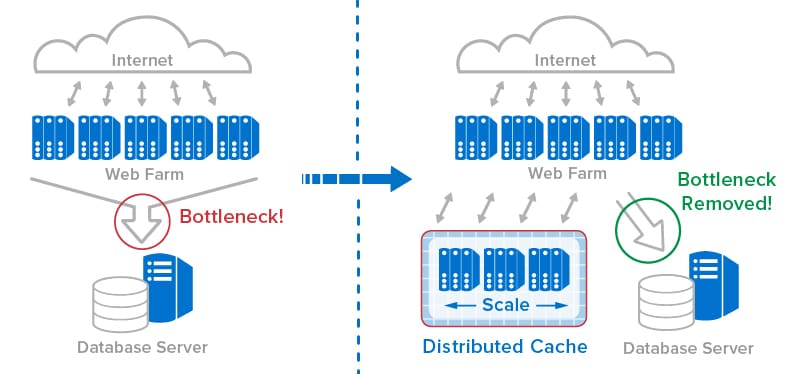

Third, the current telematics architecture has inherent scalability issues in the form of network bottlenecks. Because all telemetry is stored in the in-memory data grid and accessed by a separate farm of compute servers, the network between the grid and the server farm can quickly become a bottleneck as the incoming message rate increases. As the fleet size grows and the message rate per truck increases from once per minute to once per second, the telematics system may not be able to handle the additional incoming telemetry.

Solution: Real-Time Digital Twins

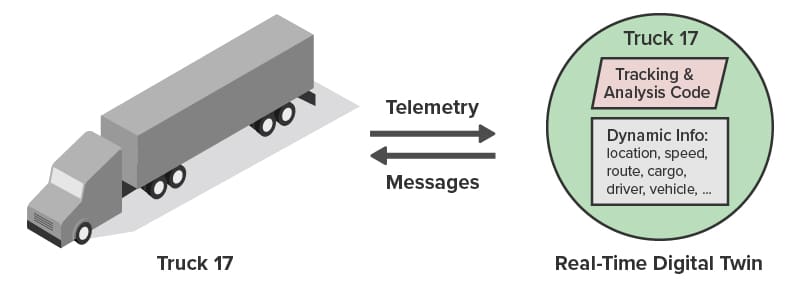

A new software architecture for streaming analytics based on the concept of real-time digital twins can address these challenges and add significant capabilities to telematics systems. This new, object-oriented software technique provides a memory-based orchestration framework for tracking and analyzing telemetry from each data source. Each real-time digital twin comprises message-processing code and state variables, which host dynamically evolving contextual information about its data source. For example, the real-time digital twin for a truck could look like this:

Instead of just snapshotting incoming telemetry, real-time digital twins for every data source immediately analyze it, update their state information about the truck’s condition, and send out alerts or commands to the truck or to managers as necessary. For example, they can track engine telemetry with knowledge of the engine’s known issues and maintenance history. They can track position, speed, and acceleration with knowledge of the route, schedule, and driver (allowed time left, driving record, etc.). Message-processing code can incorporate a rules engine or machine learning to amplify the twins’ capabilities.
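To make the combination of state variables and message-processing code more concrete, here is a minimal sketch in Python. It is not ScaleOut's API: the class name `TruckTwin`, its fields, the message keys, and the thresholds are all assumptions chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class TruckTwin:
    """Hypothetical per-truck state; names and thresholds are illustrative."""
    truck_id: str
    max_oil_temp_c: float = 120.0        # assumed limit for this engine
    known_issues: list = field(default_factory=list)
    minutes_driven: int = 0
    allowed_minutes: int = 660           # assumed shift limit
    alerts: list = field(default_factory=list)

    def process_message(self, msg: dict) -> list:
        """Analyze one telemetry message in the context of stored state."""
        new_alerts = []
        self.minutes_driven += msg.get("minutes_since_last", 0)
        if msg.get("oil_temp_c", 0.0) > self.max_oil_temp_c:
            context = ", ".join(self.known_issues) or "no known issues"
            new_alerts.append(f"{self.truck_id}: high oil temperature ({context})")
        if self.minutes_driven >= self.allowed_minutes:
            new_alerts.append(f"{self.truck_id}: driver has reached the shift limit")
        self.alerts.extend(new_alerts)
        return new_alerts


twin = TruckTwin("7563", known_issues=["coolant leak repaired"])
print(twin.process_message({"oil_temp_c": 131.0, "minutes_since_last": 5}))
```

The point of the sketch is that the alert text draws on state the twin already holds (known issues, accumulated driving time) rather than on the message alone.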

Real-time digital twins digest raw telemetry and enable intelligent alerting in the moment that assists both drivers and dispatchers in surfacing issues that need immediate attention. They are much easier to develop than typical streaming analytics applications, which have to sift through the telemetry from all data sources to pick out patterns of interest and which lack contextual information to guide them. Because they are implemented using in-memory computing techniques, real-time digital twins are fast (typically responding to messages in a few milliseconds) and transparently scalable to handle hundreds of thousands of data sources and message rates exceeding 100K messages/second.

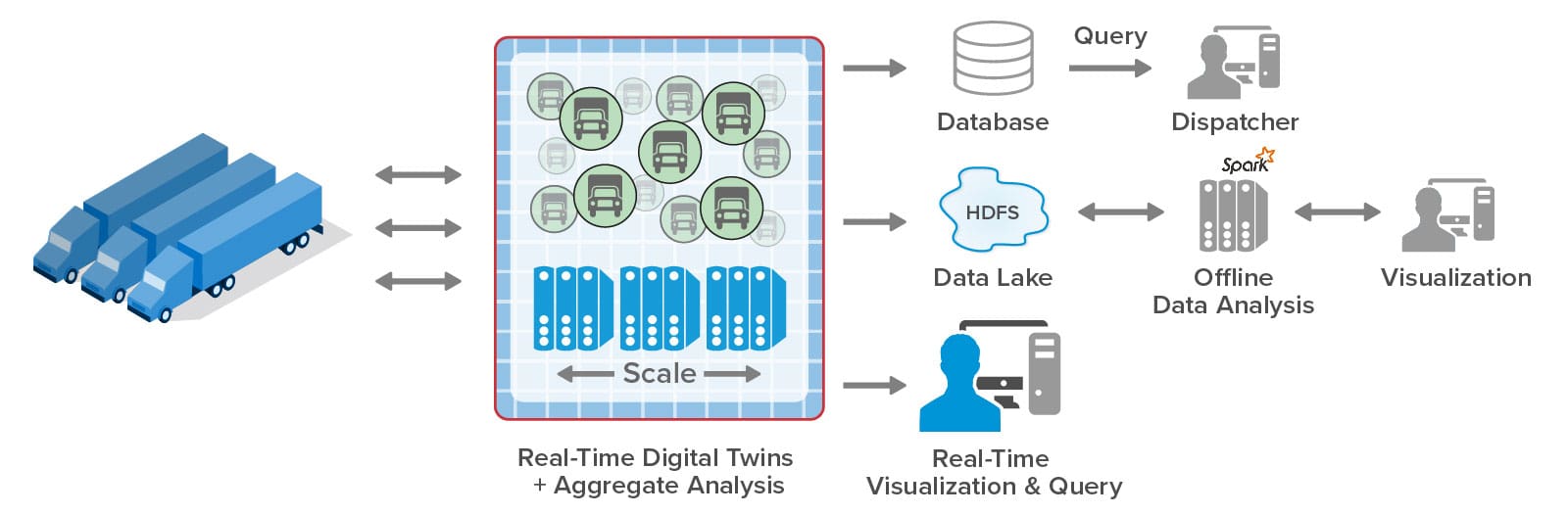

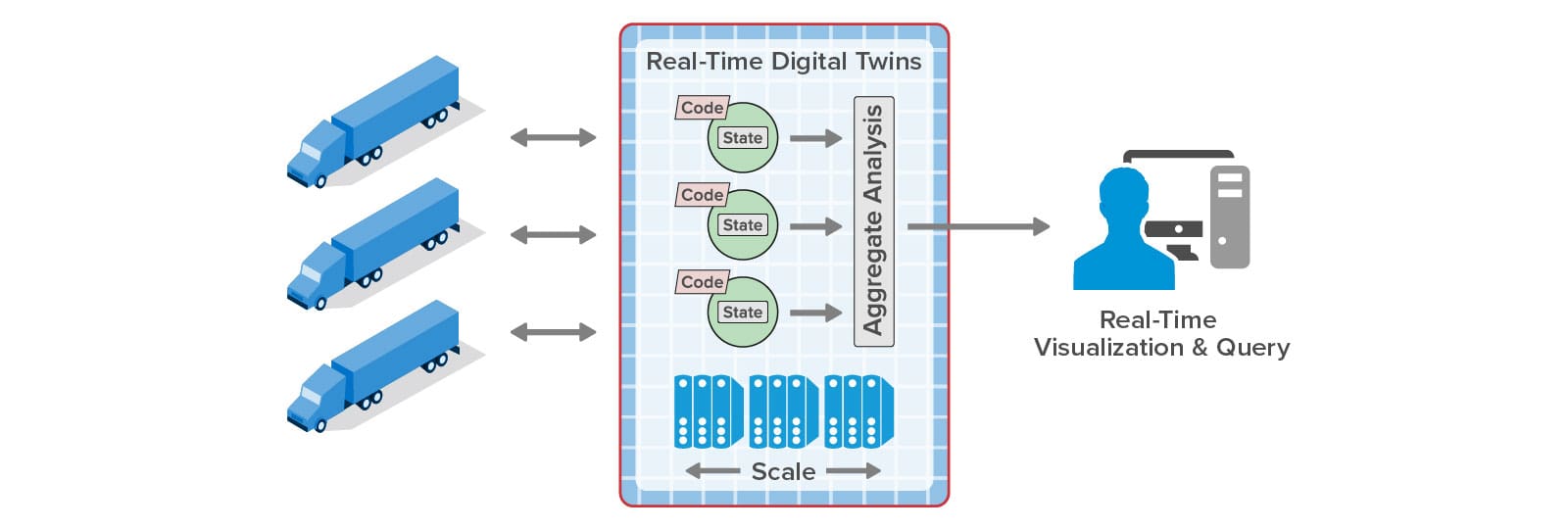

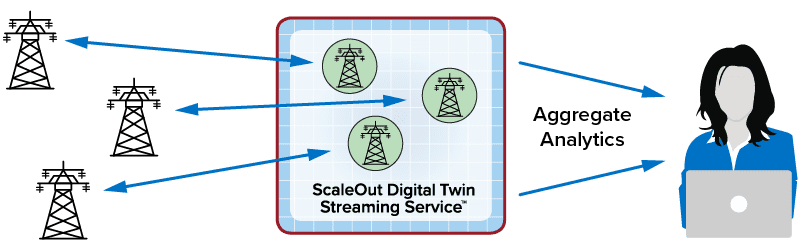

Here’s a depiction of real-time digital twins running within an in-memory data grid in a telematics architecture:

In addition to fitting within an overall architecture that includes database query and offline analytics, real-time digital twins enable built-in aggregate analytics and visualization. They provide curated state information derived from incoming telemetry that can be continuously aggregated and visualized to boost situational awareness for managers, as illustrated below. This opens up an important new opportunity to aggregate performance indicators needed in real time, such as emerging road delays by region or impending scheduling issues due to timed-out drivers, that can be acted upon while new problems are still nascent. Real-time aggregate analytics add significant new capabilities to telematics systems.
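As a rough illustration of aggregating curated twin state rather than raw telemetry, the following Python sketch counts delayed trucks per region. The record layout, the `delayed` flag, and the threshold are assumptions, not part of any ScaleOut interface.

```python
from collections import Counter

# Hypothetical curated state, one record per truck twin.
twin_states = [
    {"truck_id": "7563", "region": "Southwest", "delayed": True},
    {"truck_id": "2210", "region": "Southwest", "delayed": True},
    {"truck_id": "4187", "region": "Midwest", "delayed": False},
    {"truck_id": "9034", "region": "Midwest", "delayed": True},
]


def delayed_by_region(states):
    """Count delayed trucks per region from twin state, not raw messages."""
    return Counter(s["region"] for s in states if s["delayed"])


def regions_needing_response(states, threshold=2):
    """Regions where enough trucks are delayed to suggest a shared cause."""
    counts = delayed_by_region(states)
    return sorted(region for region, n in counts.items() if n >= threshold)


print(regions_needing_response(twin_states))
```

In a live system this aggregation would be recomputed continuously as twins update their state; here it runs once over a fixed list.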

Summing Up

While telematics systems currently provide a comprehensive feature set for managing fleets, they lack the important ability to track and analyze telemetry from each vehicle in real time and then aggregate derived information to maintain continuous situational awareness for the fleet. Real-time digital twins can address these shortcomings with a powerful, fast, easy to develop, and highly scalable software architecture. This new software technique has the potential to make a major impact on the telematics industry.

To learn more about real-time digital twins in action, take a look at ScaleOut Software’s streaming service for hosting real-time digital twins in the cloud or on-premises here.

The post Use Digital Twins for the Next Generation in Telematics appeared first on ScaleOut Software.

The post ScaleOut Software Releases New Video on Real-Time Digital Twins appeared first on ScaleOut Software.

Check out this new video which depicts the challenges in using conventional tools for streaming analytics to track and respond to thousands of data sources in a live system. Whether you are keeping track of a fleet of trucks or sensors in a smart city, the overwhelming amount of incoming telemetry from countless data sources can create a “data monster” that threatens your ability to perform real-time monitoring and maintain the necessary situational awareness.

As the video shows, ScaleOut’s real-time digital twins running in the ScaleOut Digital Twin Streaming Service can tame your data monster by separately tracking each data source using dynamic state information. They enable fast introspection on dynamic changes and immediate, focused responses. In addition, real-time digital twins continuously gather information which the streaming service can aggregate and visualize in real time to quickly surface issues and enable strategic responses.

Grab your popcorn and then click on the image below to watch the video:

We hope you enjoyed the video. Here’s how to learn more:

- To learn more about the value of real-time digital twins in streaming analytics, click here.

- To learn more about the ScaleOut Digital Twin Streaming Service, click here.

- For detailed technical information, take a look at the User Guide here.

- To contact us and talk about how real-time digital twins can help tame your data monster, click here.

The post Using Real-Time Digital Twins for Corporate Contact Tracing appeared first on ScaleOut Software.

Until a COVID-19 vaccine is widely available, getting back to work means keeping a close watch for outbreaks and quickly containing them when they occur. While the prospects for accomplishing this within large companies seem daunting, tracking contacts between employees may be much easier than for the public at large. This blog post explains how a software application built with a new software construct called real-time digital twins makes this possible.

Tracking Employees Using Real-Time Digital Twins

In an earlier blog post, we saw how real-time digital twins running in the ScaleOut Digital Twin Streaming Service can be used to track employees within a large company using a technique called “voluntary self-tracing.” In this post, we’ll take a closer look at its implementation in a demo application created by ScaleOut Software. We’ll also look at a companion mobile app that allows employees to log contacts with colleagues outside their immediate teams and to notify the company and their contacts if they test positive for COVID-19.

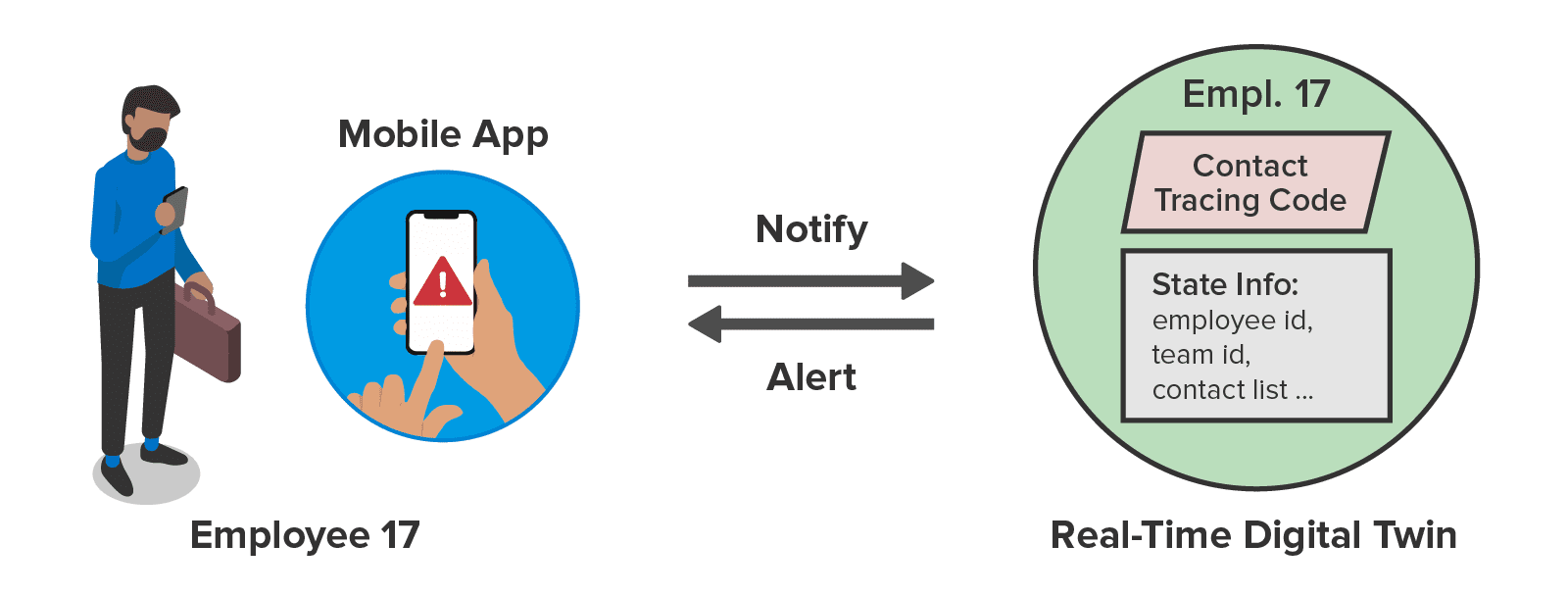

The demo application creates a memory-based real-time digital twin for each employee. Using information from the company’s organizational database, it populates each twin with the employee’s ID, team ID, department type, and location. The twin also keeps a list of the employee’s contacts within the organization (as well as community contacts, discussed below). This allows immediate colleagues and their contacts to be notified if an employee tests positive. The following diagram illustrates an employee’s real-time digital twin and the state data it holds; details about the contact tracing code are explained below:

The twin automatically populates its contact list with the other members of the employee’s team, based on the expectation that team members are in daily contact. Using the mobile app, employees can log one-time and recurring contacts with colleagues in other teams, possibly at different office locations. In addition, they can log contacts outside the company, such as taxi rides, airline flights, and meals at restaurants, so that community members can be notified if an employee was exposed to COVID-19.

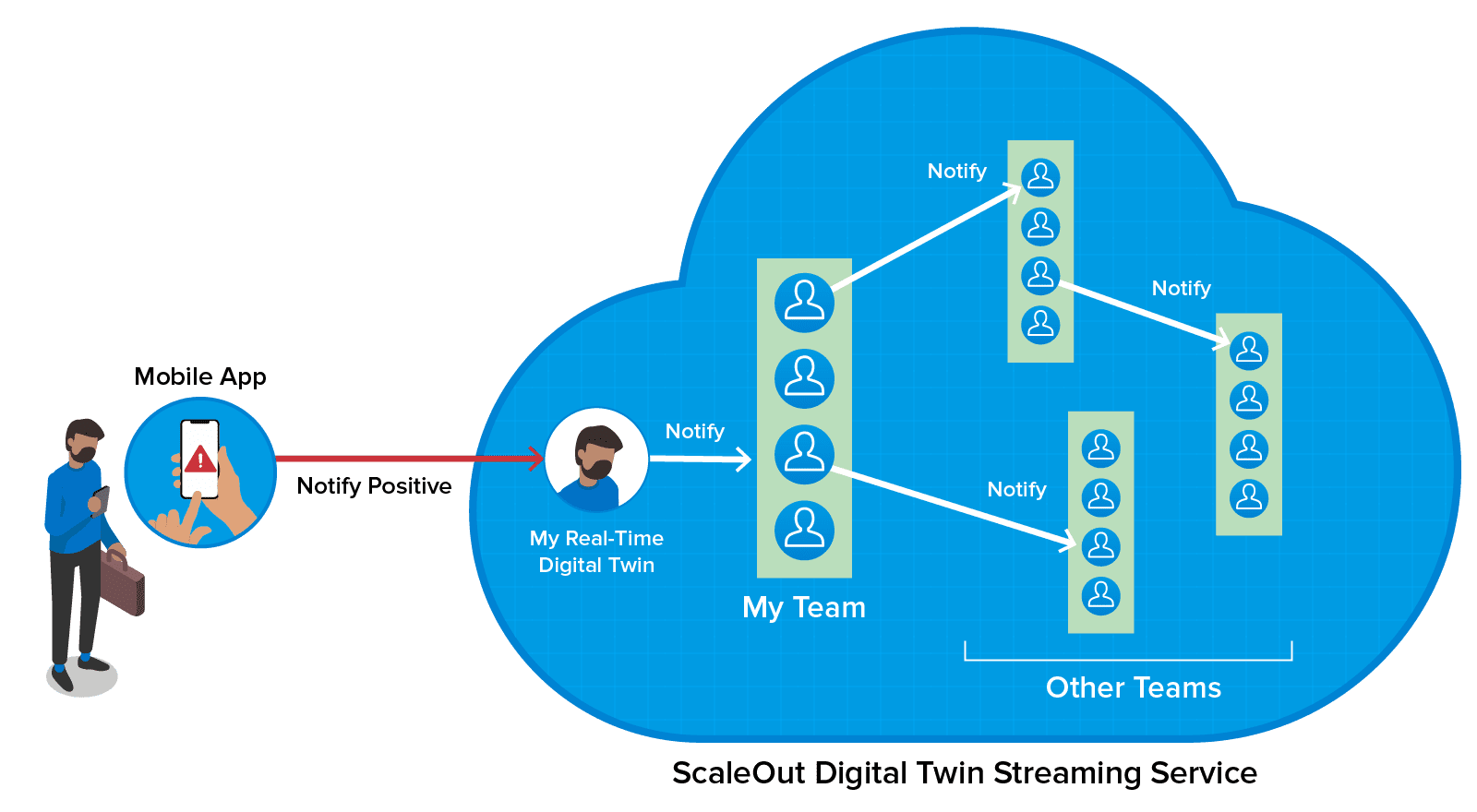

An employee can use the mobile app to notify their real-time digital twin of a positive test for COVID-19. Code running in the twin then sends messages to the real-time digital twins for all contacts in the employee’s list. These twins in turn send messages to their contacts, and so on, until the twins for all contacts have been notified. (The algorithm avoids unnecessary messages to team members and circular paths among twins.) Each notified twin then sends a push notification to its employee through the mobile app, alerting them to the possible exposure and the number of intermediate contacts between themselves and the infected person. Because real-time digital twins are hosted in memory, all of this happens within seconds, enabling affected employees to immediately self-quarantine and obtain COVID-19 tests.

Here’s an illustration of the chain of contacts originating with an employee who reports testing positive. (Note that the outbound notifications from the twins to the employees’ mobile devices are not shown here.)

What’s in the Real-Time Digital Twin?

As illustrated in the first diagram, each real-time digital twin hosts two components, state data and a message-processing method. These are defined by the contact tracing application and can be written in C#, Java, or JavaScript. (C# was used for the demo application.) The state data is unique for each employee and contains the employee’s information and contact list, along with useful statistics, such as how often the employee has been alerted about a possible exposure. The message-processing method’s code is shared by all twins. It receives messages from the mobile app or from other twins (each corresponding to a single employee) and uses application-defined code to process these messages.

Messages from the mobile app can request to add or remove a contact from the list. For new contacts, they include parameters such as the employee ID of the contact and whether the contact will be recurring. (Users also can record contacts using calendar events.) Messages from the mobile app can also request the current contact list for display, signal that the employee has tested positive or negative, and request current notifications. Messages from other real-time digital twins signal that the corresponding employees have been exposed and provide additional information, such as the number of intermediate contacts and the location of the initial employee who tested positive.
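The message types described above can be sketched as a single dispatch method over per-employee state. This Python sketch is illustrative only (the demo itself was written in C#); the class `EmployeeTwin`, the message `type` strings, and the field names are assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class EmployeeTwin:
    """Hypothetical state data for one employee; names are illustrative."""
    employee_id: str
    team_id: str
    contacts: dict = field(default_factory=dict)   # contact ID -> recurring?
    tested_positive: bool = False
    notifications: list = field(default_factory=list)

    def process_message(self, msg: dict):
        """Shared message-processing method; returns a reply or None."""
        kind = msg["type"]
        if kind == "add_contact":
            self.contacts[msg["contact_id"]] = msg.get("recurring", False)
        elif kind == "remove_contact":
            self.contacts.pop(msg["contact_id"], None)
        elif kind == "get_contacts":
            return sorted(self.contacts)
        elif kind == "test_result":
            self.tested_positive = msg["positive"]
        elif kind == "get_notifications":
            return list(self.notifications)
        elif kind == "exposure":                    # sent by another twin
            self.notifications.append(
                {"intermediate_contacts": msg["intermediate_contacts"],
                 "origin_site": msg["origin_site"]})
        else:
            raise ValueError(f"unknown message type: {kind}")
        return None


twin = EmployeeTwin("e100", "team-7")
twin.process_message({"type": "add_contact", "contact_id": "e205", "recurring": True})
print(twin.process_message({"type": "get_contacts"}))
```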

The application’s message-processing code responds to these messages and implements the spanning-tree notification algorithm that alerts other twins on the contact list. The streaming service handles the rest, namely the details of message delivery, retrieval and updating of state information, and managing the execution platform.
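The post does not show the notification algorithm itself, so the following is only one plausible reading of it: a breadth-first traversal of the contact graph that visits each employee once (which avoids circular paths) and records the number of intermediate contacts. It runs centrally over a dictionary, whereas the demo propagates messages from twin to twin; the function name and data layout are assumptions.

```python
from collections import deque


def notify_contacts(contact_lists, origin):
    """Return {employee_id: intermediate contacts} for everyone reachable.

    contact_lists maps an employee ID to the IDs on that employee's list.
    A direct contact of the origin has zero intermediate contacts.
    """
    hops = {origin: 0}
    queue = deque([origin])
    while queue:
        current = queue.popleft()
        for contact in contact_lists.get(current, ()):
            if contact not in hops:          # skip already-notified twins
                hops[contact] = hops[current] + 1
                queue.append(contact)
    return {emp: h - 1 for emp, h in hops.items() if emp != origin}


contact_lists = {
    "ana": ["ben", "cy"],
    "ben": ["ana", "dee"],
    "cy": ["ana"],
    "dee": ["ben", "eli"],
    "eli": ["dee"],
}
print(notify_contacts(contact_lists, "ana"))
```

Because each employee is recorded the first time they are reached, the count reflects the shortest chain of contacts back to the person who tested positive.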

Using the Mobile App

The following animated diagram shows how an employee can add a contact with a company colleague outside of their immediate team or with a community contact during business travel (left screenshot). If the employee tests positive, the employee can use the mobile app to report this to the company (middle screenshot). All affected employees are then notified using the mobile app, as shown in the right screenshot. Community contacts are reported to managers who communicate with outside points of contact, such as airlines, taxi companies, and restaurants.

Using Aggregate Statistics to Spot Outbreaks

The streaming service has the built-in capability to aggregate state data from all real-time digital twins. The service then displays the results in charts which are recalculated every few seconds. These charts enable managers to identify emerging issues, such as an outbreak within a specific department or site. With this information, they can take immediate steps to contain the outbreak and minimize the number of affected employees.

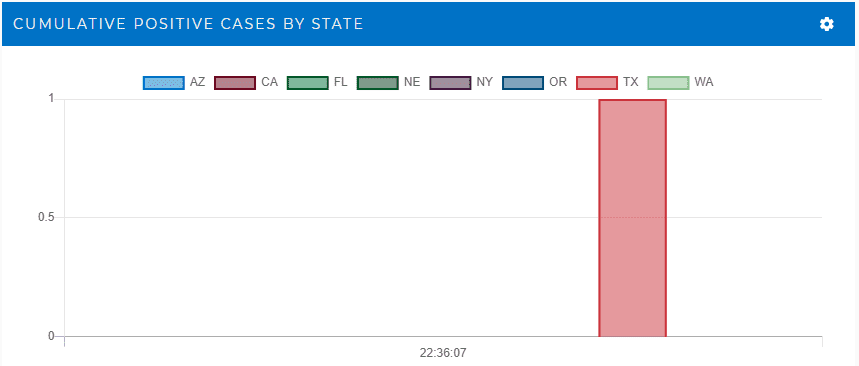

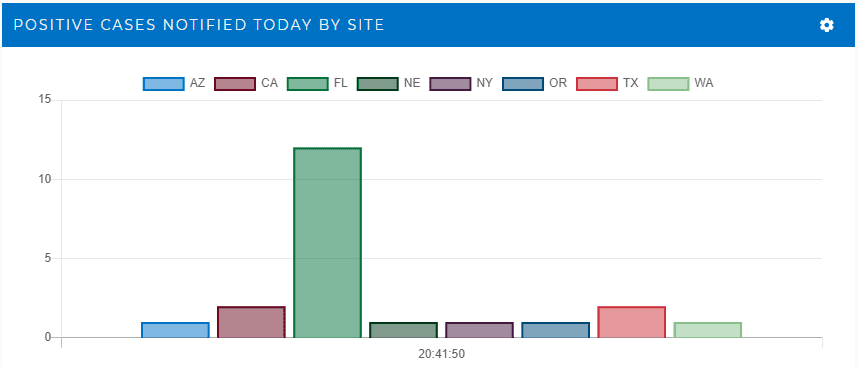

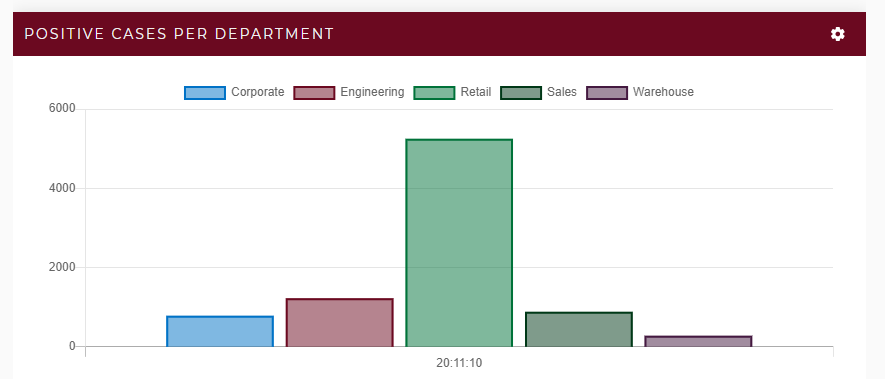

To illustrate the value of aggregate statistics in boosting situational awareness, consider a hypothetical company with 30,000 employees and offices in several states across the U.S. Suppose an employee at the Texas site suddenly tests positive. Managers could be alerted immediately by the following chart, which the streaming service generates and continuously updates and which shows all employees who have tested positive:

Within a few seconds, the real-time digital twins notify all points of contact. Updates to state data are immediately aggregated in another chart that shows the sites where employees have been notified of a positive contact and the number of employees affected at each site:

This chart shows that about 140 employees in three states were notified and possibly exposed directly or indirectly. All of these employees are then immediately quarantined to contain the possible spread of COVID-19. After an investigation by company managers, it is determined that the employee had business travel to Arizona and met with a team that subsequently had business travel to California. Instead of taking hours or days to uncover the scope of a COVID-19 exposure, contact tracing using real-time digital twins alerts managers within seconds.

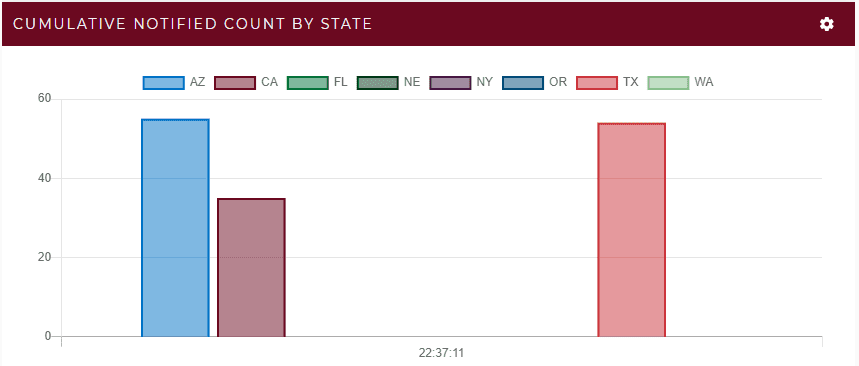

The real-time digital twins can collect additional useful statistics for visualization by the streaming service. Another chart can show the average number of intermediate contacts for all notified employees, which is an indication of how widely employees have been interacting across teams. If this becomes an issue (as it is in the above example), managers can implement policies to further isolate teams. As shown below, a chart can also show the number of notified employees by department so that managers can determine whether certain departments, such as retail outlets, need stricter policies to limit exposure to COVID-19 from outside contacts.
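The statistics described in this section (notified employees by site and by department, and the average number of intermediate contacts) amount to simple aggregations over twin state. A minimal Python sketch follows; the record fields and sample values are invented for illustration and do not reproduce the figures in the example above.

```python
from collections import Counter
from statistics import mean

# Hypothetical state exported by the twins of notified employees.
notified = [
    {"site": "Texas", "department": "Sales", "intermediate_contacts": 0},
    {"site": "Texas", "department": "Retail", "intermediate_contacts": 1},
    {"site": "Arizona", "department": "Retail", "intermediate_contacts": 1},
    {"site": "California", "department": "Sales", "intermediate_contacts": 2},
]


def summarize(records):
    """Aggregate per-twin state into the values a chart would display."""
    hops = [r["intermediate_contacts"] for r in records]
    return {
        "by_site": dict(Counter(r["site"] for r in records)),
        "by_department": dict(Counter(r["department"] for r in records)),
        "avg_intermediate_contacts": mean(hops) if hops else 0,
    }


print(summarize(notified))
```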

The Benefits of an Integrated Streaming Service

This contact tracing application demonstrates the power of real-time digital twins to enable fast application development with compelling benefits. Because the amount of application code is small, real-time digital twins can be quickly written and tested. (See a recent blog post which describes how to simplify debugging and testing using a mock environment prior to deployment in the cloud.) They also can be easily modified and updated.

The ScaleOut Digital Twin Streaming Service provides the execution platform so that the application code does not have to deal with message distribution, state saving, performance scaling, and high availability. It also includes support for real-time aggregate analytics and visualization integrated with the real-time digital twin model to maximize ease of use.

Compare this approach to the complexity of building out an application server farm, database, analytics application, and visualization to accomplish the same goals at higher cost and lower performance. Cobbling together these diverse technologies would require several skill sets, lengthy development time, and higher operational costs.

Summing Up

This demo contact tracing application was designed to show how companies can take advantage of their organizational structures to track contacts among employees and quickly notify all affected employees when an individual tests positive for COVID-19. By responding quickly to an exposure with immediate, comprehensive information about its extent within the company (and with community contacts), managers can limit the exposure’s impact. The application also shows how the real-time digital twin model enables a quick, agile implementation which can be easily adapted to the specific needs of a wide range of companies.

Please contact us at ScaleOut Software to learn more about this demo application for limiting the impact of COVID-19 and other ways real-time digital twins can help your company monitor and respond to fast-changing events.

The post Developing Real-Time Digital Twins for Cloud Deployment appeared first on ScaleOut Software.

This blog post explains how a new software construct called a real-time digital twin running in a cloud-hosted service can create a breakthrough for streaming analytics. Development is fast and straightforward using standard object-oriented techniques, and the test/debug cycle is kept short by making use of a mock environment running on the developer’s workstation.

What Are Real-Time Digital Twins?

The ScaleOut Digital Twin Streaming Service offers an exciting new approach to streaming analytics in applications that track large numbers of data sources and need to maximize responsiveness and situational awareness. Examples include tracking a fleet of trucks, analyzing large numbers of banking transactions for potential fraud, managing logistics in the delivery of supplies after a disaster or during a pandemic, recommending products to ecommerce shoppers, and much more.

The key to meeting these challenges is to process incoming telemetry in the context of unique state information maintained for each individual data source. This allows application code to introspect on the dynamic behavior of each data source, maintain synthetic metrics which aid the analysis, and create alerts when conditions require. To make this possible, the Azure-based streaming service hosts a real-time digital twin for each data source. Each twin describes properties to be maintained for the data source and an application-defined algorithm for processing incoming messages from the data source.

Digital twin models used in product lifecycle management (PLM) or in IoT device modeling (for example, Azure Digital Twins) just describe the properties of physical entities, usually to allow querying by business processes. In contrast, real-time digital twins analyze incoming telemetry from each data source and track changes in its state. Their analysis determines whether immediate action needs to be taken to resolve an issue (or identify an opportunity). Real-time digital twins typically employ domain-specific knowledge to analyze incoming messages, maintain relevant state information, and trigger alerts.

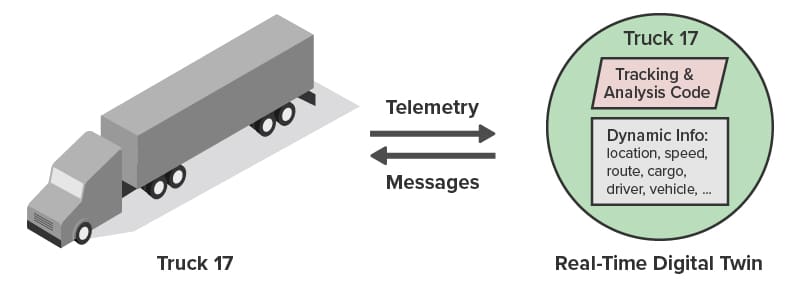

For example, a PLM digital twin of a truck engine might describe the properties of the engine, such as its temperature and oil pressure. A real-time digital twin would take the next step by hosting a predictive analytics algorithm that analyzes changes in these properties. It could use dynamic information about recent usage and service history to determine whether a failure is imminent and the driver should be alerted, as shown below:

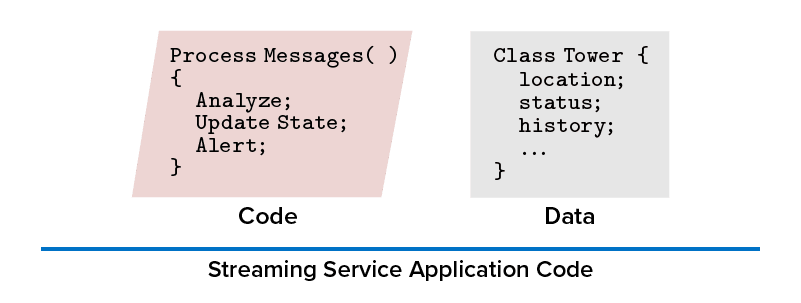

Implementing Real-Time Digital Twins

Real-time digital twins are designed to be easy to develop and modify. They make use of standard object-oriented concepts and languages (such as C#, Java, and JavaScript). A real-time digital twin consists of two components implemented by the application: a state object which defines the properties to be maintained for each data source, and a message-processing method, which defines an algorithm for analyzing incoming messages from a specific data source. The method contains domain-specific knowledge, such as a predictive analytics algorithm for truck engines. These two components are referred to as a real-time digital twin model, which serves as a template for creating instances, one for every data source.

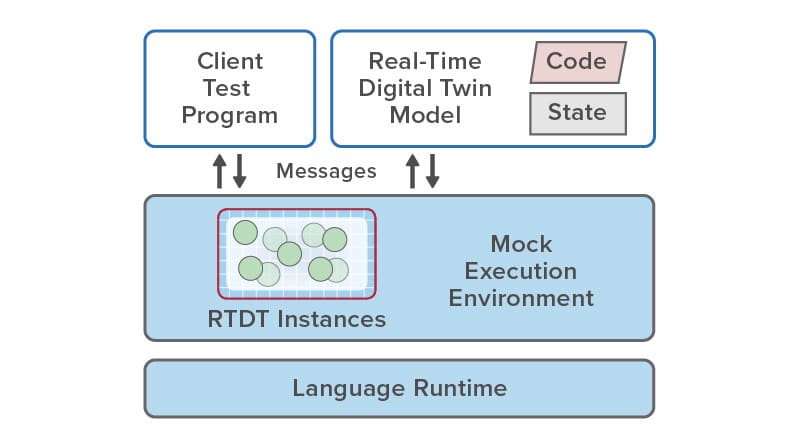

To simplify development, the ScaleOut Digital Twin Streaming Service provides base classes that can be used to create real-time digital twins and then submit them to the cloud service for execution, as illustrated in the following diagram:

Once the real-time digital twin model has been uploaded, the streaming service automatically creates an instance for every data source and forwards incoming messages from that data source to the instance for processing. Data sources can send messages to their corresponding real-time digital twin instances (and receive replies) using message hubs or a built-in REST service.
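The relationship between a model (the template) and its instances (one per data source) can be sketched as follows. This is not the service's base-class API, which is documented in the User Guide; `EngineTwin`, `TwinHost`, and `deliver` are hypothetical names used only to show instance creation and message routing.

```python
class EngineTwin:
    """Hypothetical model: state plus a message-processing method."""

    def __init__(self, source_id):
        self.source_id = source_id
        self.message_count = 0
        self.peak_oil_temp_c = float("-inf")

    def process_message(self, msg):
        self.message_count += 1
        self.peak_oil_temp_c = max(self.peak_oil_temp_c, msg["oil_temp_c"])
        return {"source_id": self.source_id,
                "peak_oil_temp_c": self.peak_oil_temp_c}


class TwinHost:
    """Stand-in for the hosting service: one instance per data source."""

    def __init__(self, model):
        self._model = model
        self.instances = {}

    def deliver(self, source_id, msg):
        twin = self.instances.get(source_id)
        if twin is None:                     # first message from this source
            twin = self.instances[source_id] = self._model(source_id)
        return twin.process_message(msg)


host = TwinHost(EngineTwin)
host.deliver("truck-1", {"oil_temp_c": 95.0})
host.deliver("truck-2", {"oil_temp_c": 101.0})
print(host.deliver("truck-1", {"oil_temp_c": 99.5}))
```

Each data source sees only its own state, so the message-processing code never has to sort out which truck a message came from.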

Debugging with a Mock Environment

Although these models are simple and easy to build, the number of steps in the deployment process can make it cumbersome to catch coding errors in the early stages of model development. To address this challenge and enable rapid model testing within a controlled environment (for example, within Visual Studio running on a development workstation), the streaming service provides an alternative execution platform, called the mock environment, which can be targeted during development. This environment runs standalone on a workstation and allows an application to create a limited number of real-time digital twin instances. It also provides an API for sending messages to instances and for receiving replies using the same message-exchange protocol that would be used with the cloud-based REST service.

The mock development environment is shown below:

Once a model has been created, it can be tested using a client test program that sends messages that simulate the behavior of one or more data sources. This exercises the model’s code and surfaces issues and exceptions, which can be readily examined and resolved in a controlled environment. When the model behaves as expected, it can then be deployed to the streaming service for execution with live data sources. Note that the model’s code can log messages, which are available for reading in the mock environment and are displayed in the streaming service’s web UI after the model is deployed.
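A client test program of the kind described here might look like the sketch below, which feeds simulated messages to a model and inspects the replies and the model's log. It does not use the actual mock environment API; `EngineModel`, `simulated_truck`, and `run_local_test` are hypothetical names, and the "three rising readings" rule is an invented example of model logic.

```python
class EngineModel:
    """Hypothetical model under test: alerts on three rising readings."""

    def __init__(self):
        self.readings = []
        self.log = []

    def process_message(self, msg):
        self.readings.append(msg["oil_temp_c"])
        last3 = self.readings[-3:]
        if len(last3) == 3 and last3[0] < last3[1] < last3[2]:
            self.log.append(f"rising oil temperature: {last3}")
            return {"alert": "oil temperature rising"}
        return None


def simulated_truck(start_c, step_c, count):
    """Generate messages that mimic one data source."""
    return [{"oil_temp_c": start_c + i * step_c} for i in range(count)]


def run_local_test(model, messages):
    """Send each simulated message to the model and collect the replies."""
    return [model.process_message(m) for m in messages]


model = EngineModel()
replies = run_local_test(model, simulated_truck(90.0, 2.0, 4))
print(replies)
print(model.log)
```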

Summing Up

Real-time digital twins offer an important new tool for analyzing telemetry streams from large numbers of data sources, providing immediate feedback for each data source, and maintaining real-time aggregate statistics that boost situational awareness. Unlike PLM-style digital twins, which host the properties of a physical data source for downstream analysis, real-time digital twins provide a means to analyze and respond to a data source’s telemetry within milliseconds, while exposing aggregate trends within seconds. Their functionality fills an important gap in streaming analytics, namely tracking thousands or even a million data sources with fast, individualized feedback.

Amazingly, the power of real-time digital twins can be harnessed with a small amount of application code that contains domain-specific knowledge. The streaming service takes care of all the complexities of message delivery, accessing state data, scaling for thousands of data sources, and high availability. By using a mock development environment, application developers can quickly create, debug, and test real-time digital twin models prior to their deployment in production. This powerful new approach to streaming analytics solves an important unmet need and offers an impressive combination of power and ease of use.

For detailed information on building real-time digital twin models and using a mock environment, please consult the ScaleOut Digital Twin Streaming Service User Guide.

The post Why Use “Real-Time Digital Twins” for Streaming Analytics? appeared first on ScaleOut Software.

What Problems Does Streaming Analytics Solve?

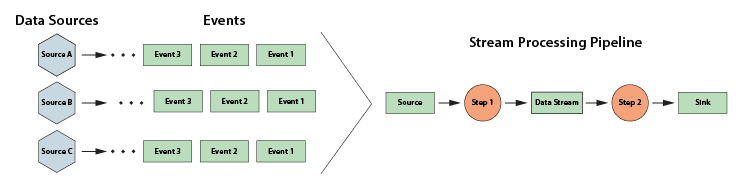

To understand why we need real-time digital twins for streaming analytics, we first need to look at what problems are tackled by popular streaming platforms. Most if not all platforms focus on mining the data within an incoming message stream for patterns of interest. For example, consider a web-based ad-serving platform that selects ads for users and logs messages containing a timestamp, user ID, and ad ID every time an ad is displayed. A streaming analytics platform might count all the ads for each unique ad ID in the latest five-minute window and repeat this every minute to give a running indication of which ads are trending.
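The windowed count in the ad-serving example can be sketched in a few lines of Python. This is a plain illustration of a five-minute window re-evaluated every minute, not the query language of any particular streaming platform; the event tuple layout and the function name `trending_ads` are assumptions.

```python
from collections import Counter

# Hypothetical log entries: (timestamp in seconds, user ID, ad ID).
events = [
    (10, "u1", "adA"),
    (200, "u2", "adB"),
    (290, "u3", "adA"),
    (340, "u1", "adB"),
    (350, "u4", "adB"),
]


def trending_ads(log, now, window_s=300):
    """Count displays per ad ID in the window (now - window_s, now]."""
    return Counter(ad for ts, _user, ad in log if now - window_s < ts <= now)


# Re-evaluate the five-minute window once per minute.
for now in (300, 360):
    print(now, trending_ads(events, now).most_common())
```

Note that the computation uses only fields carried in the messages; nothing is remembered about an individual user between windows.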

Based on technology from the Trill research project, Microsoft’s Azure Stream Analytics offers an elegant and powerful way to implement applications like this. It views the incoming stream of messages as a columnar database with the column representing a time-ordered history of messages. It then lets users create SQL-like queries with extensions for time-windowing to perform data selection and aggregation within a time window, and it does this at high speed to keep up with incoming data streams.

Other streaming analytic platforms, such as open-source Apache Storm, Flink, and Beam and commercial implementations such as Hazelcast Jet, let applications pass an incoming data stream through a pipeline (or directed graph) of processing steps to extract information of interest, aggregate it over time windows, and create alerts when specific conditions are met. For example, these execution pipelines could process a stock market feed to compute the average stock price for all equities over the previous hour and trigger an alert if an equity moves up or down by a certain percentage. Another application tracking telemetry from gas meters could likewise trigger an alert if any meter’s flow rate deviates from its expected value, which might indicate a leak.
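The gas meter example can be sketched as a small pipeline of processing steps built from Python generators. It does not use Storm, Flink, Beam, or Jet; the message format, function names, and the 20% tolerance are assumptions made for illustration.

```python
def parse(raw_messages):
    """Step 1: turn 'meter_id,flow' strings into (meter_id, flow) pairs."""
    for raw in raw_messages:
        meter_id, flow = raw.split(",")
        yield meter_id, float(flow)


def flag_deviations(readings, expected_flow, tolerance=0.2):
    """Step 2: emit an alert when flow strays too far from its expected value."""
    for meter_id, flow in readings:
        expected = expected_flow[meter_id]
        if abs(flow - expected) > tolerance * expected:
            yield f"meter {meter_id}: flow {flow} deviates from expected {expected}"


raw = ["m1,10.0", "m2,14.0", "m1,13.0"]
expected_flow = {"m1": 10.0, "m2": 12.0}

for alert in flag_deviations(parse(raw), expected_flow):
    print(alert)
```

Each step sees only the data flowing through it plus a fixed expected value; the pipeline keeps no evolving record of any individual meter.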

What’s key about these stream-processing applications is that they focus on examining and aggregating properties of data communicated in the stream. Other than by observing data in the stream, they do not track the dynamic state of the data sources themselves, and they don’t make inferences about the behavior of the data sources, either individually or in aggregate. So, the streaming analytics platform for the ad server doesn’t know why each user was served certain ads, and the market-tracking application does not know why each equity either maintained its stock price or deviated materially from it. Without knowing the why, it’s much harder to take the most effective action when an interesting situation develops. That’s where real-time digital twins can help.

The Need for Real-Time Digital Twins

Real-time digital twins shift the application’s focus from the incoming data stream to the dynamically evolving state of the data sources. For each individual data source, they let the application incorporate dynamic information about that data source in the analysis of incoming messages, and the application can also update this state over time. The net effect is that the application can develop a significantly deeper understanding about the data source so that it can take effective action when needed. This cannot be achieved by just looking at data within the incoming message stream.

For example, the ad-serving application can use a real-time digital twin for each user to track shopping history and preferences, measure the effectiveness of ads, and guide ad selection. The stock market application can use a real-time digital twin for each company to track financial information, executive changes, and news releases that explain why its stock price starts moving and filter out trades that don’t fit desired criteria.

Also, because real-time digital twins maintain dynamic information about each data source, applications can aggregate this highly curated data instead of just aggregating data in the data stream. This gives users deeper insights into the overall state of all data sources and boosts “situational awareness” that is hard to maintain by just looking at the message stream.

An Example

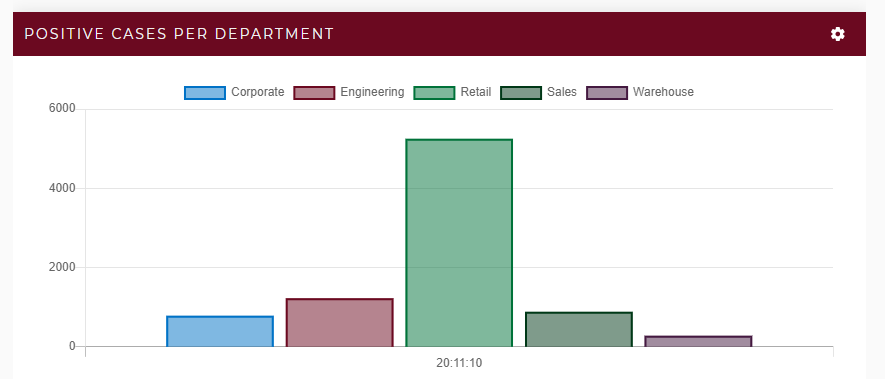

Consider a trucking fleet that manages thousands of long-haul trucks on routes throughout the U.S. Each truck periodically sends telemetry messages about its location, speed, engine parameters, and cargo status (for example, trailer temperature) to a real-time monitoring application at a central location. With traditional streaming analytics, personnel can detect changes in these parameters, but they can’t assess their significance to take effective, individualized action for each truck. Is a truck stopped because it’s at a rest stop or because it has stalled? Is an out-of-spec engine parameter expected because the engine is scheduled for service or does it indicate that a new issue is emerging? Has the driver been on the road too long? Does the driver appear to be lost or entering a potentially hazardous area?

The use of real-time digital twins provides the context needed for the application to answer these questions as it analyzes incoming messages from each truck. For example, it can keep track of the truck’s route, schedule, cargo, mechanical and service history, and information about the driver. Using this information, it can alert drivers to impending problems, such as road blockages, delays or emerging mechanical issues. It can assist lost drivers, alert them to erratic driving or the need for rest stops, and help when changing conditions require route updates.
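As a small illustration of how stored context changes the interpretation of a message, the sketch below classifies a stopped truck using hypothetical twin state. The function `assess_stop`, the state keys, and the message fields are all assumptions.

```python
def assess_stop(msg, twin_state):
    """Interpret a telemetry message using per-truck context."""
    if msg["speed_kph"] > 0:
        return "moving"
    if msg["location"] in twin_state["planned_rest_stops"]:
        return "planned rest stop"
    if msg.get("engine_fault") or twin_state["open_mechanical_issues"]:
        return "possible breakdown: alert dispatcher"
    return "unplanned stop: check with driver"


# Hypothetical context held by one truck's twin.
twin_state = {
    "planned_rest_stops": {"Flagstaff", "Amarillo"},
    "open_mechanical_issues": [],
}

print(assess_stop({"speed_kph": 0, "location": "Flagstaff"}, twin_state))
print(assess_stop({"speed_kph": 0, "location": "Gallup", "engine_fault": True}, twin_state))
```

The same "speed is zero" reading yields different conclusions depending on the route and service information the twin holds.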

The following diagram shows a truck communicating with its associated real-time digital twin. (The parallelogram represents application code.) Because the twin holds unique contextual data for each truck, analysis code for incoming messages can provide highly focused feedback that goes well beyond what is possible with traditional streaming analytics:

As illustrated below, the ScaleOut Digital Twin Streaming Service runs as a cloud-hosted service in the Microsoft Azure cloud to provide streaming analytics using real-time digital twins. It can exchange messages with thousands of trucks across the U.S., maintain a real-time digital twin for each truck, and direct messages from that truck to its corresponding twin. It simplifies application code, which only needs to process messages from a given truck and has immediate access to dynamic, contextual information that enhances the analysis. The result is better feedback to drivers and enhanced overall situational awareness for the fleet.

Lower Complexity and Higher Performance

While the functionality implemented by real-time digital twins can be replicated with ad hoc solutions that combine application servers, databases, offline analytics, and visualization, these solutions would require vastly more code, a diverse combination of skill sets, and longer development cycles. They also would encounter performance bottlenecks that require careful analysis to measure and resolve. The real-time digital twin model running on ScaleOut Software’s integrated execution platform sidesteps these obstacles.

Scaling performance to maintain high throughput creates an interesting challenge for traditional streaming analytics platforms because the work performed by their task pipelines does not naturally map to a set of processing cores within multiple servers. Each pipeline stage must be accelerated with parallel execution, and some stages require longer processing time than others, creating bottlenecks in the pipeline.

In contrast, real-time digital twins naturally create a uniformly large set of tasks that can be evenly distributed across servers. To minimize network overhead, this mapping follows the distribution of in-memory objects within ScaleOut’s in-memory data grid, which holds the state information for each twin. This enables the processing of real-time digital twins to scale transparently without adding complexity to either applications or the platform.
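As a rough illustration of why per-twin work spreads evenly, the sketch below assigns each twin to a server by hashing its key, so a message can be processed on the server that already holds that twin's state. The modulo-hash scheme and the function names are assumptions for illustration. The source does not describe how ScaleOut's in-memory data grid actually places objects.

```python
import hashlib
from collections import Counter


def owner_server(twin_id: str, num_servers: int) -> int:
    """Map a twin's key to a server index with a stable hash (illustrative scheme)."""
    digest = hashlib.sha256(twin_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_servers


def placement(twin_ids, num_servers: int) -> Counter:
    """Count how many twins land on each server."""
    return Counter(owner_server(t, num_servers) for t in twin_ids)


# Ten thousand hypothetical truck twins spread over four servers.
counts = placement((f"truck-{i}" for i in range(10_000)), num_servers=4)
```

Because every twin is a small, independent unit of state and code, a roughly equal count of twins per server translates into a roughly equal share of message-processing work.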

Summing Up

Why use real-time digital twins? They solve an important challenge for streaming analytics that is not addressed by other, “pipeline-oriented” platforms, namely, to simultaneously track the state of thousands of data sources. They use contextual information unique to each data source to help interpret incoming messages, analyze their importance, and generate feedback and alerts tailored to that data source.

Traditional streaming analytics finds patterns and trends in the data stream. Real-time digital twins identify and react to important state changes in the data sources themselves. As a result, applications can achieve better situational awareness than previously possible. This new way of implementing streaming analytics can be used in a wide range of applications. We invite you to take a closer look.

The post Why Use “Real-Time Digital Twins” for Streaming Analytics? appeared first on ScaleOut Software.

The post The Power of Integrated Analytics Within an IMDG appeared first on ScaleOut Software.

In-Memory Data Grids for Fast-Changing Data

For more than fifteen years, ScaleOut StateServer® has demonstrated technology leadership as an in-memory data grid (IMDG) and distributed cache. Designed to help scalable applications deliver high performance, it stores live, fast-changing data in memory (DRAM) for fast updates and retrieval. By transparently distributing stored objects across a cluster of servers (physical or virtual), it automatically scales performance for fast-growing workloads and maintains consistently low access latency. Typical uses include storing session-state and ecommerce shopping carts, product descriptions, airline reservations, financial portfolios, news stories, online learning data, and many others.

From its inception, the design philosophy behind ScaleOut StateServer has been to simultaneously maximize both performance and ease of use. Because IMDGs have complex internal mechanisms, they need to automate them as much as possible so that the developer can just focus on application concerns and not on the inner workings of the IMDG. For this reason, the product incorporates features such as automatic discovery of servers, transparent load-balancing when servers are added to the cluster or removed, automatic data replication for high availability with transparent placement of replicas, quorum-based updating of replicas to ensure consistency, integrated client libraries, and coherent client-side caching. The net effect is that applications maintain a straightforward view of the IMDG as a unified key/value store for serialized application objects.

The Challenges with Parallel Queries

Although IMDGs are optimized for key-based access, applications often need to retrieve groups of objects with matching properties. For example, if an application is storing shopping carts, it might be useful to find all shopping carts with a total value that exceeds a specified threshold so that these shoppers can be given special attention. To this end, ScaleOut StateServer incorporates a property-based, distributed query API that returns a collection of matching objects. To simplify development for .NET applications, it uses Microsoft’s Language Integrated Query (LINQ) to specify queries. (Java applications use a similar mechanism.)

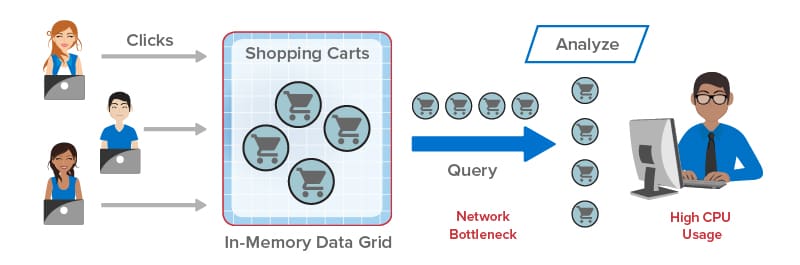

Queries in an IMDG can create interesting performance challenges. Because IMDGs have highly scalable storage capacity, they can easily return large numbers of matching objects to the client application, and this leads to network bottlenecks transferring large amounts of data from the IMDG back to the client. Once all objects are delivered, the client is then faced with the task of analyzing potentially huge numbers of objects. This can saturate the client’s CPU and delay responses, as illustrated in the following diagram:

ScaleOut StateServer Pro: Integrated Data Analytics

To address these challenges, ScaleOut Software has introduced ScaleOut StateServer Pro, an advanced version of ScaleOut StateServer that integrates data analytics within the IMDG. Instead of querying objects from the IMDG and analyzing them in the client, applications can now simply run this analysis within the IMDG itself using APIs available in ScaleOut StateServer Pro. Because all the work is performed within the IMDG, this has the two-fold advantage of offloading both the network and the client’s CPU. It also transparently makes use of the IMDG’s scalable computing resources to accelerate the analysis.

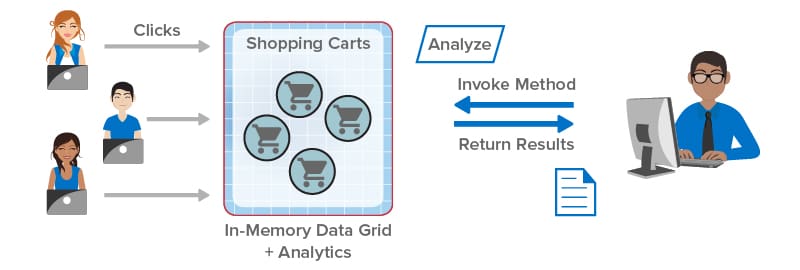

Take a look at how integrated data analytics can help client applications. In the following illustration, the client library sends the application’s analysis method (“Analyze”) to the IMDG for execution in parallel on all shopping carts selected by a query. The results are combined within the IMDG and returned to the application:

In keeping with the design philosophy of maximizing both performance and ease of use, ScaleOut StateServer Pro lets developers easily construct data analytics by specifying an object-oriented method that analyzes each matching object selected by a query and a second method for combining the results. In .NET applications, this data-parallel execution structure can be described using a distributed version of Microsoft’s popular Parallel.ForEach API, which ScaleOut StateServer Pro integrates with LINQ queries. Application code is automatically shipped by the client library to the IMDG for execution and runs fully in parallel across all servers for maximum performance.

Consider the above example of querying shopping carts exceeding a threshold value. Suppose the application’s goal is to periodically analyze high value shopping carts to make upsell offers based on the contents of each cart. Instead of querying the IMDG and returning thousands of shopping carts to the client, the application can implement a method which analyzes these carts within the IMDG to determine which carts should receive upsell offers (and possibly determine which upsell offers to make). This analysis runs in parallel within the IMDG and then returns its results to the client for further action. This dramatically reduces the workload on the network and client, and it ensures consistently high performance.
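The shape of this computation (a query, a per-object analysis method, and a method that combines results) can be sketched in plain Python. This is a simulation under stated assumptions, not ScaleOut StateServer Pro's API: the `PARTITIONS` data, the function names, and the toy upsell rule are all hypothetical, and threads stand in for grid servers.

```python
from concurrent.futures import ThreadPoolExecutor

# Each inner list stands in for the carts held by one grid server (hypothetical data).
PARTITIONS = [
    [{"id": "c1", "total": 250.0, "items": ["laptop"]},
     {"id": "c2", "total": 40.0, "items": ["book"]}],
    [{"id": "c3", "total": 900.0, "items": ["camera", "lens"]}],
    [{"id": "c4", "total": 120.0, "items": ["shoes"]},
     {"id": "c5", "total": 310.0, "items": ["phone"]}],
]


def analyze(cart: dict) -> dict:
    """Per-object method: decide whether a cart gets an upsell offer (toy rule)."""
    if len(cart["items"]) == 1:
        return {cart["id"]: "accessory bundle"}
    return {}


def combine(a: dict, b: dict) -> dict:
    """Merge method: fold two partial results into one."""
    return {**a, **b}


def run_on_partition(carts: list, threshold: float) -> dict:
    """Query and analyze locally, so only the small result leaves the server."""
    result = {}
    for cart in carts:
        if cart["total"] > threshold:  # the property-based query
            result = combine(result, analyze(cart))
    return result


def analyze_in_grid(partitions: list, threshold: float) -> dict:
    """Run the analysis on every partition in parallel and merge the partial results."""
    with ThreadPoolExecutor() as pool:  # threads stand in for grid servers
        partials = list(pool.map(lambda p: run_on_partition(p, threshold), partitions))
    merged = {}
    for partial in partials:
        merged = combine(merged, partial)
    return merged


offers = analyze_in_grid(PARTITIONS, threshold=200.0)
```

Only the merged dictionary of offers crosses back to the caller. The carts themselves never leave their partition, which is the source of the network and client CPU savings described above.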

Summing Up: Extracting Maximum Value from an IMDG

Since their inception, IMDGs have been largely underutilized because they are viewed as passive key/value stores. Because they are actually designed as a data-parallel execution platform, they can do much more than just store and serve memory-hosted, live data. They also can perform analysis quickly and efficiently, right where the data lives.

Taking full advantage of this powerful capability requires just a shift in thinking about where application work should be performed. In many cases, it’s a much better choice to analyze data within the IMDG instead of transferring it to the client for analysis. ScaleOut StateServer Pro makes it easy to do just that, and it delivers fast, scalable performance. Now developers can finally extract full value from their IMDGs.

The post Voluntary Contact Self-Tracing for Companies appeared first on ScaleOut Software.

How Voluntary Self-Tracing Helps

In a previous blog post, we explored how voluntary contact self-tracing can assist other contact tracing techniques in alerting people who may have been exposed to the COVID-19 virus. This technique enables participants to log interactions with others so that they can discover if they are in a chain of contacts originating with someone, often a stranger, who subsequently tests positive for the virus. These contacts could include friends at a barbeque, grocery checkers, hairdressers, restaurant waitstaff, taxi drivers, or other interactions.

In contrast to highly publicized, proximity-based contact tracing that uses mobile devices, voluntary self-tracing avoids security and privacy issues that have threatened widespread adoption. It also adds human judgment to the process so that the chain of contacts captures only potentially risky interactions. This is accomplished in advance of a positive test, enabling immediate notifications when the need arises.

Voluntary self-tracing offers huge value in connecting strangers who otherwise would not be notified about the need for testing without arduous manual contact tracing. However, it requires that everyone participate in a common tracing system and consistently make the effort to log interactions. While this might restrict its appeal for public use, it could be readily adopted by companies, which have well-known, slowly changing populations and established working relationships and protocols.

Helping Companies Get Back to Work

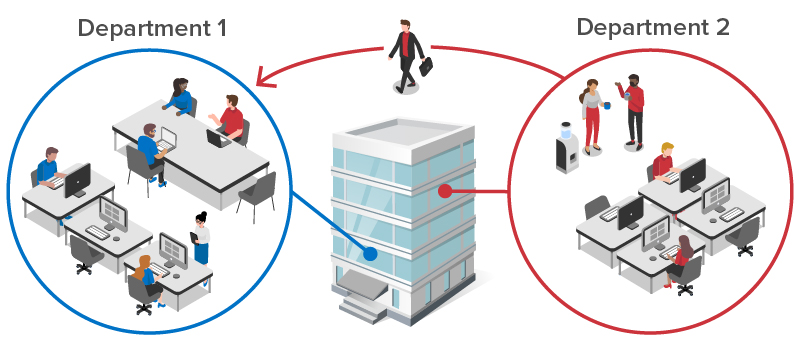

Consider a company that has multiple departments distributed across several locations. As employees come back to work, they typically interact closely with colleagues in the same department. If anyone in the department tests positive for COVID-19, it’s likely that all of these colleagues have been exposed and need to get tested. In addition, employees occasionally interact with colleagues in other departments, both at the same site and at remote sites. These interactions also need to be tracked to contain exposure within the organization, as illustrated in the following diagram:

Voluntary contact self-tracing can handle the most common scenarios by using the company’s employee database to automatically connect colleagues who work in the same department and interact daily. Employees need only manually log contacts they make with employees in other departments. These interactions are relatively infrequent and tracked for a limited period of time (typically two weeks). This approach streamlines the work required to track contacts, while enabling the company to immediately identify all employees who need to be notified, tested, and possibly isolated after one person tests positive.
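A minimal sketch of this bookkeeping follows, assuming a simple in-memory employee table and a list of manually logged contacts. The `DEPARTMENT` table, the tuple layout of the log, and the function names are hypothetical. The 14-day window reflects the "typically two weeks" mentioned above.

```python
from datetime import date, timedelta

RETENTION = timedelta(days=14)  # "typically two weeks" per the text

# Hypothetical employee database: employee -> department.
DEPARTMENT = {"ana": "eng", "bo": "eng", "cy": "sales", "di": "sales", "ed": "ops"}


def department_contacts(employee: str) -> set:
    """Colleagues in the same department are connected automatically."""
    dept = DEPARTMENT[employee]
    return {e for e, d in DEPARTMENT.items() if d == dept and e != employee}


def current_contacts(employee: str, manual_log: list, today: date) -> set:
    """Union of automatic contacts and manually logged ones still inside the window.

    manual_log holds (employee_a, employee_b, date_of_contact) tuples.
    """
    contacts = department_contacts(employee)
    for a, b, when in manual_log:
        if today - when <= RETENTION and employee in (a, b):
            contacts.add(b if a == employee else a)
    return contacts
```

Employees only ever add entries to the manual log. Same-department links come from the roster, and old cross-department entries drop out on their own once the window passes.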

In addition, employees can manually track information about contacts they make while on business travel, such as during airline flights, taxi rides, and meals at restaurants. That way, when an employee tests positive, these external contacts can be immediately alerted of possible exposure. This enables companies to assist their communities in contact tracing and help contain the spread of COVID-19.

Enabling Technology: In-Memory Computing

Many large companies have tens of thousands of employees and need to perform fast, efficient contact tracing. They require both immediate notifications and up-to-the-moment statistics that identify emerging trends, such as hot spots at one of their offices. To make this possible, a technology called in-memory computing can be used to track contacts and immediately alert all affected employees (and community touchpoints, such as restaurants) when anyone tests positive and alerts the system. Using a mobile app connected to a cloud service, it creates and maintains a dynamic web of contacts that evolves as interactions occur and time passes.

For example, when an employee tests positive and alerts the system, all colleagues in the same department are quickly notified, as are employees in other departments with whom interactions have occurred. The contact tracing system follows the chain of contacts across departments at all locations within the company. It also notifies community contacts, such as airlines and taxi companies, of possible exposures so that they can take the appropriate action.

Within the cloud service, the in-memory computing system maintains a software-based real-time digital twin for each employee. This software twin records and maintains all contacts for the employee, as well as all community contacts. It also removes non-recurring contacts after sufficient time passes and exposure is no longer likely. When an employee tests positive, the mobile app notifies the corresponding real-time digital twin in the cloud. This sets off the chain of communication that alerts all connected twins to the exposure and notifies their real-world counterparts.
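The chain of communication can be pictured as a breadth-first walk over linked twins. The sketch below is an assumption about how such propagation might work, not a description of ScaleOut's implementation. `EmployeeTwin`, `link`, and `report_positive` are hypothetical names, and the walk follows the chain to any depth, which a real system might limit.

```python
from collections import deque


class EmployeeTwin:
    """Hypothetical per-employee twin holding that employee's contacts."""

    def __init__(self, name: str):
        self.name = name
        self.contacts = set()  # names of directly connected twins
        self.notified = False


def link(twins: dict, a: str, b: str) -> None:
    """Record a two-way contact between employees a and b."""
    twins[a].contacts.add(b)
    twins[b].contacts.add(a)


def report_positive(twins: dict, source: str) -> list:
    """Breadth-first walk of the contact chain; returns names in notification order."""
    order = []
    seen = {source}
    queue = deque([source])
    while queue:
        current = queue.popleft()
        for name in sorted(twins[current].contacts):  # sorted for a stable order
            if name not in seen:
                seen.add(name)
                twins[name].notified = True
                order.append(name)
                queue.append(name)
    return order
```

Direct contacts are notified first, then their contacts, and so on. Twins with no path to the source are never touched.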

Maximizing Situational Awareness