The post Deploying ScaleOut’s Distributed Cache In Google Cloud appeared first on ScaleOut Software.

]]>

by Olivier Tritschler, Senior Software Engineer

Because of their ability to provide highly elastic computing resources, public clouds have become a highly attractive platform for hosting distributed caches, such as ScaleOut StateServer®. To complement its current offerings on Amazon AWS and Microsoft Azure, ScaleOut Software has just announced support for the Google Cloud Platform. Let’s take a look at some of the benefits of hosting distributed caches in the cloud and understand how we have worked to make both deployment and management as simple as possible.

Distributed Caching in the Cloud

Distributed caches, like ScaleOut StateServer, enhance a wide range of applications by offering shared, in-memory storage for fast-changing state information, such as shopping carts, financial transactions, geolocation data, etc. This data needs to be quickly updated and shared across all application servers, ensuring consistent tracking of user state regardless of the server handling a request. Distributed caches also offer a powerful computing platform for analyzing live data and generating immediate feedback or operational intelligence for applications.

Built using a cluster of virtual or physical servers, distributed caches automatically scale access throughput and analytics to handle large workloads. With their tightly integrated client-side caching, these caches typically provide faster access to fast-changing data than backing stores, such as blob stores and database servers. In addition, they incorporate redundant data storage and recovery techniques to provide built-in high availability and ensure uninterrupted access if a server fails.

To meet the needs of elastic applications, distributed caches must themselves be elastic. They are designed to transparently scale upwards or downwards by adding or removing servers as the workload varies. This is where the power of the cloud becomes clear.

Because cloud infrastructures provide inherent elasticity, they can benefit both applications and distributed caches. As more computing resources are needed to handle a growing workload, clouds can deploy additional virtual servers (also called cloud “instances”). Once a period of high demand subsides, resources can be dialed back to minimize cost without compromising quality of service. The flexibility of on-demand servers also avoids costly capital investments and reduces management costs.

Deploying ScaleOut’s Distributed Cache in the Google Cloud

A key challenge in using a distributed cache as part of a cloud-hosted application is to make it easy to deploy, manage, and access by the application. Distributed caches are typically deployed in the cloud as a cluster of virtual servers that scales as the workload demands. To keep it simple, a cloud-hosted application should just view a distributed cache as an abstract entity and not have to keep track of individual caching servers or which data they hold. The application does not want to be concerned with connecting N application instances to M caching servers, especially when N and M (as well as cloud IP addresses) vary over time. In particular, an application should not have to discover and track the IP addresses for the caching servers.

Even though a distributed cache comprises several servers, the simplest way to deploy and manage it in the cloud is to identify the cache as a single, coherent service. ScaleOut StateServer takes this approach by identifying a cloud-hosted distributed cache with a single “store” name combined with access credentials. This name becomes the basis for both managing the deployed servers and connecting applications to the cache. It lets applications connect to the caching cluster without needing to be aware of the IP addresses for the cluster’s virtual servers.

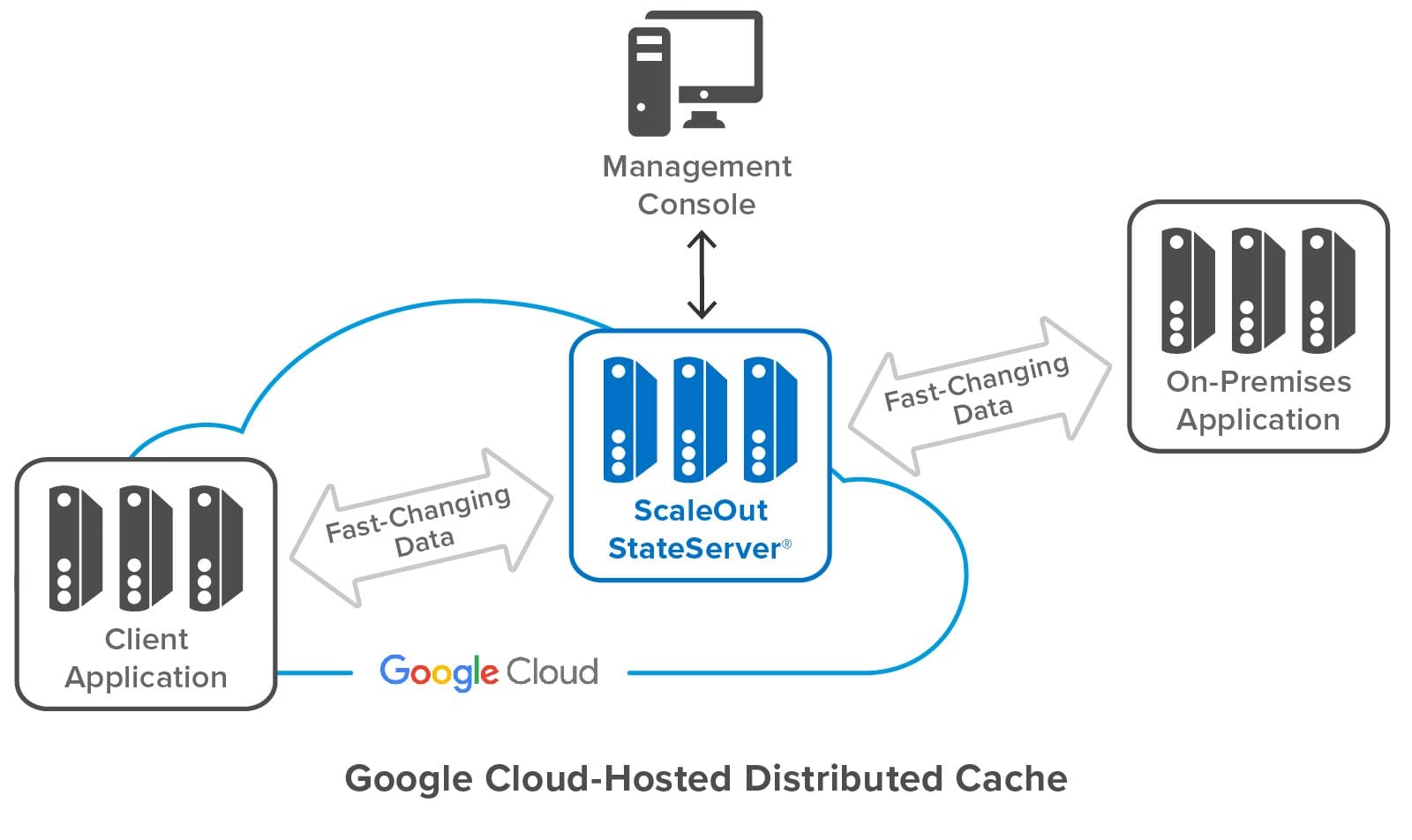

The following diagram shows a ScaleOut StateServer distributed cache deployed in Google Cloud. It shows both cloud-hosted and on-premises applications connected to the cache, as well as ScaleOut’s management console, which lets users deploy and manage the cache. Note that while the distributed cache and applications all contain multiple servers, applications and users can access the cache just by using its store name.

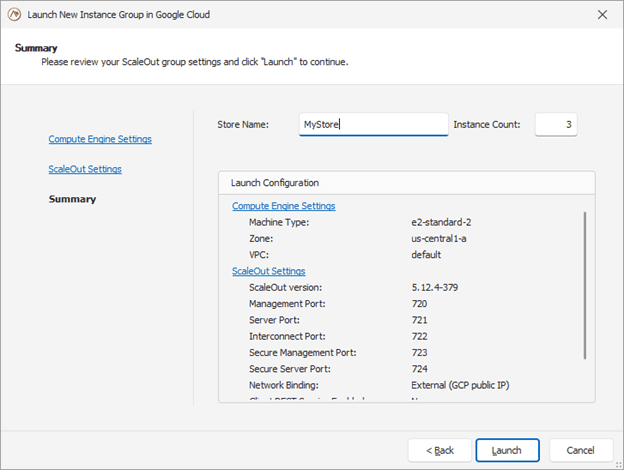

Building on the features developed for the integration of Amazon AWS and Microsoft Azure, the ScaleOut Management Console now lets users deploy and manage a cache in Google Cloud by just specifying a store name and initial number of servers, as well as other optional parameters. The console does the rest, interacting with Google Cloud to start up the distributed cache and configure its servers. To enable the servers to form a cluster, the console records metadata for all servers and identifies them as having the same store name.

Here’s a screenshot of the console wizard used for deploying ScaleOut StateServer in Google Cloud:

The management console provides centralized, on-premises management for initial deployment, status tracking, and adding or removing servers. It uses Google’s managed instance groups to host servers, and automated scripts use server metadata to guarantee that new servers automatically connect with an existing store. The managed instance groups used by ScaleOut also support defining auto-scaling options based on CPU/Memory usage metrics.

Instead of using the management console, users can also deploy ScaleOut StateServer to Google Cloud directly with Google’s Deployment Manager using optional templates and configuration files.

Simplifying Connectivity for Applications

On-premises applications typically connect each client instance to a distributed cache using a fixed list of IP addresses for the caching servers. This process works well on premises because the cache’s IP addresses typically are well known and static. However, it is impractical in the cloud since IP addresses change with each deployment or reboot of a caching server.

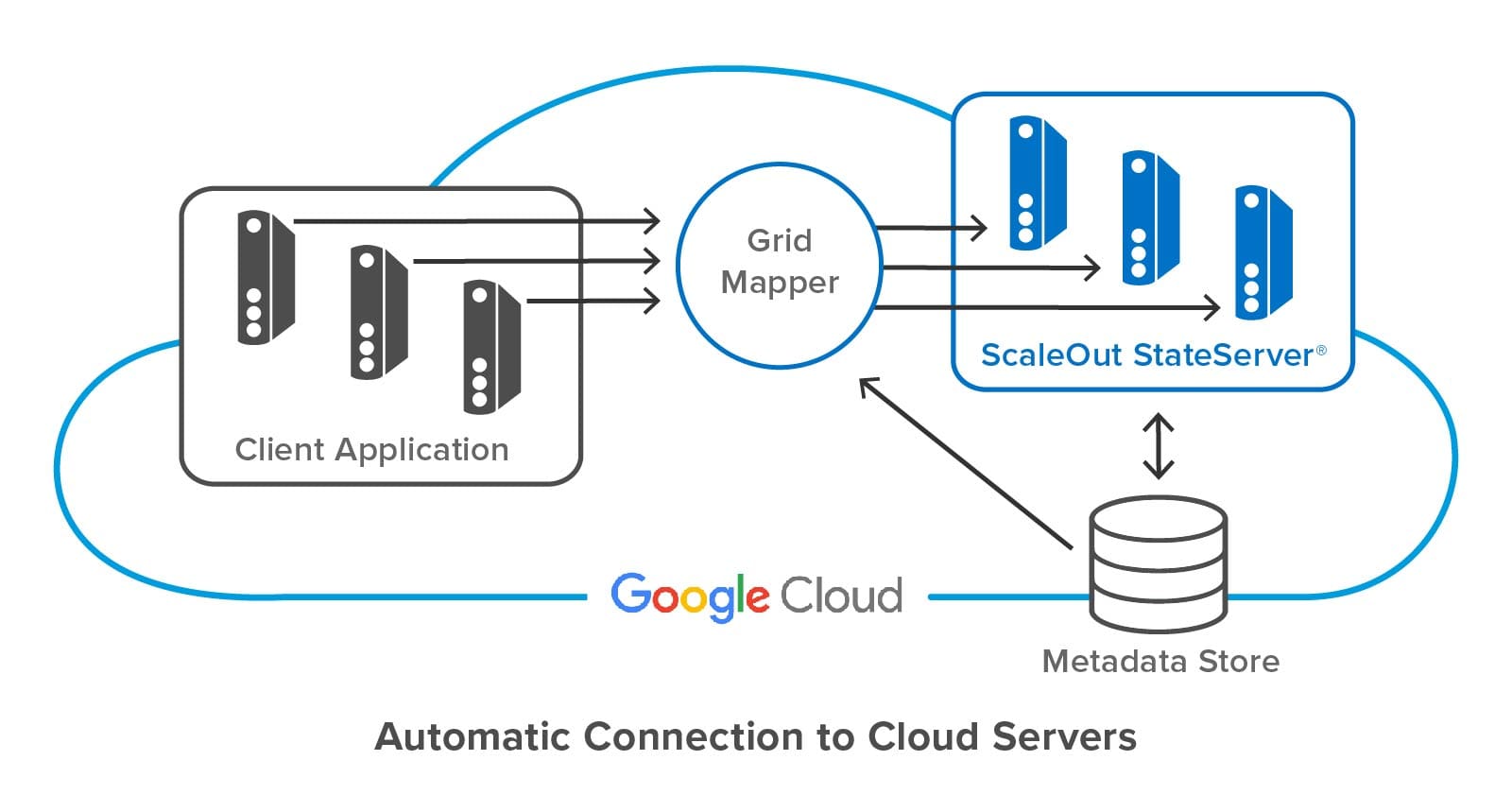

To avoid this problem, ScaleOut StateServer lets client applications specify a store name and credentials to access a cloud-hosted distributed cache. ScaleOut’s client libraries internally use this store name to discover the IP addresses of caching servers from metadata stored in each server.

The following diagram shows a client application connecting to a ScaleOut StateServer distributed cache hosted in Google Cloud. ScaleOut’s client libraries make use of an internal software component called a “grid mapper” which acts as a bootstrap mechanism to find all servers belonging to a specified cache using its store name. The grid mapper accesses the metadata for the associated caching servers and returns their IP addresses back to the client library. The grid mapper handles any potential changes in IP addresses, such as servers being added or removed for scaling purposes.

Summing up

Because they provide elastic computing resources and high performance, public clouds, such as Google Cloud, offer an excellent platform for hosting distributed caches. However, the ephemeral nature of their virtual servers introduces challenges for both deploying the cluster and connecting applications. Keeping deployment and management as simple as possible is essential to controlling operational costs. ScaleOut StateServer makes use of centralized management, server metadata, and automatic client connections to address these challenges. It ensures that applications derive the full benefits of the cloud’s elastic resources with maximum ease of use and minimum cost.

The post Deploying ScaleOut’s Distributed Cache In Google Cloud appeared first on ScaleOut Software.

]]>The post Steve Smith Review: Simplify Redis Clustering with ScaleOut IMDB appeared first on ScaleOut Software.

]]>

Check out the blog post and video from distinguished software architect and .NET guru Steve “ardalis” Smith on the challenges of scaling single-server Redis and how ScaleOut In-Memory Database tackles them with fully automated cluster technology to avoid complex manual configuration steps.

tackles them with fully automated cluster technology to avoid complex manual configuration steps.

Steve Smith is a well-known entrepreneur and software developer. He is passionate about building quality software and spreading his knowledge through training workshops, speaking at developer conferences, and sharing his experience on his blog and podcast. Steve Smith has also been recognized as a Microsoft MVP for over ten consecutive years.

The post Steve Smith Review: Simplify Redis Clustering with ScaleOut IMDB appeared first on ScaleOut Software.

]]>The post Introducing a New ScaleOut Java Client API appeared first on ScaleOut Software.

]]>

by Brandon Ripley, Senior Software Engineer

ScaleOut Software introduces a new Java client API for our distributed caching platform, ScaleOut StateServer®, that adds important new features for Java applications. It was designed with cloud-based deployments in mind, enabling clients to access ScaleOut in-memory data grids (IMDGs also called distributed caches) in multiple availability zones. It introduces the use of connection strings with DNS support for connecting Java clients to IMDGs, and it allows multiple, simultaneous connections to different caches. It also includes asynchronous APIs that add flexibility to application development.

You can download the JAR for the client API from ScaleOut’s Maven repository at https://repo.scaleoutsoftware.com. Simply connect your build tool to the repository and reference the API as a dependency to get started. The online User Guide can help you setup a project. Alternatively, you can download the JAR directly from the repo and then host the JAR with your build tool of choice. You can find an API reference here.

Let’s take a brief tour of the new Java APIs and look at an example using Docker for accessing multiple IMDGs.

A Quick Tour of the Java Client

The ScaleOut client API for Java lets client applications store and retrieve POJOs (plain old java objects) from a ScaleOut IMDG and provides an easy to use, fast, cloud-ready caching API. It can be used within any web application and is independent of any framework. This means that you can use the ScaleOut client API within your existing application architecture.

To simplify the developer experience, the API is logically divided into three primary packages:

Client Package

The client package houses the GridConnection class for connecting to a ScaleOut IMDG via a connection string. Each instance of GridConnection maintains a set of TCP connections to a ScaleOut cache and transparently handles retries, host failures, and load balancing.

The client package is also the place to register for event handling. ScaleOut servers can fire events for objects that are expiring and events for backing store operations (that is, read-through, refresh-ahead, write-behind, and erase-behind). The ServiceEvents class is used to register an event handler for events fired by the grid.

Caching Package

The caching package contains the strongly typed Cache<K,V> class that is used for all caching operations to store and retrieve POJOs of type V using a key of type K from a name space within the IMDG. All caching operations return a CacheResponse that details the result of the cache access.

For example, a successful access that adds a value to the cache using:

cache.add(key, value)

returns a CacheResponse with the status ObjectAdded, which can be obtained by calling the CacheResponse.getStatus() method. However, if the cache already contained an object for the key and the access was called again, CacheResponse.getStatus() would return ObjectAlreadyExists. (See the Javadoc for all possible responses.)

Query Package

The query package lets you perform queries to select objects from the IMDG. Queries are constructed using query filters created using the FilterFactory class. A filter can consist of a simple equality predicate, or it can combine multiple predicates to query with finer granularity.

Sample Applications

The following samples show how the ScaleOut Java client API can be used within a microservices architecture to access cached data and process events. The client API make it easy to develop modern web applications.

In these samples we will:

- Write an application that connects to two ScaleOut IMDGs to store and retrieve objects. (The two caches are configured to replicate data to each other using ScaleOut GeoServer®.)

- Write a second application that registers for and handles ScaleOut expiration events.

- Create four dockerfiles: the caching application, the expiration event handling application, and two ScaleOut IMDGs.

- Use the Docker compose command to spawn all four containers and run the two applications.

You can find the full samples, including the dockerfiles, on GitHub. Let’s look at the code for these two applications.

Accessing Multiple IMDGs

The first application’s goal is to verify ScaleOut GeoServer replication between two IMDGs. It first connects to the two IMDGs, creates an instance of Cache(K,V) for each IMDG, and then performs accesses.

The application connects to the grid using the GridConnection.connect() static method to instantiate a GridConnection object for each IMDG (named store1 and store2 here):

GridConnection store1Connection = GridConnection.connect("bootstrapGateways=store1:2721");

GridConnection store2Connection = GridConnection.connect("bootstrapGateways=store2:3721");

The next step is to create an instance of Cache(K,V) for each IMDG. Caches are instantiated with a GridConnection which associates the instance with a specific IMDG. This allows different instances to connect to different IMDGs.

The Java client API uses a builder pattern to instantiate caches. For applications using dependency injection, the immutable cache guarantees that the defaults we set at build time will stay consistent for the lifetime of the app. This is great for large applications with many caches as it guarantees there will be no unexpected modifications.

On the builder we can specify properties for defaults. Here is an example that sets an object timeout of fifteen seconds and a timeout type of Absolute (versus ResetOnUpdate or Sliding). The string “example” specifies the cache’s name space:

Cache<Integer, String> store1Cache = new CacheBuilder<Integer, String>(store1Connection, "example", Integer.class)

.objectTimeout(Duration.ofSeconds(15))

.timeoutType(TimeoutType.Absolute)

.build();

The Cache(K,V) class has multiple signatures for storing and retrieving objects from the IMDG. These signatures follow traditional Java naming semantics for distributed caching. For example, the add(key,value) method assumes that no key/value object mapping exists in the cache, whereas update(key,value) assumes than a key/value mapping exists in the cache.

This application uses the add method to insert an item into store1Cache and then checks the response for success. Here’s a code sample that adds two items to the cache:

CacheResponse<String, String> addResponse = store1Cache.add(“MyKey”, "SomeValue");

if(addResponse.getStatus() != RequestStatus.ObjectAdded)

System.out.println("Unexpected request status " + response.getStatus());

addResponse = store1Cache.add(“MyFavoriteKey”, "MyFavoriteValue");

if(addResponse.getStatus() != RequestStatus.ObjectAdded)

System.out.println("Unexpected request status " + response.getStatus());

The application’s goal is to verify that ScaleOut GeoServer replicates stored objects from the store1 IMDG to store2. It creates an instance of Cache(K,V) for the same namespace on store2 and then attempts to retrieve the object with the read API:

CacheResponse<String, String> readResponse = store2Cache.read(“Key”);

if(readResponse.getStatus() != RequestStatus.ObjectAdded)

System.out.println("Unexpected request status " + response.getStatus());

Registering for Events

This sample application demonstrates how an application can have fine grain control over which objects will be removed from the IMDG after a time interval elapses. With the object timeout and timeout-type properties established, objects added to the IMDG will be subject to expiration. When an object expires, the ScaleOut grid will fire an expiration event.

Our application can register to handle expiration events by supplying an instance of Cache(K,V) and an appropriate lambda (or implementing class) to the ServiceEvents static method. The following code removes all objects other than a cache entry mapping with the key, “MyFavoriteKey”:

ServiceEvents.setExpirationHandler(cache, new CacheEntryExpirationHandler<Integer, String>() {

@Override

public CacheEntryDisposition handleExpirationEvent(Cache<Integer, String> cache, String key) {

System.out.println("ObjectExpired: " + key);

if(key.compareTo(“MyFavoriteKey”) == 0)

return CacheEntryDisposition.Save;

return CacheEntryDisposition.Remove;

}});

Running the Applications

We’ve created code snippets for connecting to a ScaleOut grid, creating a cache, and registering for ScaleOut expiration events. We can put all these pieces together to create the two applications with two Java classes called CacheRunner and CacheExpirationListener.

CacheRunner connects to two ScaleOut IMDGs that are setup for push replication using ScaleOut GeoServer. (This is handled by the infrastructure via the dockerfiles and not done in code.) It creates an instance of Cache(K,V) associated with one of the IMDG (called store1) that has a very small absolute timeout for each object and another instance for the other IMDG (called store2). It stores an object in store1 and then retrieves it from store2 to verify that the object was pushed from one IMDG to the other.

Here is the code for CacheRunner:

package com.scaleout.caching.sample;

import com.scaleout.client.GridConnectException;

import com.scaleout.client.GridConnection;

import com.scaleout.client.caching.*;

import java.time.Duration;

public class CacheRunner {

public static void main(String[] args) throws CacheException, GridConnectException {

System.out.println("Connecting to store 1...");

GridConnection store1Connection = GridConnection.connect("bootstrapGateways=store1:2721");

System.out.println("Connecting to store 2...");

GridConnection store2Connection = GridConnection.connect("bootstrapGateways=store2:3721");

Cache<String, String> store1Cache = new CacheBuilder<String, String>(store1Connection, "sample", String.class)

.geoServerPushPolicy(GeoServerPushPolicy.AllowReplication)

.objectTimeout(Duration.ofSeconds(15))

.objectTimeoutType(TimeoutType.Absolute)

.build();

Cache<String, String> store2Cache = new CacheBuilder<String, String>(store2Connection, "sample", String.class)

.build();

System.out.println("Adding object to cache in store 1!");

CacheResponse<String, String> addResponse = store1Cache.add("MyKey", "MyValue");

System.out.println("Object " + ((addResponse.getStatus() == RequestStatus.ObjectAdded ? "added" : "not added."))

+ " to cache in store 1.");

addResponse = store1Cache.add("MyFavoriteKey", "MyFavoriteValue");

System.out.println("Object " + ((addResponse.getStatus() == RequestStatus.ObjectAdded ? "added" : "not added."))

+ " to cache in store 1.");

System.out.println("Reading object from cache in store 2!");

CacheResponse<String,String> readResponse = store2Cache.read("foo");

System.out.println("Object " + ((readResponse.getStatus() == RequestStatus.ObjectRetrieved ?

"retrieved" : "not retrieved.")) + " from cache in store 2.");

}

}

CacheExpirationListener connects to one ScaleOut IMDG, create an instance of Cache(K,V), and registers for expiration events. Here is its code:

package com.scaleout.caching.sample;

import com.scaleout.client.GridConnectException;

import com.scaleout.client.GridConnection;

import com.scaleout.client.ServiceEvents;

import com.scaleout.client.ServiceEventsException;

import com.scaleout.client.caching.*;

import java.io.IOException;

import java.time.Duration;

import java.util.concurrent.CountDownLatch;

public class ExpirationListener {

public static void main(String[] args) throws ServiceEventsException, IOException, InterruptedException,

GridConnectException {

GridConnection store1Connection = GridConnection.connect("bootstrapGateways=store1:2721");

Cache<String, String> store1Cache = new CacheBuilder<String, String>(store1Connection, "sample", String.class)

.geoServerPushPolicy(GeoServerPushPolicy.AllowReplication)

.objectTimeout(Duration.ofSeconds(15))

.objectTimeoutType(TimeoutType.Absolute)

.build();

ServiceEvents.setExpirationHandler(store1Cache, new CacheEntryExpirationHandler<String, String>() {

@Override

public CacheEntryDisposition handleExpirationEvent(Cache<String, String> cache, String key) {

CacheEntryDisposition disposition = CacheEntryDisposition.NotHandled;

System.out.printf("Object (%s) expired\n", key);

if(key.equals("MyFavoriteKey"))

disposition = CacheEntryDisposition.Save;

else disposition = CacheEntryDisposition.Remove;

return disposition;

}

});

}

}

To run these applications, we’ll use the Docker compose command to build Docker containers. We will have 4 services, each defined in their own respective dockerfile, which are all provided and available on the GitHub repo. You can clone the repository and then run the deployment with the following command:

docker-compose -f ./docker-compose.yml up -d –build

Here is the expected output for CacheRunner:

Adding object to cache in store 1! Object added to cache in store 1. Object added to cache in store 1. Reading object from cache in store 2! Object retrieved. from cache in store 2.

Here is the output for ExpirationListener:

Connected to store1! Object (MyFavoriteKey) expired Object (MyKey) expired

Summing Up

The new ScaleOut client API for Java adds important features that support the development of modern web and cloud applications. Built-in support for connection strings enables simultaneous connections to multiple IMDGs using DNS entries. Full support for asynchronous accesses also assists in application development. Let us know what you think with your comments on our community forum.

The post Introducing a New ScaleOut Java Client API appeared first on ScaleOut Software.

]]>The post Introducing A New Execution Platform for Redis Clients appeared first on ScaleOut Software.

]]>

The Challenge

Redis®* offers a compelling set of data structures that enhance the capabilities of a distributed cache beyond just storing serialized objects. Created in 2009 as a single-server store to assist in the design of a web server, Redis gives applications numerous useful options for organizing stored data, including sets, lists, and hashes. Cluster support was added later, and it introduced specialized concepts, like hashslots and master/replica shards, that system administrators must understand and manage. Along with its use of eventual consistency, this has created complexity that makes cluster management challenging while reducing flexibility in configurations.

In contrast, ScaleOut StateServer®, a distributed cache for serialized objects and first released in 2005, was designed from the ground up to run on a server cluster with automated load-balancing, data replication, and recovery while storing data with full consistency (i.e., sequential consistency) across replicas. It also executes client requests using all available processing cores for maximum throughput. These features dramatically simplify cluster management, especially for enterprise users, improve flexibility, and lower TCO. For example, unlike Redis, ScaleOut server clusters can seamlessly grow from a single to multiple servers, and system administrators do not need to manage hashslots or master/replica shards. See a recent blog post that discusses how ScaleOut StateServer simplifies cluster management in comparison to Redis.

ScaleOut Software recognized that running Redis commands on a ScaleOut StateServer cluster would offer Redis users the best of both worlds: familiar and rich data structures combined with significantly simpler cluster management and full data consistency. However, the ideal implementation would need to use Redis open-source code to execute Redis commands so that client commands would behave identically to open-source Redis clusters. The challenge is then to integrate Redis code into ScaleOut StateServer’s execution platform and take advantage of ScaleOut’s highly automated clustering features while eliminating the single-threaded constraints of Redis’s event-loop architecture.

Integrating Redis into ScaleOut StateServer

Released as a community preview, version 5.11 of ScaleOut StateServer introduces support for the most popular Redis data structures (strings, sets, lists, hashes, and sorted sets) plus publish/subscribe commands, transactions, and various utility commands (such as FLUSHDB and EXPIRE). Both Windows and Linux versions are available. This release uses open-source Redis version 6.2.5 to process Redis commands.

Redis clients connect to any ScaleOut StateServer server in a cluster using the standard RESP protocol. (A cluster can contain one or more servers.) Client libraries internally obtain the mapping of hashslots to servers using either the CLUSTER SLOTS or CLUSTER NODES commands and then direct Redis access requests to the appropriate ScaleOut server. To maximize throughput, each ScaleOut server processes incoming Redis commands on multiple threads using all available processor cores; there is no need to deploy multiple shards on each server for this purpose.

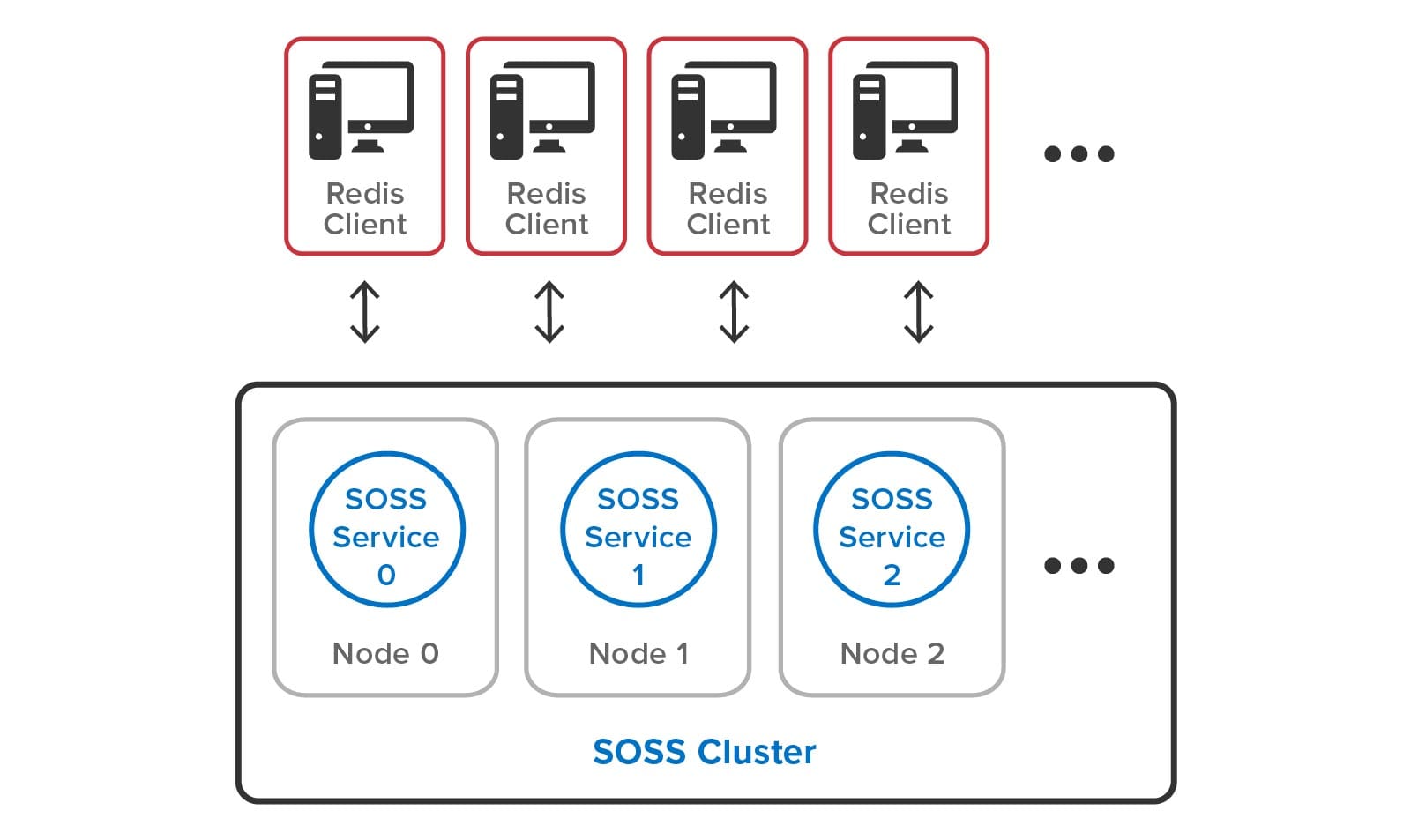

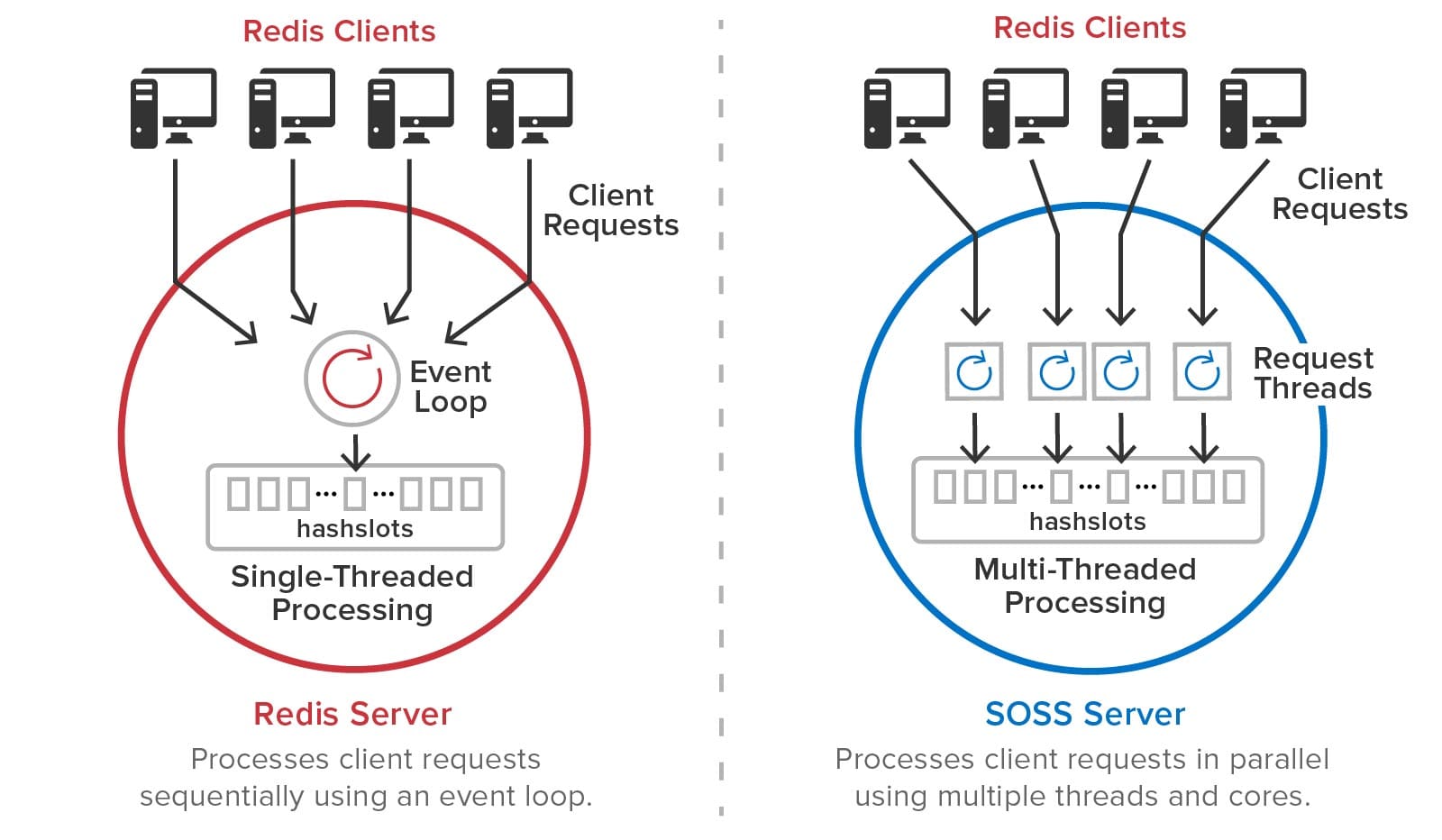

The following diagram shows a set of Redis clients connecting to a ScaleOut StateServer cluster. Note that the complexities of hashslots and shards have been eliminated:

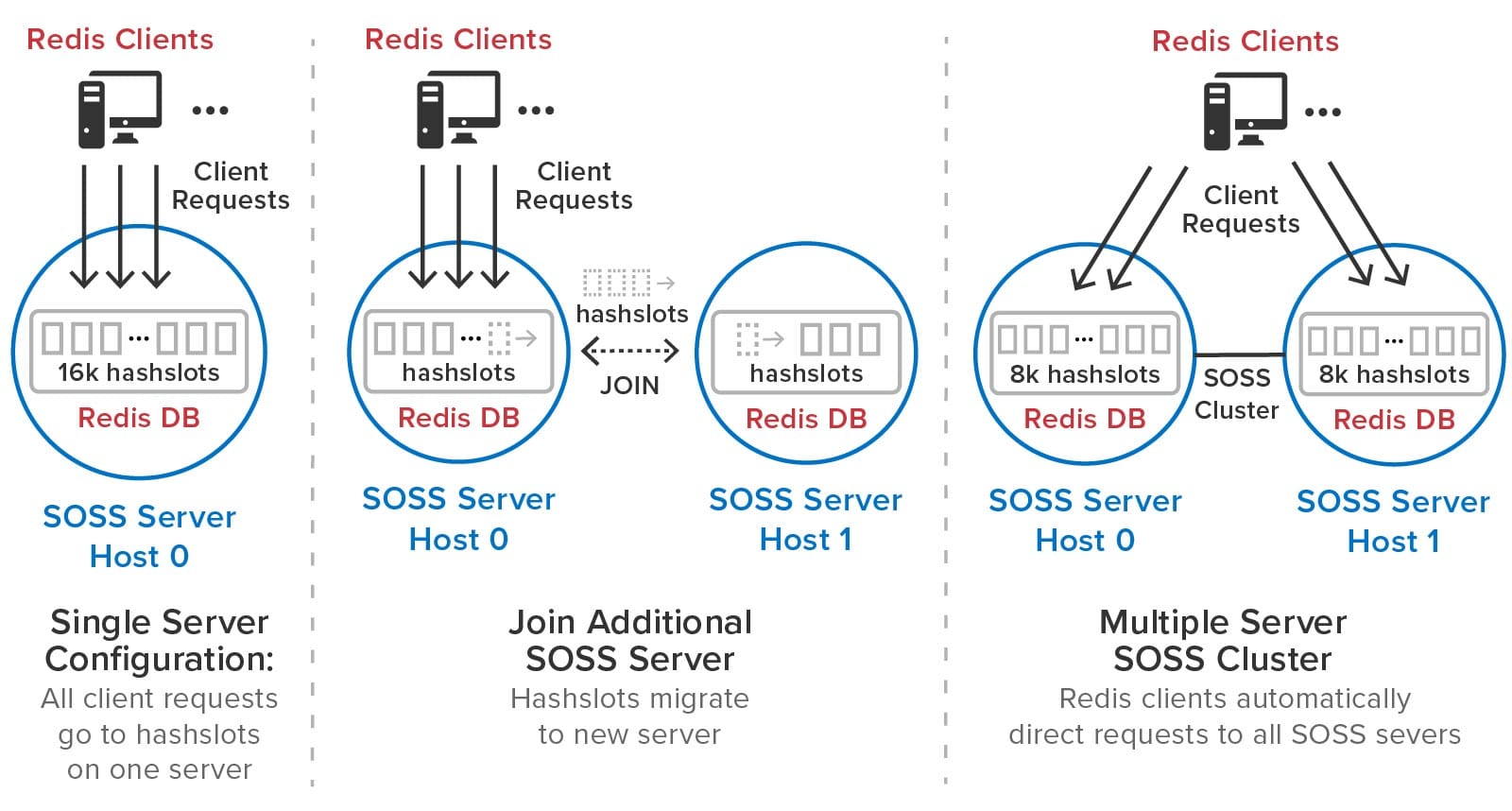

As the need for additional throughput grows, system administrators can simply join new servers to the cluster. ScaleOut StateServer automatically rebalances the hashslots across the cluster as servers are added or removed. It also delays execution of Redis commands during load-balancing (and recovery) to give clients a consistent picture of hashslot placement and avoid client exceptions. After a hashslot has fully migrated to a remote server, a requesting client is returned the Redis -MOVED indication so that it can redirect its request to the new server.

The following diagram illustrates how ScaleOut StateServer automatically manages hashslots. In this example, it migrates half of the hashslots to a second server that joins a cluster:

ScaleOut StateServer automatically creates replicas for all hashslots. There is no need for system administrators to manually create master and replica shards or move them from server to server during membership changes and recovery. ScaleOut StateServer automatically places replicas on different servers from their corresponding primary hashslots and migrates them as necessary during membership changes to ensure optimal load-balancing. If a server fails or has a network outage, ScaleOut StateServer automatically “self-heals” by promoting replicas to primaries and creating new replicas as necessary.

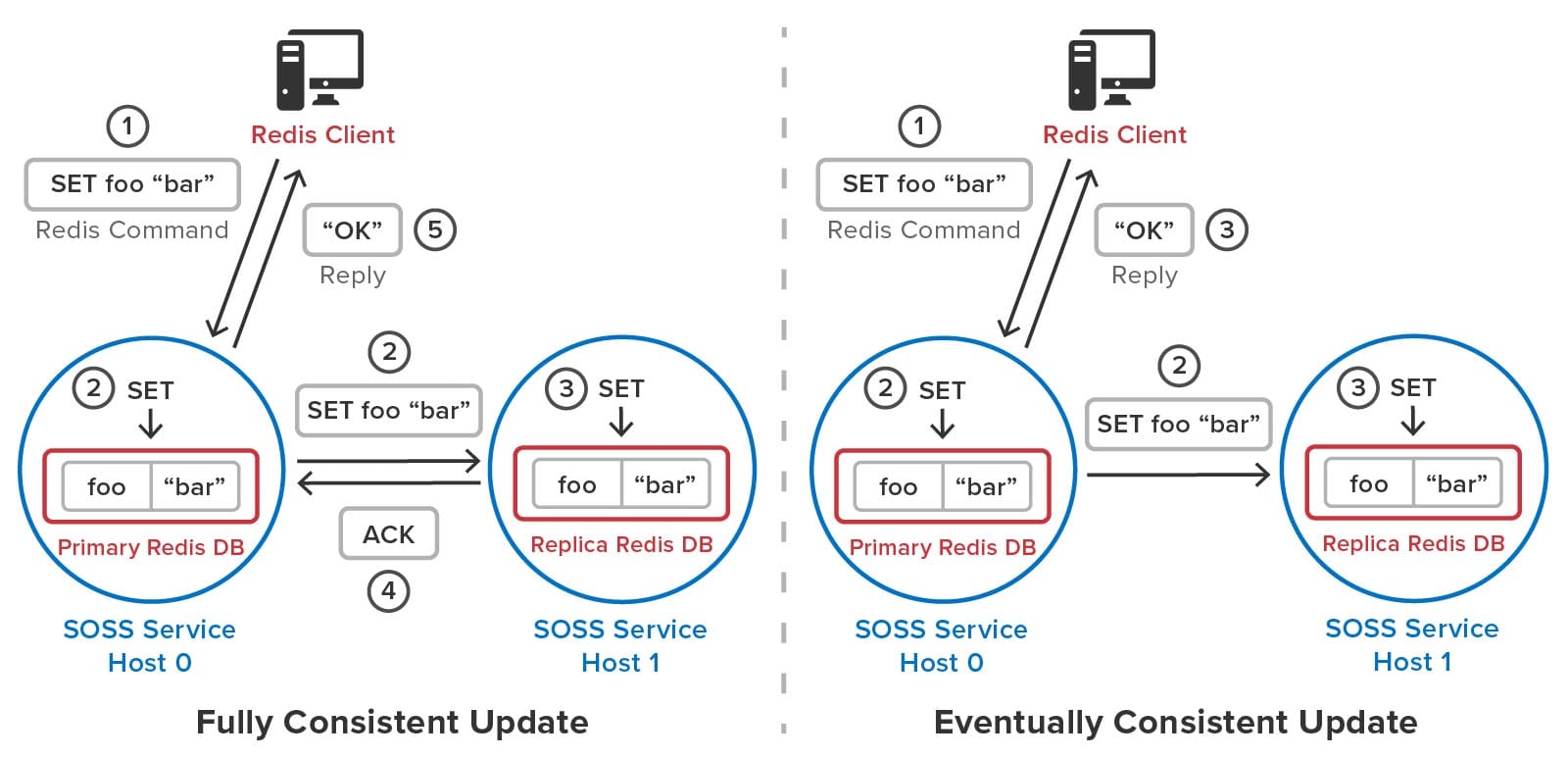

To avoid serving stale data to clients after recovery from an outage, ScaleOut StateServer uses a patented quorum algorithm to implement fully consistent updates to stored objects. In contrast, Redis uses an eventual consistency model for updating replicas. (To maximize throughput at the expense of data consistency, ScaleOut StateServer can optionally be configured for eventual consistency.) When a server receives a Redis command, it executes this command on a quorum containing the primary hashslot and replicas (one or two in the current implementation) prior to returning to the client. Transactions are processed in the same manner.

The following diagram compares the full and eventually consistent models for updating replicas and shows how they differ in behavior. A fully consistent update waits for the replica to be updated prior to returning to the client, whereas an eventually consistent update does not. If a primary server should fail prior to committing the replica’s update, the cluster could lose the update and serve stale data to clients.

Implementation Details

The following diagram shows how Redis open-source code has been integrated into ScaleOut StateServer:

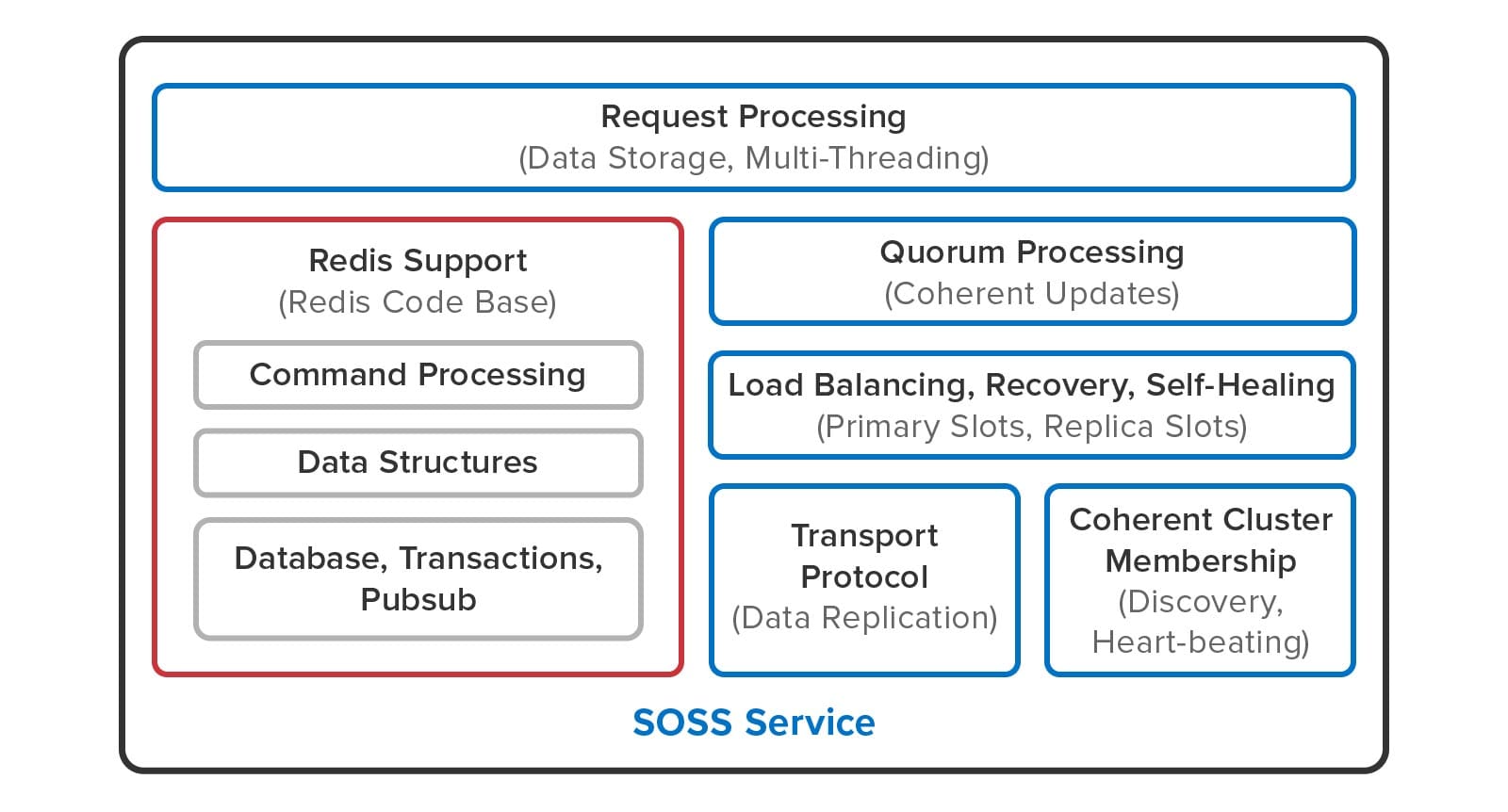

Redis open-source code (shown in the red box) implements command parsing and processing, the data structure commands, transactions, publish/subscribe commands, and blocking commands. ScaleOut StateServer takes over all clustering functions, including request processing, membership, quorum processing of updates, load-balancing, recovery, and self-healing. It also uses a proprietary transport protocol for server-to-server communication.

As illustrated below, ScaleOut StateServer uses multi-threaded execution for Redis commands to take advantage of all processing cores and eliminate the need for multiple primary shards on each server. In contrast, Redis executes commands using an event loop that processes commands sequentially on a single processing core:

To accomplish this, ScaleOut StateServer has implemented a command scheduler that independently executes commands for each hashslot so that they can run in parallel without global locking.

What’s Missing?

The community preview release focuses on demonstrating support for Redis data structures, which represent the widely used core of Redis functionality. It does not include support for Redis streams, Lua scripting, modules, AOL/RDB persistence, ACLs, and Redis configuration files. In addition, many utility commands which are not required, such as cluster commands for manually moving hashslots, are not supported. Lastly, this version does not incorporate all of the performance enhancements in development for the production release.

Summing Up

ScaleOut’s new integration of Redis open-source code into ScaleOut StateServer was designed to bring powerful new capabilities to Redis users while ensuring native-Redis behavior for client applications. Targeted to meet the needs of enterprise users, it dramatically simplifies the management of Redis clusters by automating all cluster operations, and it ensures that fully consistent updates are performed by Redis commands. In addition, this integration runs alongside ScaleOut StateServer’s native APIs, which incorporate advanced features not available on open-source Redis clusters, such as data-parallel computing, streaming analytics, and coherent, wide-area data replication.

ScaleOut Software is excited to hear your feedback about the community preview and learn what additional features you would like to see in the upcoming production release. You can download ScaleOut StateServer, which incorporates the preview release, here for Linux or Windows and try it out now. Let us know what you think.

*Redis is a registered trademark of Redis Ltd. and the Redis box logo is a mark of Redis Ltd. Any rights therein are reserved to Redis Ltd. Any use by ScaleOut Software is for referential purposes only and does not indicate any sponsorship, endorsement or affiliation between Redis and ScaleOut Software.

The post Introducing A New Execution Platform for Redis Clients appeared first on ScaleOut Software.

]]>The post Deploying Real-Time Digital Twins On Premises with ScaleOut StreamServer DT appeared first on ScaleOut Software.

]]>

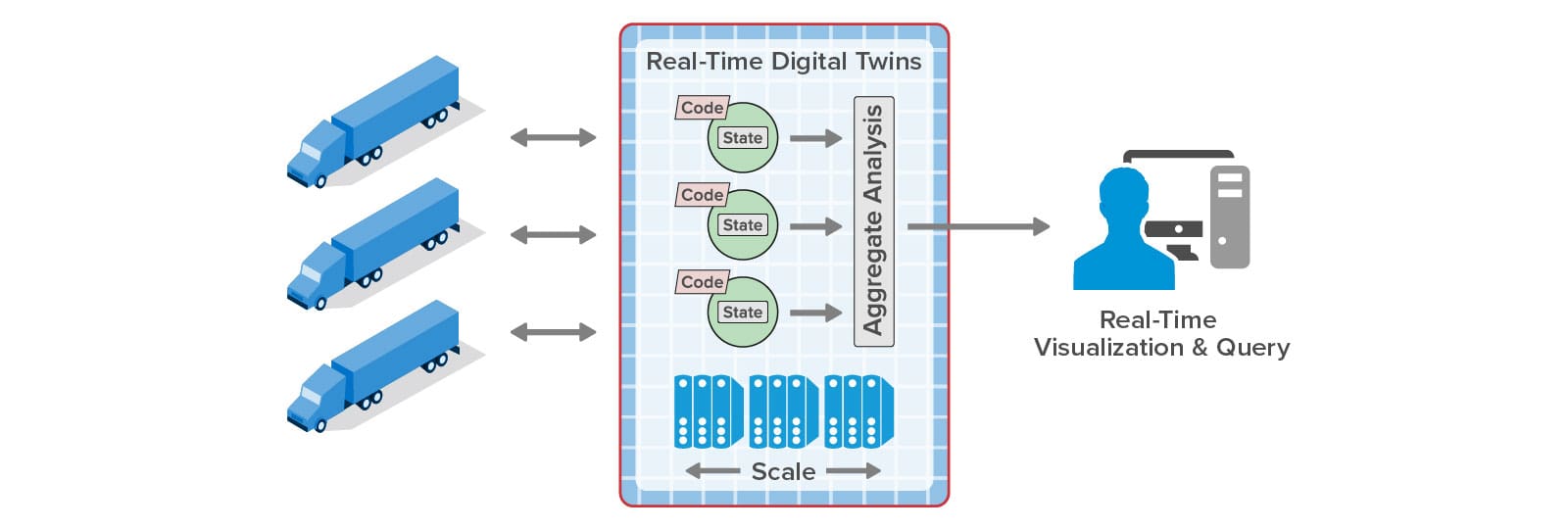

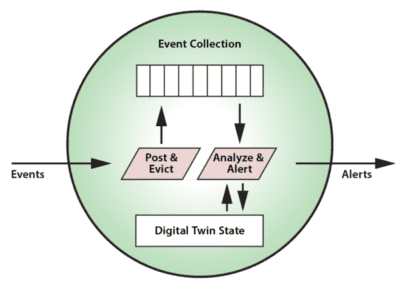

With the ScaleOut Digital Twin Streaming Service , an Azure-hosted cloud service, ScaleOut Software introduced breakthrough capabilities for streaming analytics using the real-time digital twin concept. This new software model enables applications to easily analyze telemetry from individual data sources in 1-3 milliseconds while maintaining state information about data sources that deepens introspection. It also provides a basis for applications to create key status information that the streaming platform aggregates every few seconds to maximize situational awareness. Because it runs on a scalable, highly available in-memory computing platform, it can do all this simultaneously for hundreds of thousands or even millions of data sources.

, an Azure-hosted cloud service, ScaleOut Software introduced breakthrough capabilities for streaming analytics using the real-time digital twin concept. This new software model enables applications to easily analyze telemetry from individual data sources in 1-3 milliseconds while maintaining state information about data sources that deepens introspection. It also provides a basis for applications to create key status information that the streaming platform aggregates every few seconds to maximize situational awareness. Because it runs on a scalable, highly available in-memory computing platform, it can do all this simultaneously for hundreds of thousands or even millions of data sources.

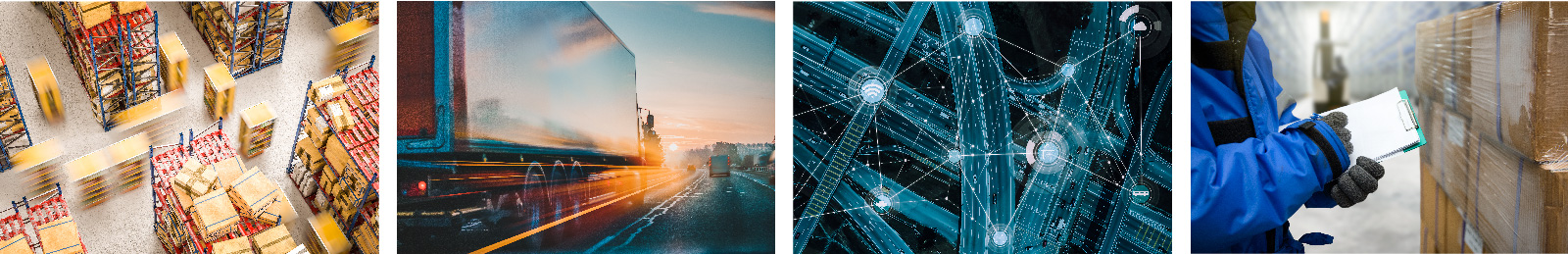

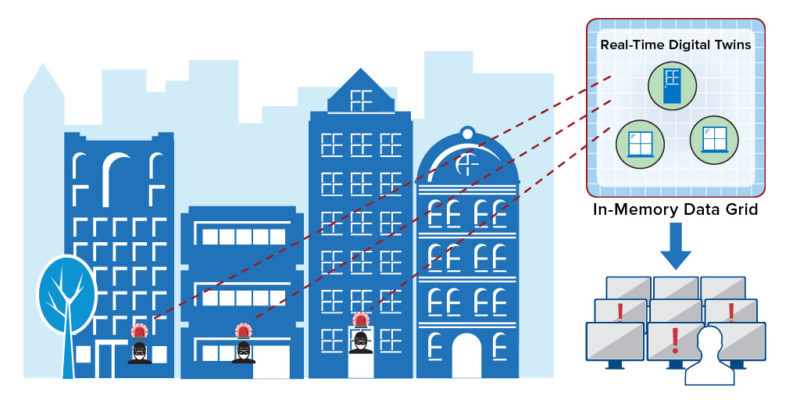

The unique capabilities of real-time digital twins can provide important advances for numerous applications, including security, fleet telematics, IoT, smart cities, healthcare, and financial services. These applications are all characterized by numerous data sources which generate telemetry that must be simultaneously tracked and analyzed, while maintaining overall situational awareness that immediately highlights problems of concern an/or opportunities of interest. For example, consider some of the new capabilities that real-time digital twins can provide in fleet telematics and vaccine distribution during COVID-19.

To address security requirements or the need for tight integration with existing infrastructure, many organizations need to host their streaming analytics platform on-premises. Scaleout StreamServer® DT was created to meet this need. It combines the scalable, battle-tested in-memory data grid that powers ScaleOut StreamServer with the graphical user interface and visualization features of the cloud service in a unified, on-premises deployment. This gives users all of the capabilities of the ScaleOut Digital Twin Streaming Service with complete infrastructure control.

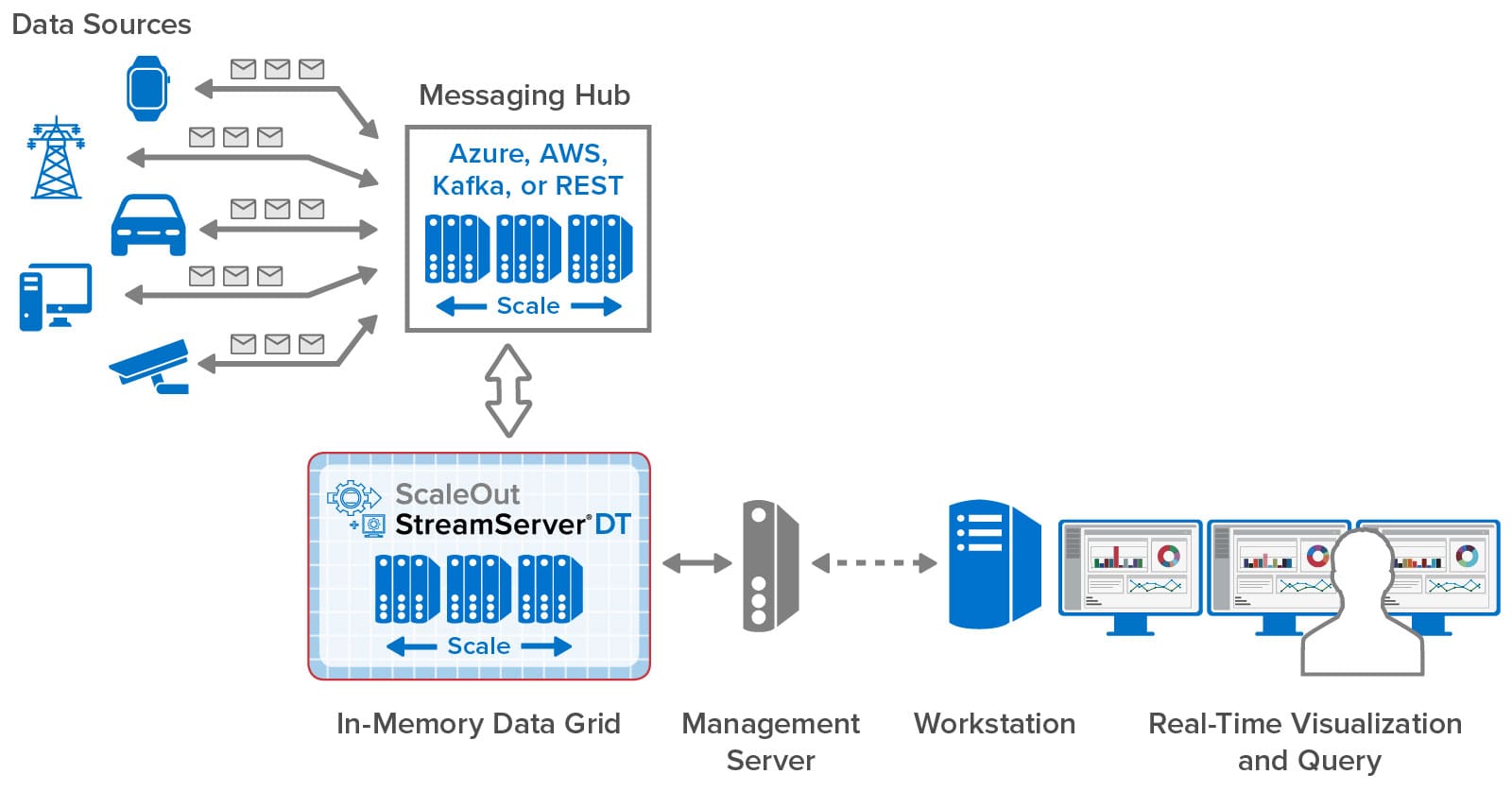

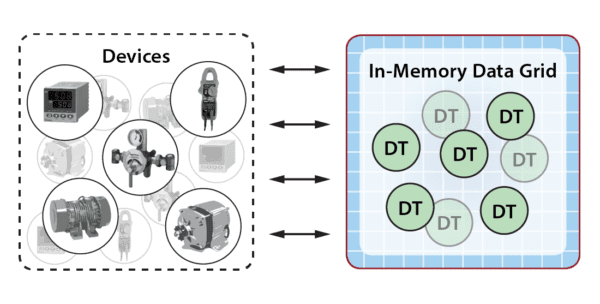

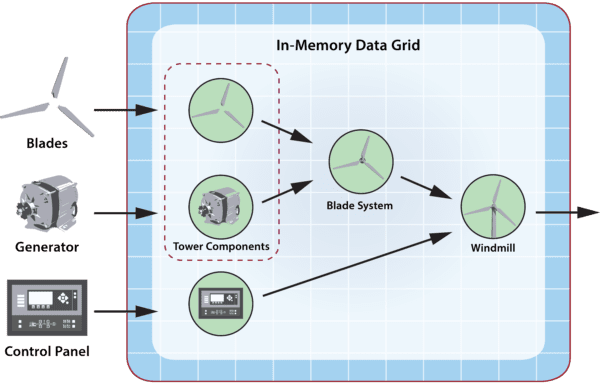

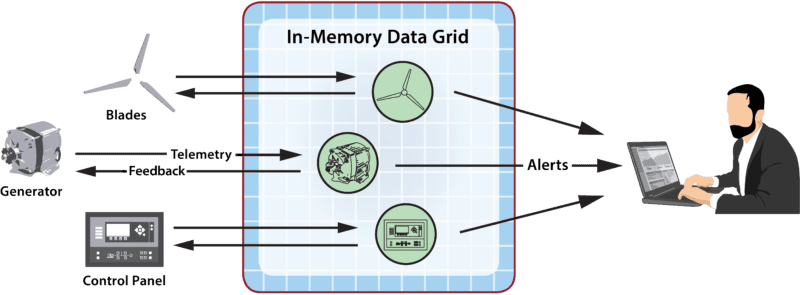

As illustrated in the following diagram, ScaleOut StreamServer DT installs its management console on a standalone server that connects to ScaleOut StreamServer’s in-memory data grid. This console hosts the graphical user interface that is securely accessed by remote workstations within an organization. It also deploys real-time digital twin models to the in-memory data grid, which hosts instances of digital twins (one per data source) and runs application-defined code to process incoming messages. Message are delivered to the grid using messaging hubs, such as Azure IoT Hub, AWS IoT Core, Kafka, a built-in REST service, or directly using APIs.

The management console installs as a set of Docker containers on the management server. This simplifies the installation process and ensures portability across operating systems. Once installed, users can create accounts to control access to the console, and all connections are secured using SSL. The results of aggregate analytics and queries performed within the in-memory data grid can then be accessed and visualized on workstations running throughout an organization.

Because ScaleOut’s in-memory data grid runs in an organization’s data center and avoids the requirement to use a cloud-hosted message hub or REST service, incoming messages from data sources can be processed with minimum latency. In addition, application code running in real-time digital twins can access local resources, such as databases and alerting systems, with the best possible performance and security. Use of dedicated computing resources for the in-memory data grid delivers the highest possible throughput for message processing and real-time analytics.

While cloud hosting of streaming analytics as a SaaS (software-as-a-service) offering creates clear advantages in reducing capital costs and providing access to highly elastic computing resources, it may not be suitable for organizations which need to maintain full control of their infrastructures to address security and performance requirements. ScaleOut StreamServer DT was designed to meet these needs and deliver the important, unique benefits of streaming analytics using real-time digital twins to these organizations.

The post Deploying Real-Time Digital Twins On Premises with ScaleOut StreamServer DT appeared first on ScaleOut Software.

]]>The post Use Digital Twins for the Next Generation in Telematics appeared first on ScaleOut Software.

]]>

Real-Time Digital Twins Can Add Important New Capabilities to Telematics Systems and Eliminate Scalability Bottlenecks

Rapid advances in the telematics industry have dramatically boosted the efficiency of vehicle fleets and have found wide ranging applications from long haul transport to usage-based insurance. Incoming telemetry from a large fleet of vehicles provides a wealth of information that can help streamline operations and maximize productivity. However, telematics architectures face challenges in responding to telemetry in real time. Competitive pressures should spark innovation in this area, and real-time digital twins can help.

Current Telematics Architecture

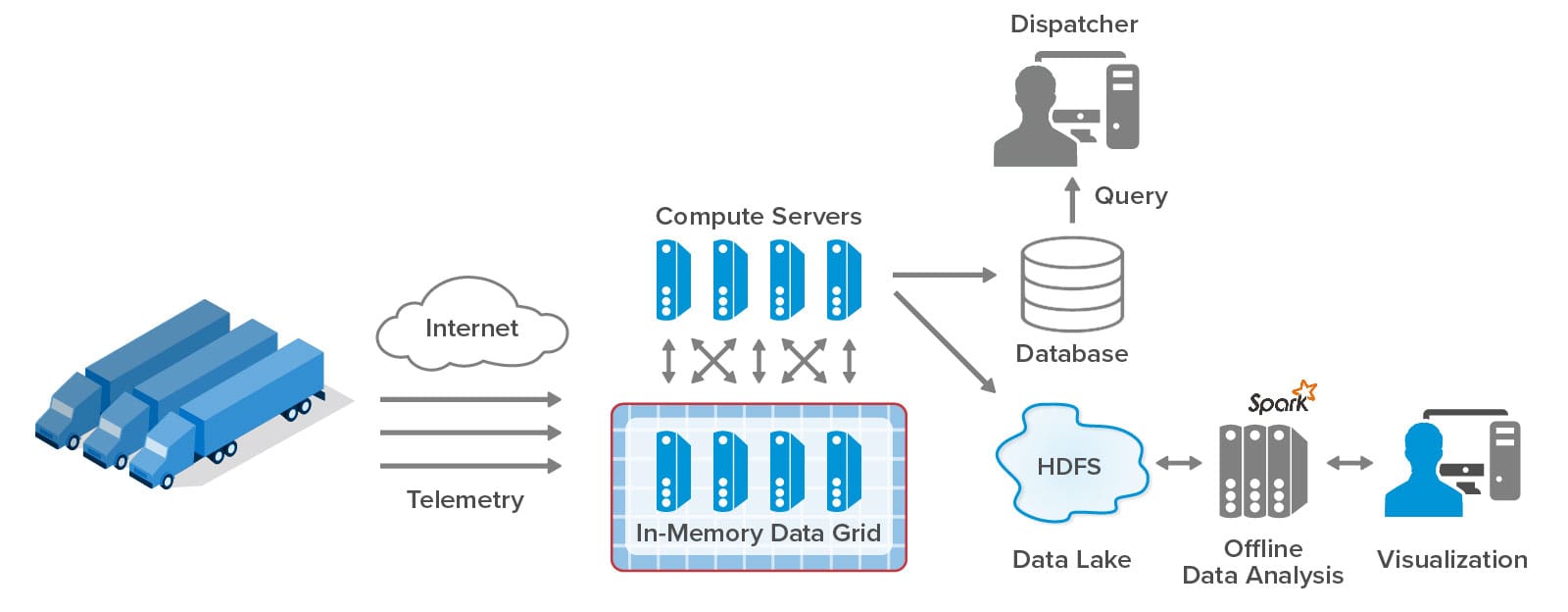

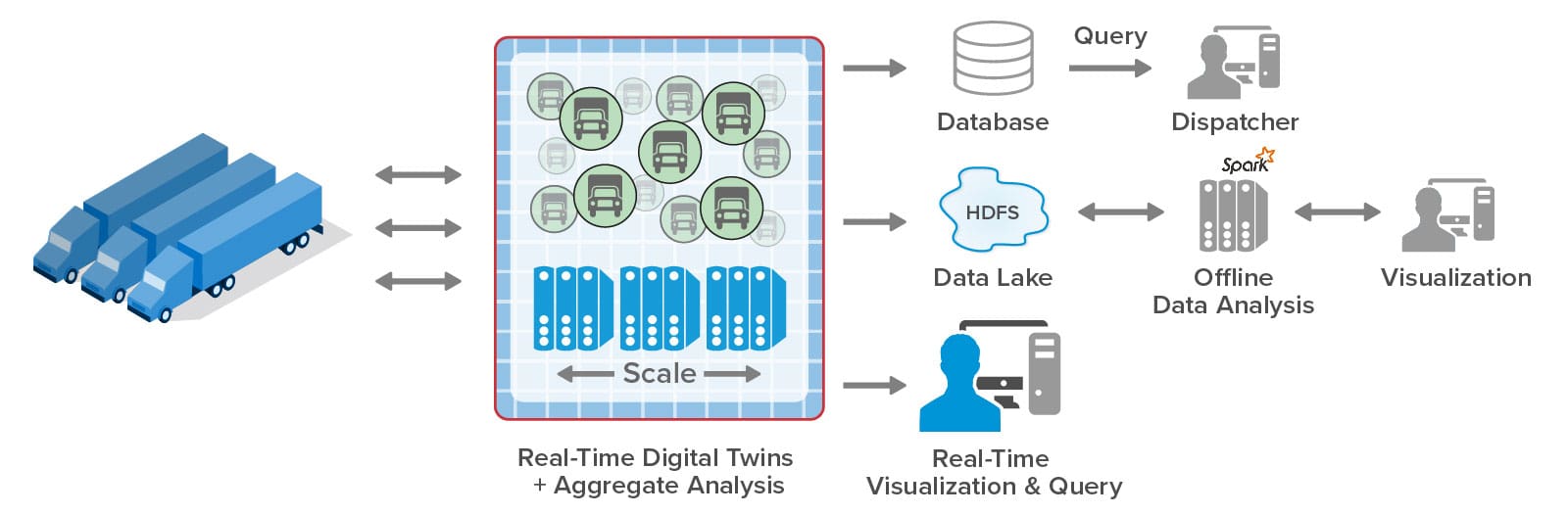

The volume of incoming telemetry challenges current telematics systems to keep up and quickly make sense of all the data. Here’s a typical telematics architecture for processing telemetry from a fleet of trucks:

Each truck today has a microprocessor-based sensor hub which collects key telemetry, such as vehicle speed and acceleration, engine parameters, trailer parameters, and more. It sends messages over the cell network to the telematics system, which uses its compute servers (that is, web and application servers) to store incoming messages as snapshots in an in-memory data grid, also known as a distributed cache. Every few seconds, the application servers collect batches of snapshots and write them to the database where they can be queried by dispatchers managing the fleet. At the same time, telemetry snapshots are stored in a data lake, such as HDFS, for offline batch analysis and visualization using big data tools like Spark. The results of batch analysis are typically produced after an hour’s delay or more. Lastly, all telemetry is archived for future use (not shown here).

This telematics architecture has evolved to handle ever increasing message rates (often reaching 2K messages per second), make up-to-the-minute information available to dispatchers, and feed offline analytics. Using a database, dispatchers can query raw telemetry to determine the information they need to manage the fleet in real time. This enables them to answer questions such as:

- “Where is truck 7563?”

- “How long has the driver been on the road?”

- “Which trucks have abnormally high oil temperature?”

Offline analytics can mine the telemetry for longer term statistics that help managers assess the fleet’s overall performance, such as the average length of delivery or routing delays, the fleet’s change in fuel efficiency, the number of drivers exceeding their allowed shift times, and the number and type of mechanical issues. These statistics help pinpoint areas where dispatchers and other personnel can make strategic improvements.

Challenges for Current Architectures

There are three key limitations in this telematics architecture which impact its ability to provide managers with the best possible situational awareness. First, incoming telemetry from trucks in the fleet arrives too fast to be analyzed immediately. The architecture collects messages in snapshots but leaves it to human dispatchers to digest this raw information by querying a database. What if the system could separately track incoming telemetry for each truck, look for changes based on contextual information, and then alert dispatchers when problems were identified? For example, the system could perform continuous predictive analytics on the engine’s parameters with knowledge of the engine’s maintenance history and signal if an impending failure was detected. Likewise, it could watch for hazardous driving with information about the driver’s record and medical condition. Having the system continuously introspect on the telemetry for each truck would enable the dispatcher to spot problems and intervene more quickly and effectively.

A second key limitation is the lack of real-time aggregate analysis. Since this analysis must be performed offline in batch jobs, it cannot respond to immediate issues and is restricted to assessing overall fleet performance. What if the real-time telemetry tracking for each truck could be aggregated within seconds to spot emerging issues that affect many trucks and require a strategic response? These issues could include:

- Unexpected delays in a region due to highway blockages or weather that indicate the need to inform or reroute several trucks

- An unusually large number of soon-to-be timed-out drivers or impending maintenance issues which require making immediate schedule changes to avoid downtime

- Congregated drivers who are impacting on-time deliveries

The current telematics architecture also has inherent scalability issues in the form of network bottlenecks. Because all telemetry is stored in the in-memory data grid and accessed by a separate farm of compute servers, the network between the grid and the server farm can quickly bottleneck as the incoming message rate increases. As the fleet size grows and the message rate per truck increases from once per minute to once per second, the telematics system may not be able to handle the additional incoming telemetry.

Solution: Real-Time Digital Twins

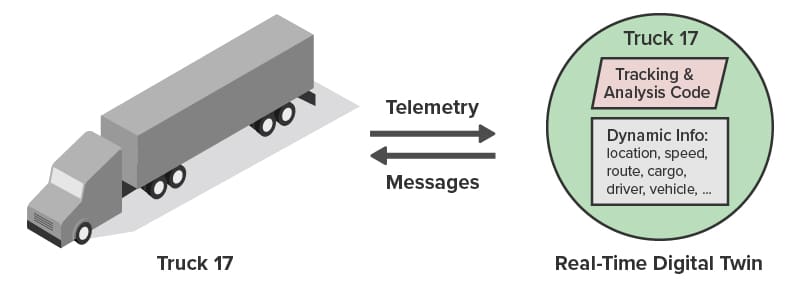

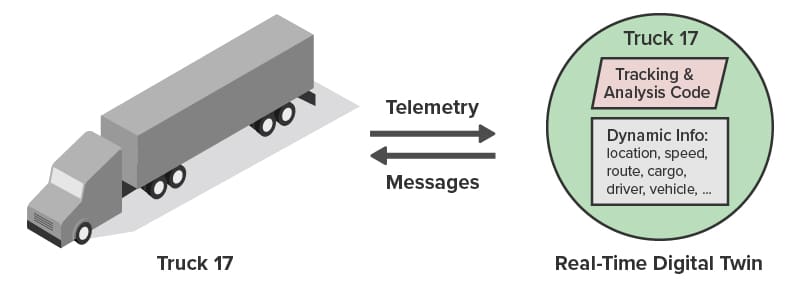

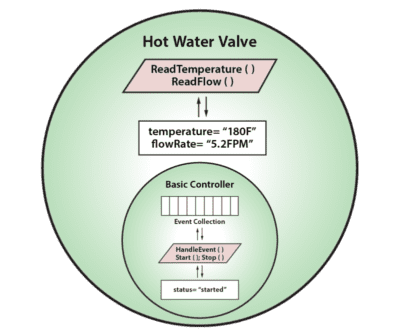

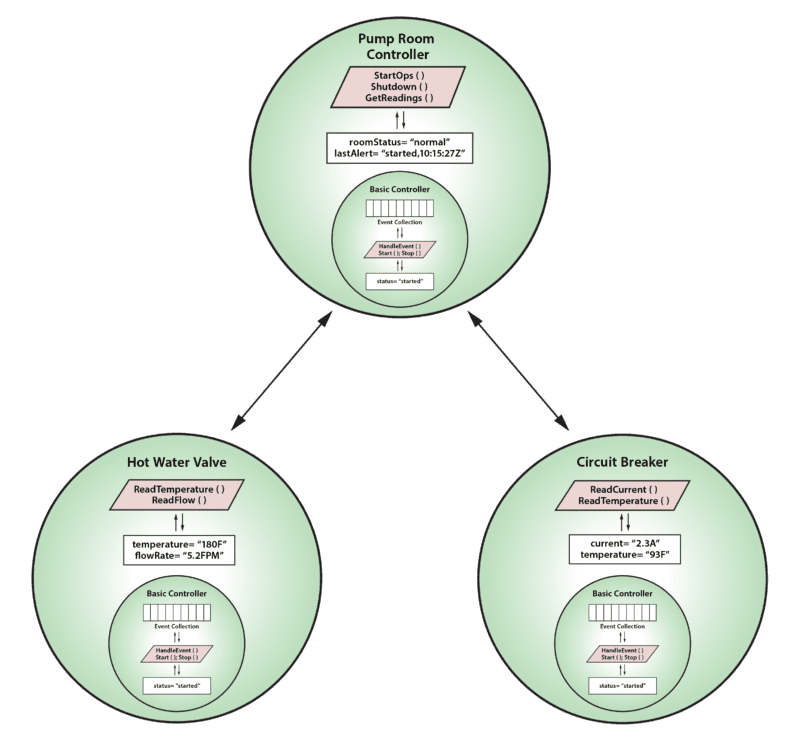

A new software architecture for streaming analytics based on the concept of real-time digital twins can address these challenges and add significant capabilities to telematics systems. This new, object-oriented software technique provides a memory-based orchestration framework for tracking and analyzing telemetry from each data source. It comprises message-processing code and state variables which host dynamically evolving contextual information about the data source. For example, the real-time digital twin for a truck could look like this:

Instead of just snapshotting incoming telemetry, real-time digital twins for every data source immediately analyze it, update their state information about the truck’s condition, and send out alerts or commands to the truck or to managers as necessary. For example, they can track engine telemetry with knowledge of the engine’s known issues and maintenance history. They can track position, speed, and acceleration with knowledge of the route, schedule, and driver (allowed time left, driving record, etc.). Message-processing code can incorporate a rules engine or machine learning to amplify their capabilities.

Real-time digital twins digest raw telemetry and enable intelligent alerting in the moment that assists both drivers and dispatchers in surfacing issues that need immediate attention. They are much easier to develop than typical streaming analytics applications, which have to sift through the telemetry from all data sources to pick out patterns of interest and which lack contextual information to guide them. Because they are implemented using in-memory computing techniques, real-time digital twins are fast (typically responding to messages in a few milliseconds) and transparently scalable to handle hundreds of thousands of data sources and message rates exceeding 100K messages/second.

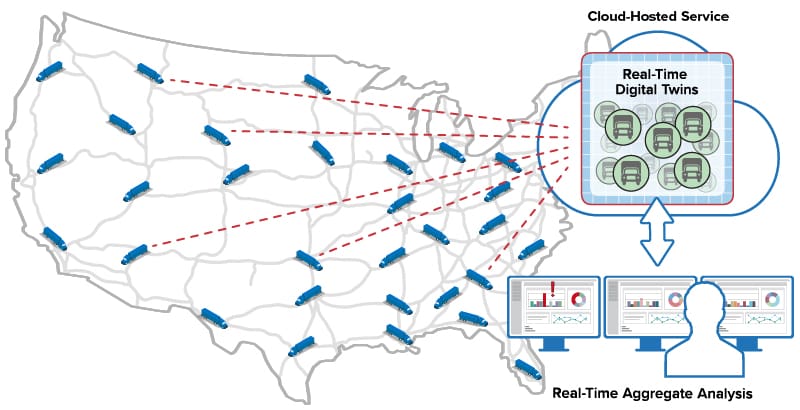

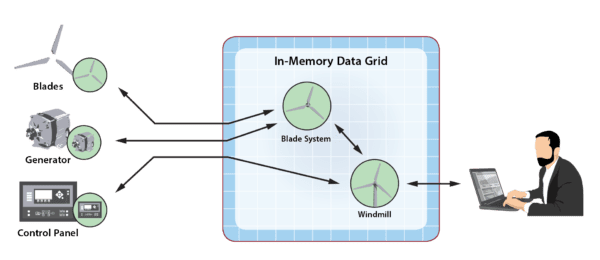

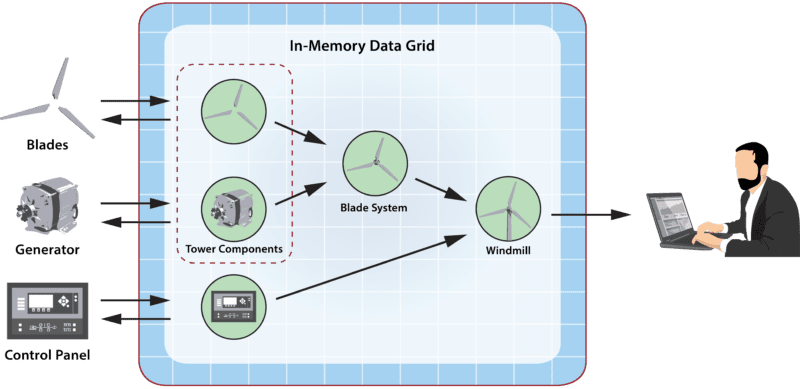

Here’s a depiction of real-time digital twins running within an in-memory data grid in a telematics architecture:

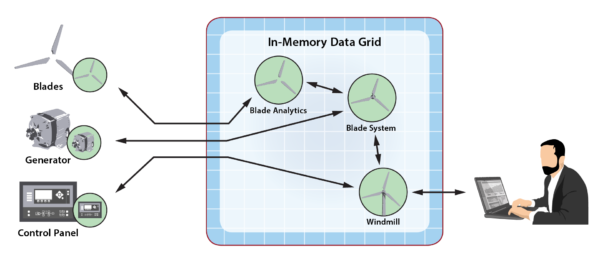

In addition to fitting within an overall architecture that includes database query and offline analytics, real-time digital twins enable built-in aggregate analytics and visualization. They provide curated state information derived from incoming telemetry that can be continuously aggregated and visualized to boost situational awareness for managers, as illustrated below. This opens up an important new opportunity to aggregate performance indicators needed in real time, such as emerging road delays by region or impending scheduling issues due to timed out drivers, that can be acted upon while new problems are still nascent. Real-time aggregate analytics add significant new capabilities to telematics systems.

Summing Up

While telematics systems currently provide a comprehensive feature set for managing fleets, they lack the important ability to track and analyze telemetry from each vehicle in real time and then aggregate derived information to maintain continuous situational awareness for the fleet. Real-time digital twins can address these shortcomings with a powerful, fast, easy to develop, and highly scalable software architecture. This new software technique has the potential to make a major impact on the telematics industry.

To learn more about real-time digital twins in action, take a look at ScaleOut Software’s streaming service for hosting real-time digital twins in the cloud or on-premises here.

The post Use Digital Twins for the Next Generation in Telematics appeared first on ScaleOut Software.

]]>The post ScaleOut Software Announces the Availability of ScaleOut GeoServer® Pro appeared first on ScaleOut Software.

]]>BELLEVUE, Wash – November 17, 2020 – ScaleOut Software today announced ScaleOut GeoServer® Pro, a new software product release that integrates site-to-site data replication with fully coherent data access for its battle-tested ScaleOut StateServer® in-memory data grid (IMDG) and distributed cache. This release extends the company’s ScaleOut GeoServer® DR product, which provides asynchronous, site-to-site data replication to protect against site-wide failures and currently is in production use.

For more than fifteen years, ScaleOut StateServer has set the standard for high performance reliability and industry-leading ease of use at hundreds of enterprise sites around the world. The product stores fast-changing data in a wide variety of applications, including ecommerce, financial services, online learning, airline reservations, gaming and much more.

“With the release of ScaleOut GeoServer Pro, we are excited to offer our customers breakthrough capabilities for multi-site storage of their fast-changing data,” said Dr. William L. Bain, founder and CEO of ScaleOut Software. “Now they can take advantage of our industry-leading technology that replicates data across sites to protect against data center failures while making fully coordinated use of the sites.”

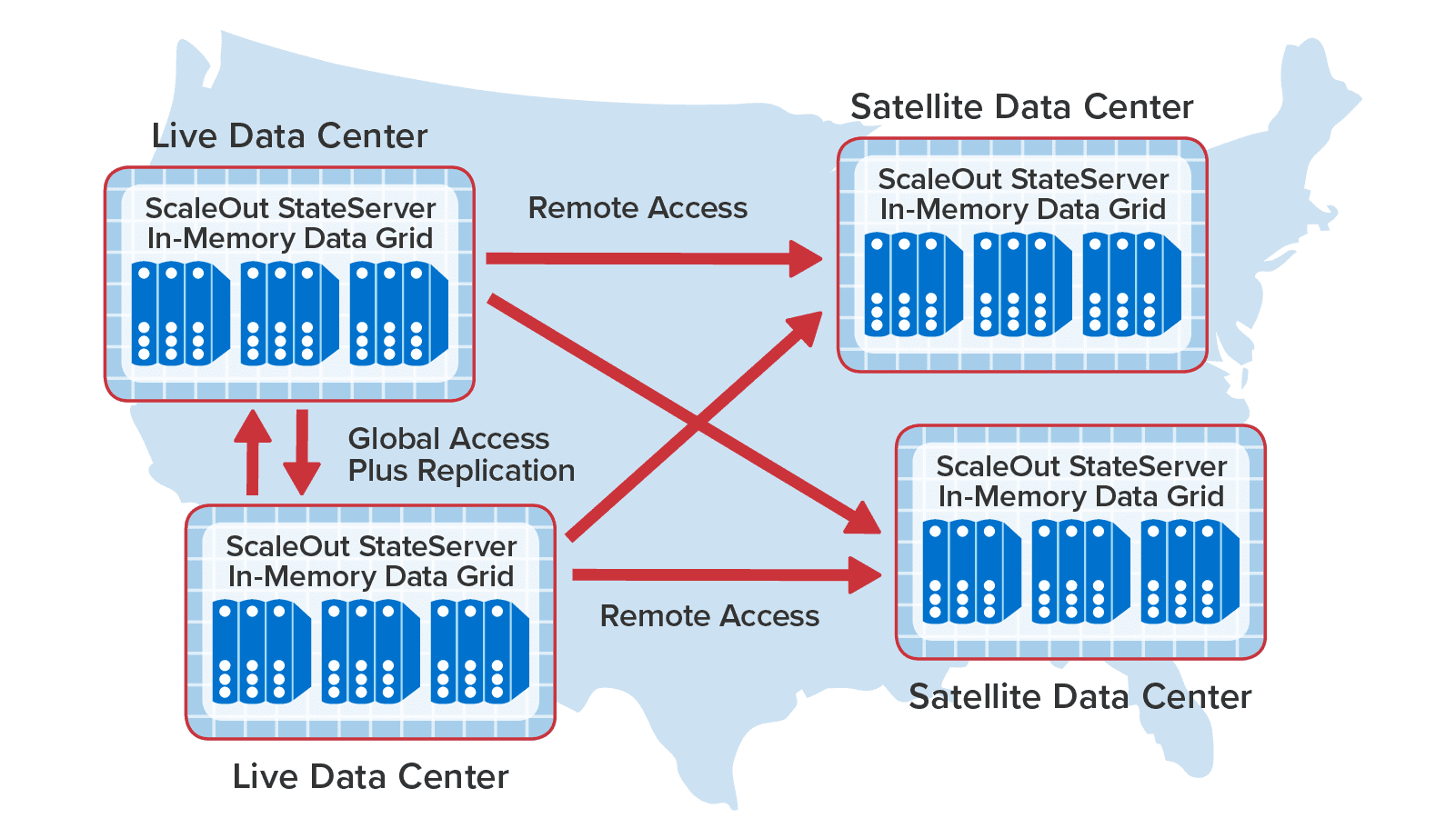

By harnessing ScaleOut GeoServer Pro, users can now take in-memory data storage and distributed caching to the next level with an integrated solution for disaster recovery and synchronized data access across multiple sites. This technology enables applications to both protect against site-wide failures and to maintain a consistent view of data stored at all data centers.

Key ScaleOut GeoServer Pro Benefits:

ScaleOut GeoServer Pro enables organizations to store, access and protect fast-changing data at multiple sites, while maintaining a consistent view of the data at all times. The technology ensures that critical data is always accessible and synchronized across locations.

- Transparent Replication Across Data Centers: Applications can automatically replicate all stored data across multiple data centers for continuous availability in case a data center fails. This includes replicating in-memory data across different cloud regions, while automatically coordinating access to data stored at these sites.

- Integrated, Synchronized Data Access: ScaleOut GeoServer Pro introduces optional, synchronized access to replicated data across multiple data centers. This enables applications that distribute workloads across data centers to maintain a straightforward, unified view of stored data. For example, web applications which use a global load balancer to share user data held in two data centers can now use both data centers in an “active-active” configuration.

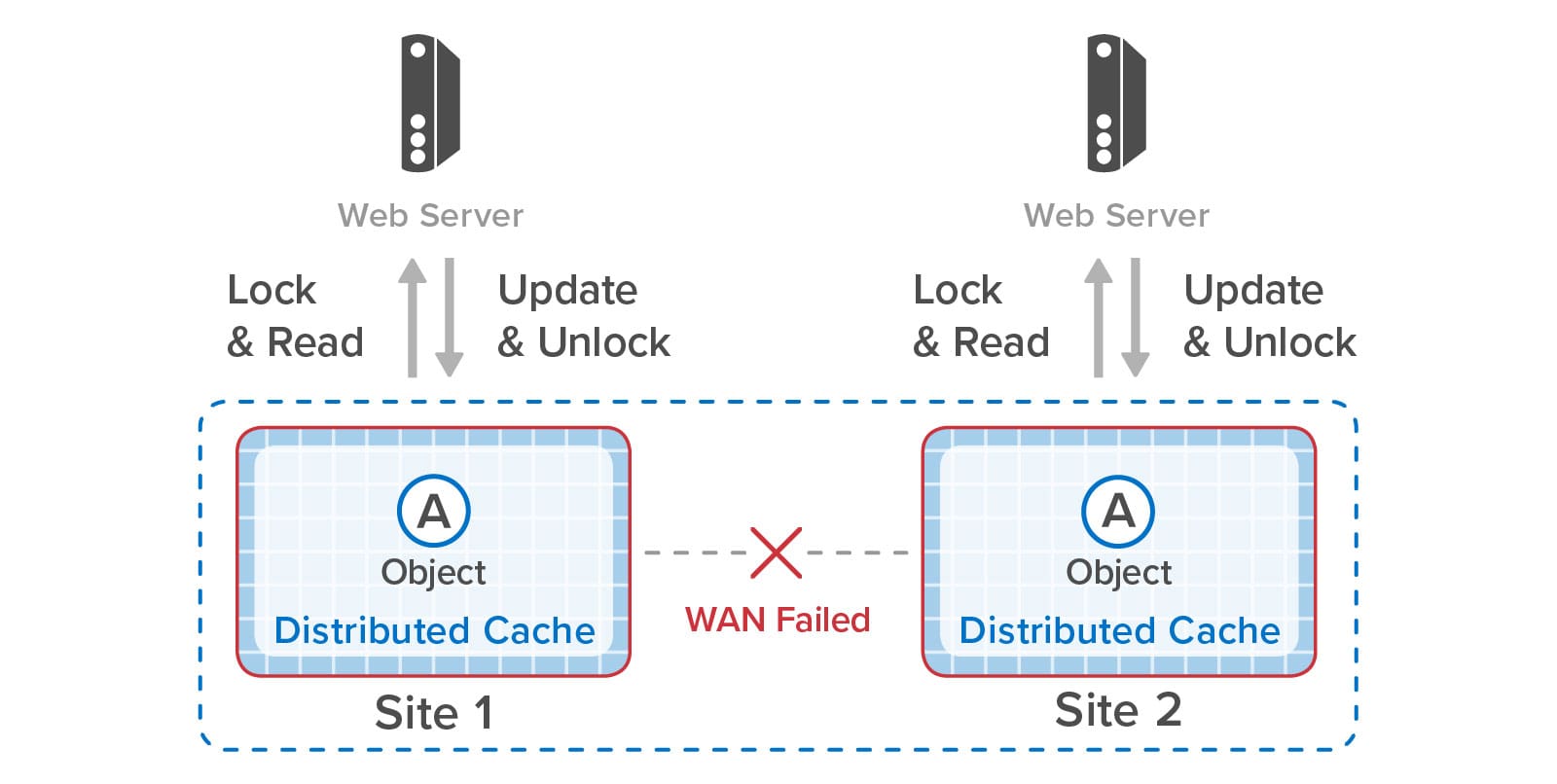

- Automatic Recovery from WAN Failures: In the event of a WAN failure between data centers, applications can independently access data at each data center. When WAN connectivity is re-established, ScaleOut GeoServer Pro automatically resolves inconsistencies in stored data due to duplicate updates during the WAN outage known as a “split-brain” condition.

- Maximize Application Performance: To maximize overall performance, ScaleOut GeoServer Pro’s caches offer flexible coherency policies so that applications can select the appropriate combination of coherency and access latency according to data usage. This avoids unnecessary WAN data usage and associated delays.

Additional Resources:

For more information about ScaleOut GeoServer Pro, please visit:

- ScaleOut GeoServer Pro announcement blog post

- ScaleOut GeoServer Pro product page

About ScaleOut Software

Founded in 2003, ScaleOut Software develops leading-edge software that delivers scalable, highly available, in-memory computing and streaming analytics technologies to a wide range of industries. ScaleOut Software’s in-memory computing platform enables operational intelligence by storing, updating, and analyzing fast-changing, live data so that businesses can capture perishable opportunities before the moment is lost. It has offices in Bellevue, Washington and Beaverton, Oregon.

For more information, please visit www.scaleoutsoftware.com and follow @scaleout_inc

###

Contact:

RH Strategic for ScaleOut Software

The post ScaleOut Software Announces the Availability of ScaleOut GeoServer® Pro appeared first on ScaleOut Software.

]]>The post Combine Data Replication Across Sites with Synchronized Access appeared first on ScaleOut Software.

]]>

Web applications, such as ecommerce sites and financial services, often need to replicate fast-changing, in-memory data across multiple data centers or cloud regions. As part of an overall strategy for disaster recovery, cross-site data replication ensures that mission-critical data is continuously available, even if one site goes offline.

Many applications need to use two (and sometimes more) sites in an “active-active” manner, distributing the workload across the sites. Here are some real-world applications we have seen. Ecommerce applications need to maintain shopping carts at multiple sites and distribute the workload from their shoppers with a global load-balancer. Cell phone providers need to keep their lists of available mobile numbers consistent across sites as individual stores allocate them. Conference-management companies need to keep attendee lists and schedules consistent at conference sites and their central data center.

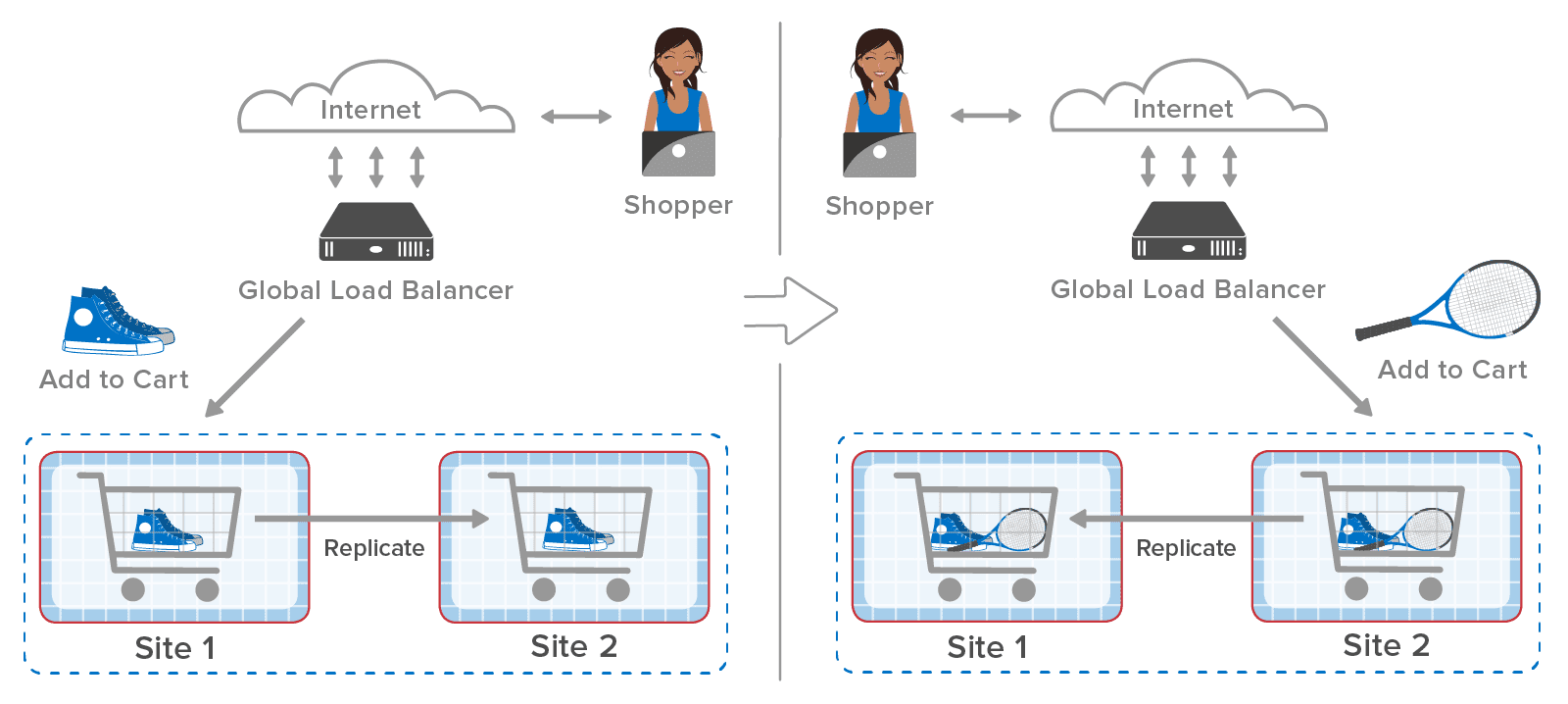

Let’s take a closer look at an ecommerce site using a global load-balancer to distribute incoming web requests to multiple sites. This approach lets the web application take advantage of the processing power at multiple sites during normal operations. However, it creates the challenge of coordinating access to in-memory objects which are replicated across two or more sites. This can add substantial complexity if handled by the application.

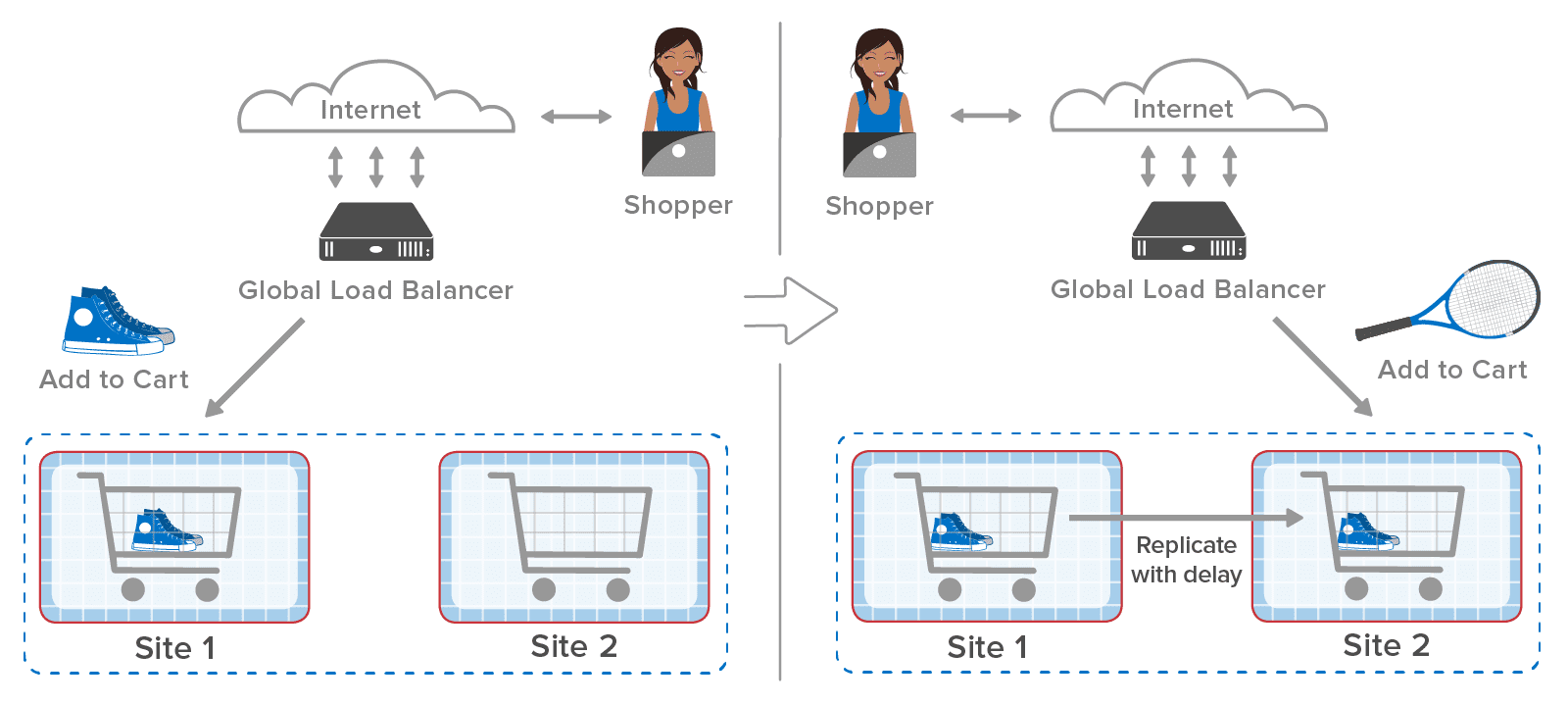

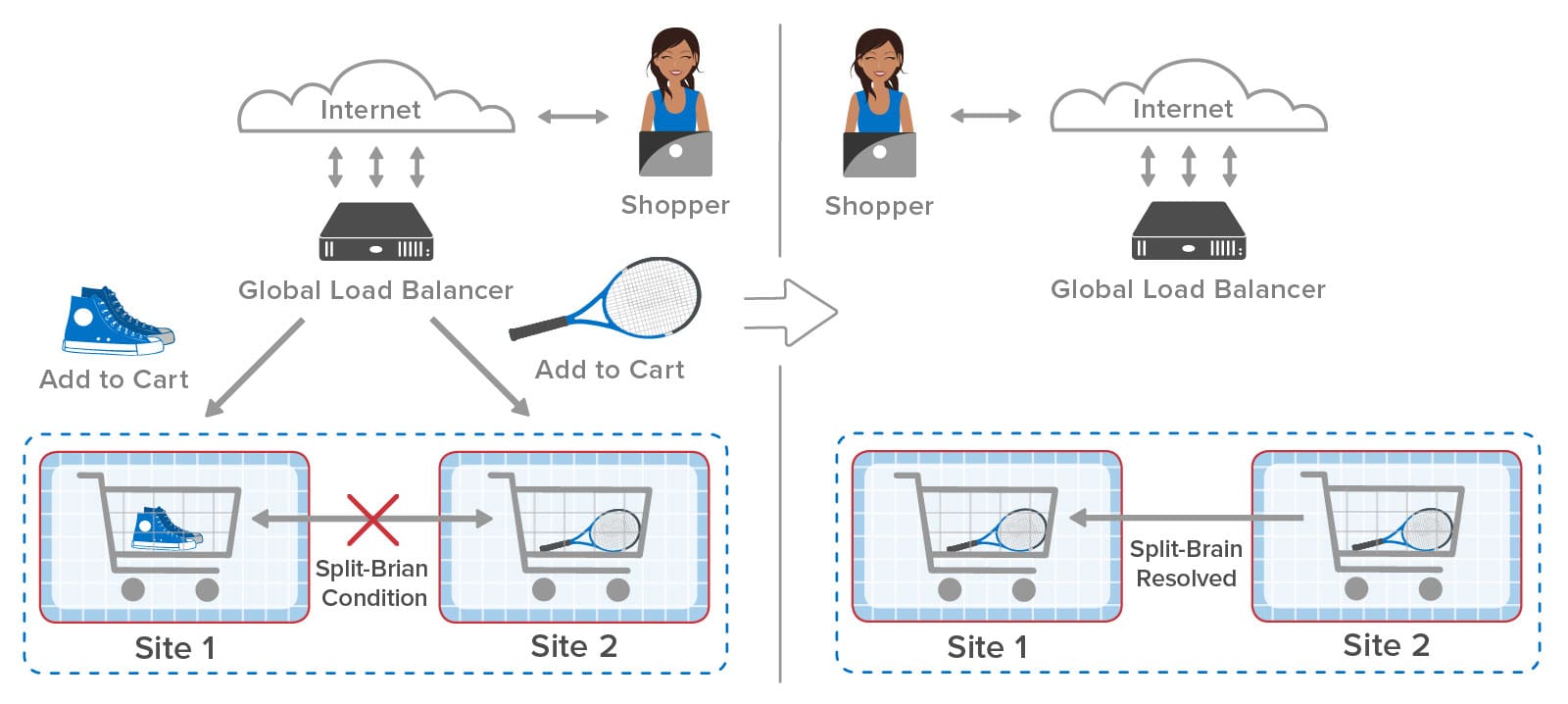

Here’s an example of an ecommerce site using a global load-balancer to distribute incoming web requests across two sites, each of which hosts shopping carts within an in-memory data grid (also called a distributed cache), such as ScaleOut StateServer®. A web shopper might select a pair of shoes and place them in the shopping cart followed by selecting a tennis racket. As shown in the following diagram, the global load-balancer sends the first request to site 1 and the second request to site 2 in this example:

After the first request completes, the in-memory data grid at site 1 replicates the cart to site 2. The global load-balancer then sends the second request to site 2, which adds the tennis racket to the cart. Finally, site 2 replicates the changes back to site 1 so that both sites have the latest copy of the shopping cart.

What happens if replication from site 1 to site 2 is slightly delayed? After site 2 puts the tennis racket in the cart, the incoming replicated update arrives and overwrites the cart. This causes both sites to lose the update at site 2, and the shopper will undoubtedly be annoyed to find that the tennis racket is missing from the cart:

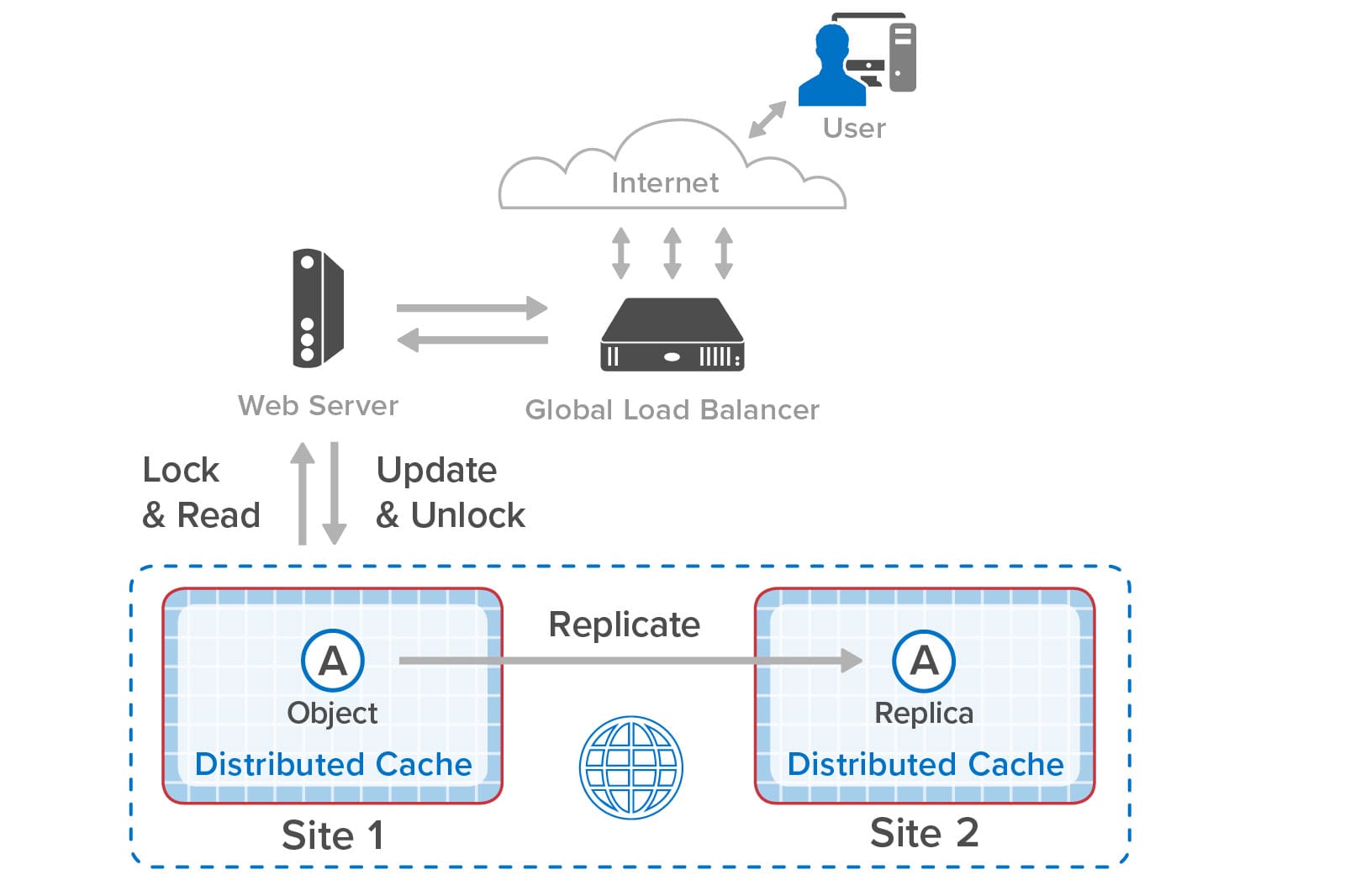

The solution to this problem is to have the web applications at both sites synchronize updates to the shopping carts. This ensures that only one site at a time updates the shopping cart and that each site always sees the latest version of the in-memory object. Using ScaleOut GeoServer Pro, applications can use standard object-locking APIs for this purpose, just as they would to coordinate object access within a single in-memory data grid:

After the web application on site 1 updates and unlocks object A (the shopping cart in our example), site 1 replicates the update to site 2. When the global load-balancer sends the next request to site 2, the web application on that site 2 also locks and reads the object, updates it, and then unlocks it:

When the object is locked on site 2, ScaleOut GeoServer Pro makes sure that the application sees the latest version of the object. It does so by migrating ownership of the object to site 2 and checking that it has the latest version. Although this requires a round trip to site 1, once a site gains ownership, all further accesses are local until the other site again attempts to lock the object and request ownership. If the global load-balancer avoids ping-ponging between sites with every web request, the latency to lock an object remains low.

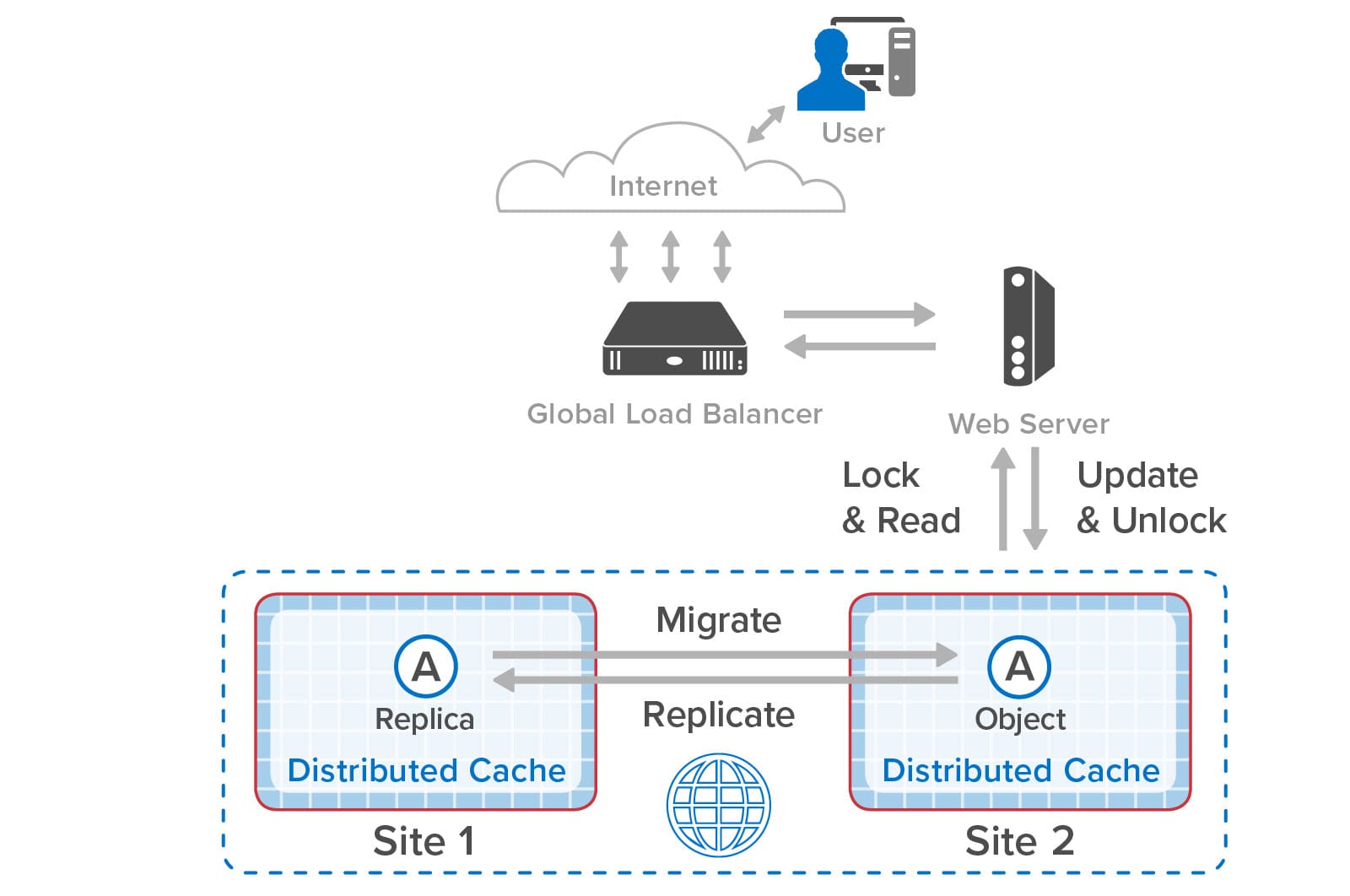

Should the wide area network (WAN) connecting the two sites fail, or if the remote site goes offline, the two sites can operate independently; this is called “split brain” mode in distributed systems. They detect the WAN failure and automatically promote local replica objects as needed to gain ownership when requesting a lock. This enables uninterrupted operations that make use of object replicas held at each site. By combining object replication with synchronized access, applications enjoy the full benefits of synchronized object access across sites during normal operations and uninterrupted access during WAN or site outages:

A key challenge created by split-brain mode is how to restore normal operations after an outage has been corrected. For example, the following diagram shows the two sites in our shopping example operating independently during a WAN outage that occurs between the two web requests. Site 1 adds the shoes to its shopping cart but is unable to replicate that update to site 2. The web application on site 2 then places the tennis racket in its shopping cart:

After the WAN is restored, the two sites have to resolve the differences in the contents of their copies of stored objects. Unless the application uses special, conflict-free data types that can be merged (and this is rare for most applications), a heuristic needs to be used to resolve conflicts. ScaleOut GeoServer Pro automatically resolves conflicts for each pair of object copies by selecting the copy with the latest update time or randomly picking one of the copies if the update times are the same. So in this case, both sites are updated with the version of the shopping cart holding the tennis racket. (This will be another source of annoyance for our shopper, but at least the ecommerce site survived a WAN outage without interruption.)

ScaleOut GeoServer Pro resolves split-brain conflicts as it detects them when updates are performed and then are successfully replicated across the WAN. It also has to resolve the fact that both sites now think they own the same object, and it handles this by randomly picking a site to retain ownership. As the two sites attempt to lock and read the object, ownership will then automatically migrate to the site where it’s needed.

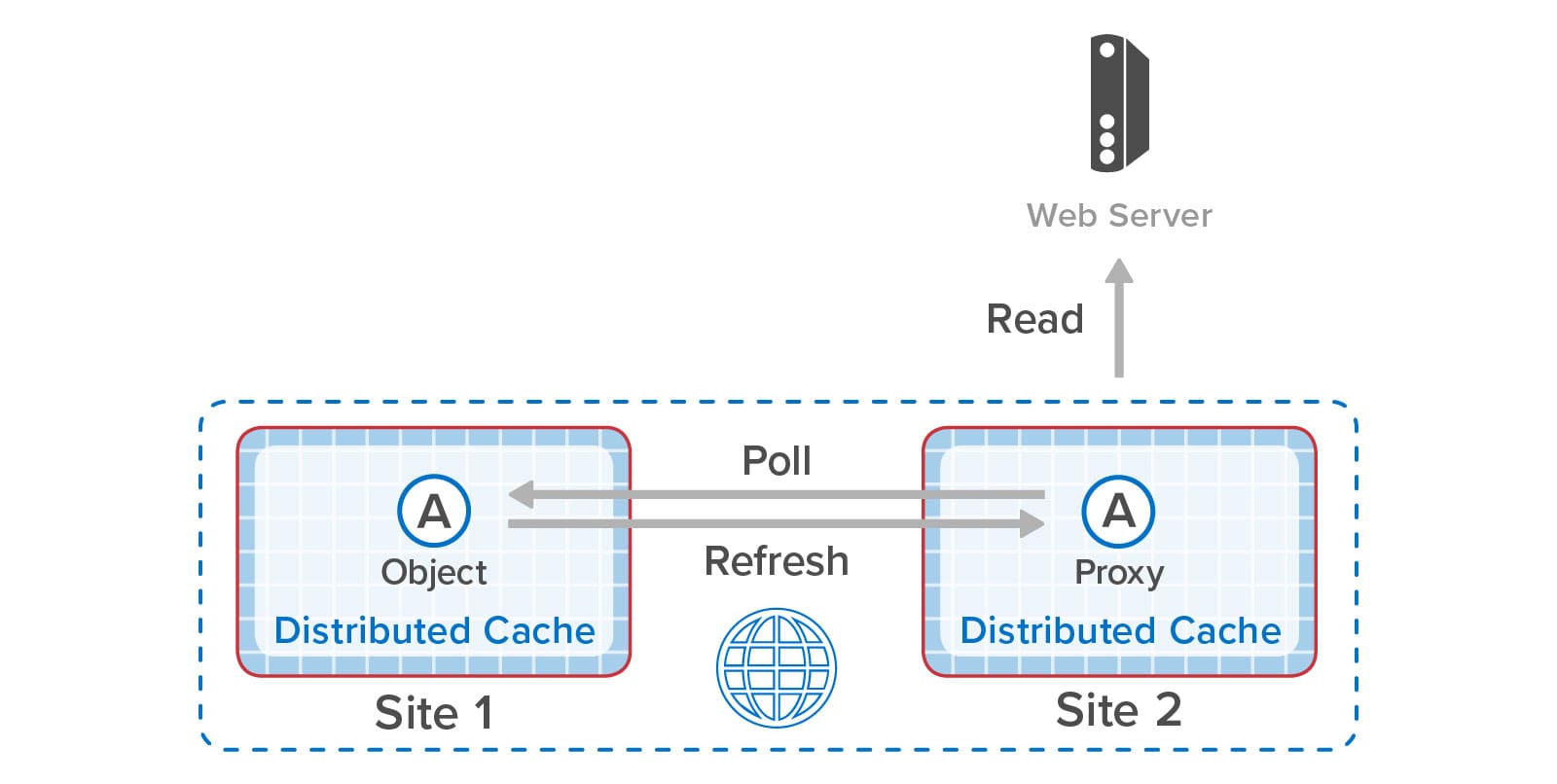

One more key benefit of ScaleOut GeoServer Pro is that it lets applications efficiently access objects that have slowly changing contents (such as product descriptions, schedules, and portfolio lists) without making repeated WAN accesses. Sites that are configured for bi-directional replication have immediate access to replicas when just reading but not updating remote objects. Other sites can be configured to maintain local copies of remote objects (called “proxies”) that can periodically poll for updates using a configurable timeout. This minimizes WAN accesses while allowing applications to track changes in objects stored at remote sites.

To illustrate how all of these features can work together, the following diagram shows two sites on the west coast of the U.S. configured for bi-directional replication and synchronized access along with additional “satellite” sites in other states that are periodically polling to read data held in the “live” data centers:

With its advanced capabilities for combining data replication with synchronized access, ScaleOut GeoServer Pro takes a leadership position among commercial in-memory data grids by enabling applications to seamlessly access and update objects replicated across data centers. This solves a long-standing challenge for applications that actively maintain mission-critical data at multiple sites and further extends the power of in-memory data grids to manage fast-changing business data.

The post Combine Data Replication Across Sites with Synchronized Access appeared first on ScaleOut Software.

]]>The post Founder & CEO William Bain Discusses Real-Time Digital Twins with TechStrong TV appeared first on ScaleOut Software.

]]>Watch the video here.

The post Founder & CEO William Bain Discusses Real-Time Digital Twins with TechStrong TV appeared first on ScaleOut Software.

]]>The post Why Use “Real-Time Digital Twins” for Streaming Analytics? appeared first on ScaleOut Software.

]]>

What Problems Does Streaming Analytics Solve?

To understand why we need real-time digital twins for streaming analytics, we first need to look at what problems are tackled by popular streaming platforms. Most if not all platforms focus on mining the data within an incoming message stream for patterns of interest. For example, consider a web-based ad-serving platform that selects ads for users and logs messages containing a timestamp, user ID, and ad ID every time an ad is displayed. A streaming analytics platform might count all the ads for each unique ad ID in the latest five-minute window and repeat this every minute to give a running indication of which ads are trending.

Based on technology from the Trill research project, the Microsoft Stream Analytics platform offers an elegant and powerful platform for implementing applications like this. It views the incoming stream of messages as a columnar database with the column representing a time-ordered history of messages. It then lets users create SQL-like queries with extensions for time-windowing to perform data selection and aggregation within a time window, and it does this at high speed to keep up with incoming data streams.

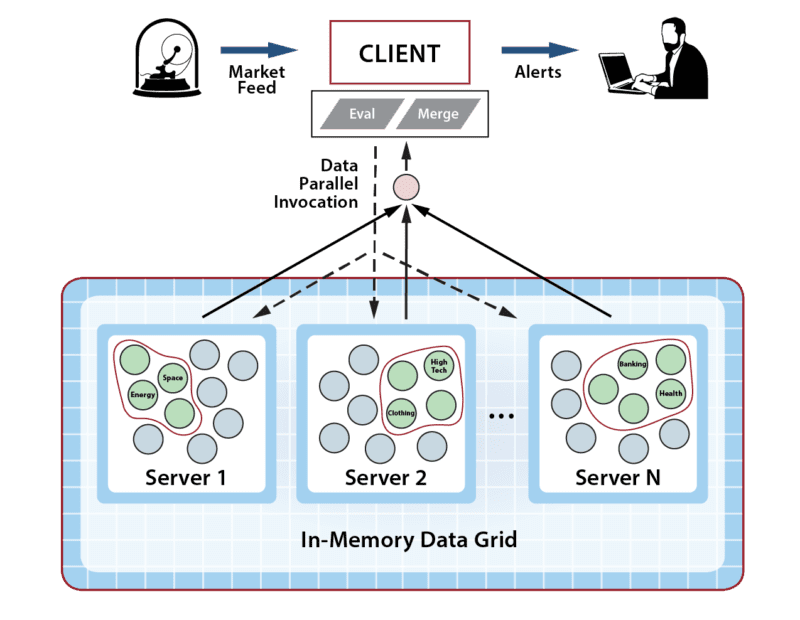

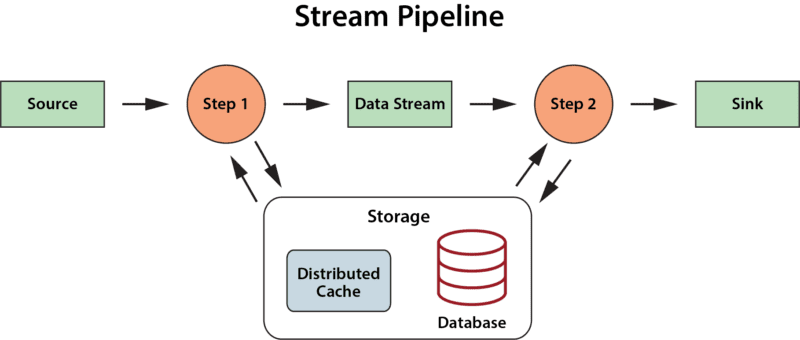

Other streaming analytic platforms, such as open-source Apache Storm, Flink, and Beam and commercial implementations such as Hazelcast Jet, let applications pass an incoming data stream through a pipeline (or directed graph) of processing steps to extract information of interest, aggregate it over time windows, and create alerts when specific conditions are met. For example, these execution pipelines could process a stock market feed to compute the average stock price for all equities over the previous hour and trigger an alert if an equity moves up or down by a certain percentage. Another application tracking telemetry from gas meters could likewise trigger an alert if any meter’s flow rate deviates from its expected value, which might indicate a leak.

What’s key about these stream-processing applications is that they focus on examining and aggregating properties of data communicated in the stream. Other than by observing data in the stream, they do not track the dynamic state of the data sources themselves, and they don’t make inferences about the behavior of the data sources, either individually or in aggregate. So, the streaming analytics platform for the ad server doesn’t know why each user was served certain ads, and the market-tracking application does not know why each equity either maintained its stock price or deviated materially from it. Without knowing the why, it’s much harder to take the most effective action when an interesting situation develops. That’s where real-time digital twins can help.

The Need for Real-Time Digital Twins

Real-time digital twins shift the application’s focus from the incoming data stream to the dynamically evolving state of the data sources. For each individual data source, they let the application incorporate dynamic information about that data source in the analysis of incoming messages, and the application can also update this state over time. The net effect is that the application can develop a significantly deeper understanding about the data source so that it can take effective action when needed. This cannot be achieved by just looking at data within the incoming message stream.

For example, the ad-serving application can use a real-time digital twin for each user to track shopping history and preferences, measure the effectiveness of ads, and guide ad selection. The stock market application can use a real-time digital twin for each company to track financial information, executive changes, and news releases that explain why its stock price starts moving and filter out trades that don’t fit desired criteria.

Also, because real-time digital twins maintain dynamic information about each data source, applications can aggregate this highly curated data instead of just aggregating data in the data stream. This gives users deeper insights into the overall state of all data sources and boosts “situational awareness” that is hard to maintain by just looking at the message stream.

An Example

Consider a trucking fleet that manages thousands of long-haul trucks on routes throughout the U.S. Each truck periodically sends telemetry messages about its location, speed, engine parameters, and cargo status (for example, trailer temperature) to a real-time monitoring application at a central location. With traditional streaming analytics, personnel can detect changes in these parameters, but they can’t assess their significance to take effective, individualized action for each truck. Is a truck stopped because it’s at a rest stop or because it has stalled? Is an out-of-spec engine parameter expected because the engine is scheduled for service or does it indicate that a new issue is emerging? Has the driver been on the road too long? Does the driver appear to be lost or entering a potentially hazardous area?

The use of real-time digital twins provides the context needed for the application to answer these questions as it analyzes incoming messages from each truck. For example, it can keep track of the truck’s route, schedule, cargo, mechanical and service history, and information about the driver. Using this information, it can alert drivers to impending problems, such as road blockages, delays or emerging mechanical issues. It can assist lost drivers, alert them to erratic driving or the need for rest stops, and help when changing conditions require route updates.

The following diagram shows a truck communicating with its associated real-time digital twin. (The parallelogram represents application code.) Because the twin holds unique contextual data for each truck, analysis code for incoming messages can provide highly focused feedback that goes well beyond what is possible with traditional streaming analytics:

As illustrated below, the ScaleOut Digital Twin Streaming Service runs as a cloud-hosted service in the Microsoft Azure cloud to provide streaming analytics using real-time digital twins. It can exchange messages with thousands of trucks across the U.S., maintain a real-time digital twin for each truck, and direct messages from that truck to its corresponding twin. It simplifies application code, which only needs to process messages from a given truck and has immediate access to dynamic, contextual information that enhances the analysis. The result is better feedback to drivers and enhanced overall situational awareness for the fleet.

runs as a cloud-hosted service in the Microsoft Azure cloud to provide streaming analytics using real-time digital twins. It can exchange messages with thousands of trucks across the U.S., maintain a real-time digital twin for each truck, and direct messages from that truck to its corresponding twin. It simplifies application code, which only needs to process messages from a given truck and has immediate access to dynamic, contextual information that enhances the analysis. The result is better feedback to drivers and enhanced overall situational awareness for the fleet.

Lower Complexity and Higher Performance

While the functionality implemented by real-time digital twins can be replicated with ad hoc solutions that combine application servers, databases, offline analytics, and visualization, they would require vastly more code, a diverse combination of skill sets, and longer development cycles. They also would encounter performance bottlenecks that require careful analysis to measure and resolve. The real-time digital twin model running on ScaleOut Software’s integrated execution platform sidesteps these obstacles.

Scaling performance to maintain high throughput creates an interesting challenge for traditional streaming analytics platforms because the work performed by their task pipelines does not naturally map to a set of processing cores within multiple servers. Each pipeline stage must be accelerated with parallel execution, and some stages require longer processing time than others, creating bottlenecks in the pipeline.

In contrast, real-time digital twins naturally create a uniformly large set of tasks that can be evenly distributed across servers. To minimize network overhead, this mapping follows the distribution of in-memory objects within ScaleOut’s in-memory data grid, which holds the state information for each twin. This enables the processing of real-time digital twins to scale transparently without adding complexity to either applications or the platform.

Summing Up

Why use real-time digital twins? They solve an important challenge for streaming analytics that is not addressed by other, “pipeline-oriented” platforms, namely, to simultaneously track the state of thousands of data sources. They use contextual information unique to each data source to help interpret incoming messages, analyze their importance, and generate feedback and alerts tailored to that data source.

Traditional streaming analytics finds patterns and trends in the data stream. Real-time digital twins identify and react to important state changes in the data sources themselves. As a result, applications can achieve better situational awareness than previously possible. This new way of implementing streaming analytics can be used in a wide range of applications. We invite you to take a closer look.

The post Why Use “Real-Time Digital Twins” for Streaming Analytics? appeared first on ScaleOut Software.

]]>The post The Power of Integrated Analytics Within an IMDG appeared first on ScaleOut Software.

]]>

In-Memory Data Grids for Fast-Changing Data

For more than fifteen years, ScaleOut StateServer® has demonstrated technology leadership as an in-memory data grid (IMDG) and distributed cache. Designed to help scalable applications deliver high performance, it stores live, fast-changing data in memory (DRAM) for fast updates and retrieval. By transparently distributing stored objects across a cluster of servers (physical or virtual), it automatically scales performance for fast-growing workloads and maintains consistently low access latency. Typical uses include storing session-state and ecommerce shopping carts, product descriptions, airline reservations, financial portfolios, news stories, online learning data, and many others.

From its inception, the design philosophy behind ScaleOut StateServer has been to simultaneously maximize both performance and ease of use. Because IMDGs have complex internal mechanisms, they need to automate them as much as possible so that the developer can just focus on application concerns and not on the inner workings of the IMDG. For this reason, the product incorporates features such as automatic discovery of servers, transparent load-balancing when servers are added to the cluster or removed, automatic data replication for high availability with transparent placement of replicas, quorum-based updating of replicas to ensure consistency, integrated client libraries, and coherent client-side caching. The net effect is that applications maintain a straightforward view of the IMDG as a unified key/value store for serialized application objects.

The Challenges with Parallel Queries

Although IMDGs are optimized for key-based access, applications often need to retrieve groups of objects with matching properties. For example, if an application is storing shopping carts, it might be useful to find all shopping carts with a total value that exceeds a specified threshold so that these shoppers can be given special attention. To this end, ScaleOut StateServer incorporates a property-based, distributed query API that returns a collection of matching objects. To simplify development for .NET applications, it uses Microsoft’s language integrated query (LINQ) to specify queries. (Java applications use a similar mechanism.)

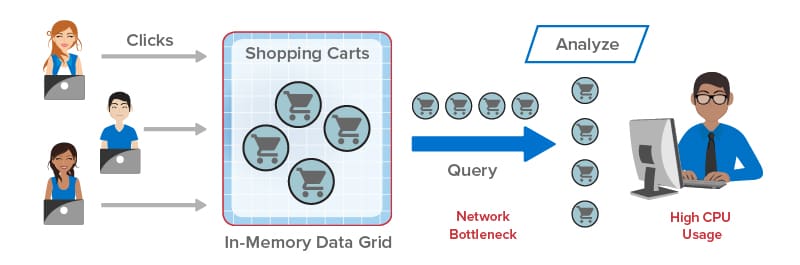

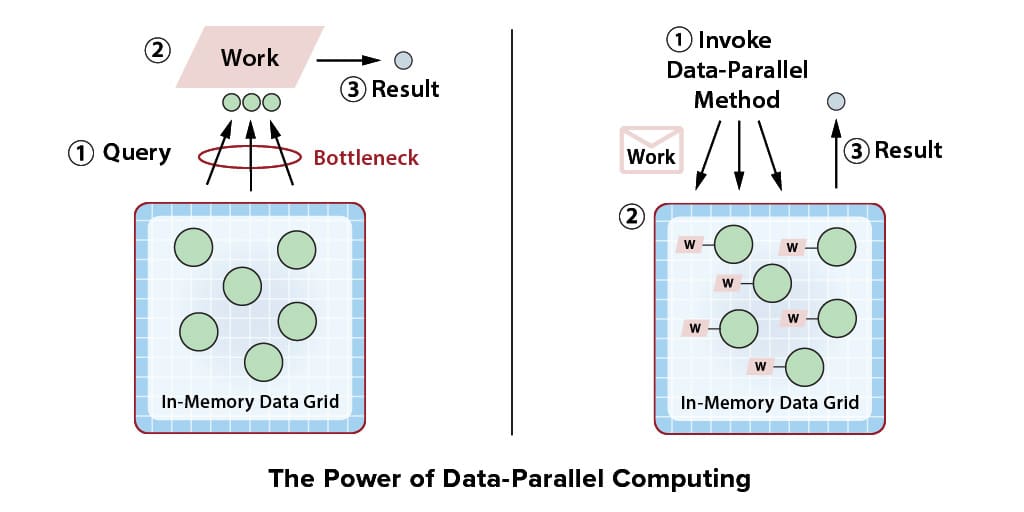

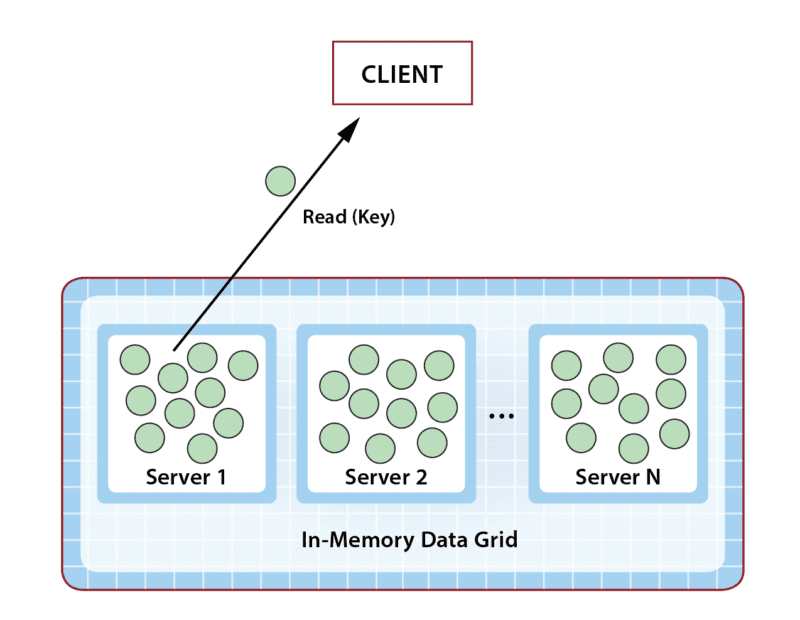

Queries in an IMDG can create interesting performance challenges. Because IMDGs have highly scalable storage capacity, they can easily return large numbers of matching objects to the client application, and this leads to network bottlenecks transferring large amounts of data from the IMDG back to the client. Once all objects are delivered, the client is then faced with the task of analyzing potentially huge numbers of objects. This can saturate the client’s CPU and delay responses, as illustrated in the following diagram:

ScaleOut StateServer Pro: Integrated Data Analytics

To address these challenges, ScaleOut Software has introduced ScaleOut StateServer Pro, an advanced version of ScaleOut StateServer that integrates data analytics within the IMDG. Instead of querying objects from the IMDG and analyzing them in the client, applications can now simply run this analysis within the IMDG itself using APIs available in ScaleOut StateServer Pro. Because all the work is performed with the IMDG, this has the two-fold advantage of offloading both the network and the client’s CPU. It also transparently makes use of the IMDG’s scalable computing resources to accelerate the analysis.

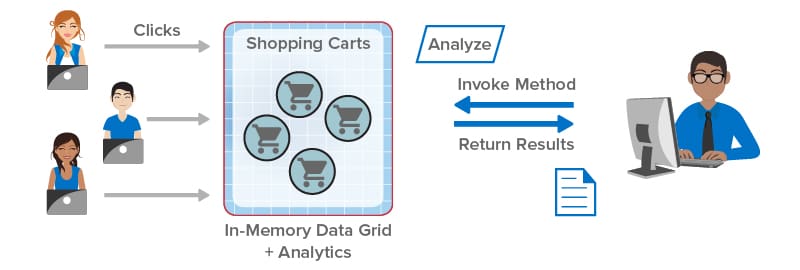

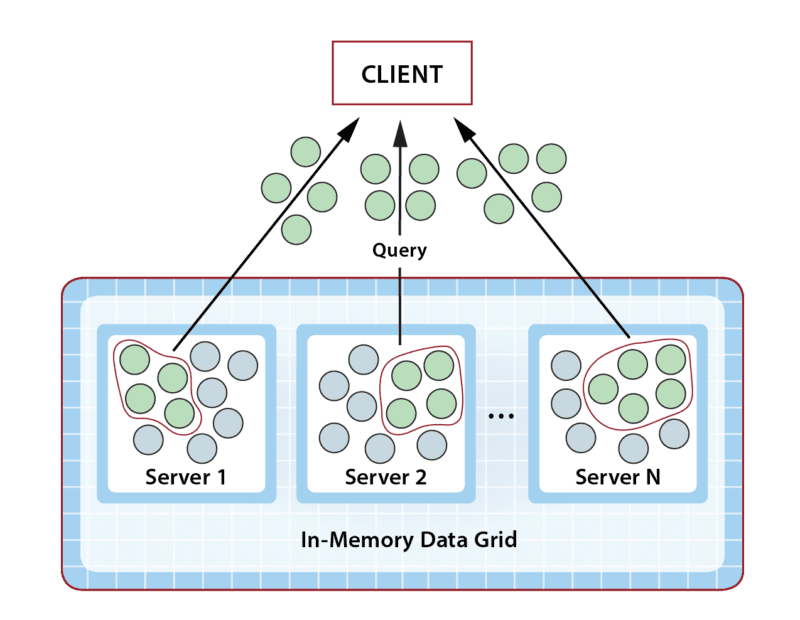

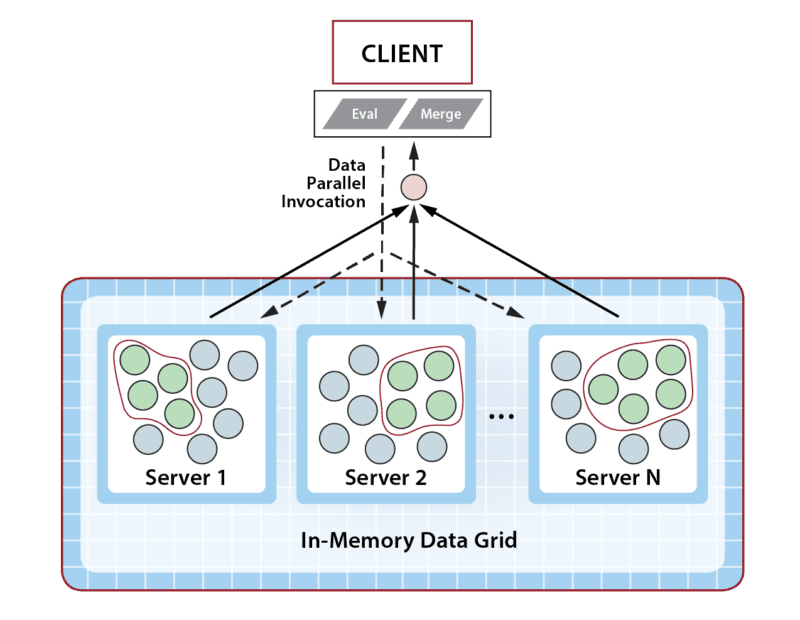

Take a look at how integrated data analytics can help client applications. In the following illustration, the client library sends the application’s analysis method (“Analyze”) to the IMDG for execution in parallel on all shopping carts selected by a query. The results are combined within the IMDG and returned to the application:

Keeping with the design philosophy of maximizing both performance and ease of use, ScaleOut StateServer Pro lets developers easily construct data analytics by specifying an object-oriented method that analyzes each matching object selected by a query and a second method for combining the results. In .NET applications, this data-parallel execution structure can be described using a distributed version of Microsoft’s popular Parallel.ForEach API, which ScaleOut StateServer Pro integrates with LINQ query. Application code is automatically shipped by the client library to the IMDG for execution and runs fully in parallel across all servers for maximum performance.

Consider the above example of querying shopping carts exceeding a threshold value. Suppose the application’s goal is to periodically analyze high value shopping carts to make upsell offers based on the contents of each cart. Instead of querying the IMDG and returning thousands of shopping carts to the client, the application can implement a method which analyzes these carts within the IMDG to determine which carts should receive upsell offers (and possibly determine which upsell offers to make). This analysis runs in parallel within the IMDG and then returns its results to the client for further action. This dramatically reduces the workload on the network and client, and it ensures consistently high performance.

Summing Up: Extracting Maximum Value from an IMDG

Since their inception, IMDGs have to a large extent been underutilized by viewing them as passive key/value stores. Because they are actually designed as a data-parallel execution platform, they can do much more than just store and serve memory-hosted, live data. They also can perform analysis quickly and efficiently — where the data lives.

Taking full advantage of this powerful capability requires just a shift in thinking about where application work should be performed. In many cases, it’s a much better choice to analyze data within the IMDG instead of transferring it to the client for analysis. ScaleOut StateServer Pro makes it easy to do just that, and it delivers fast, scalable performance. Now developers can finally extract full value from their IMDGs.

The post The Power of Integrated Analytics Within an IMDG appeared first on ScaleOut Software.

]]>The post The Amazing Evolution of In-Memory Computing appeared first on ScaleOut Software.

]]>

Going back to the mid-1990s, online systems have seen relentless, explosive growth in usage, driven by ecommerce, mobile applications, and more recently, IoT. The pace of these changes has made it challenging for server-based infrastructures to manage fast-growing populations of users and data sources while maintaining fast response times. For more than two decades, the answer to this challenge has proven to be a technology called in-memory computing.

In general terms, in-memory computing refers to the related concepts of (a) storing fast-changing data in primary memory instead of in secondary storage and (b) employing scalable computing techniques to distribute a workload across a cluster of servers. Assuming bottlenecks are avoided, this enables transparent throughput scaling that matches an increase in workload, which in turn keeps response times low for users. It can also take advantage of the elastic computing resources available in cloud infrastructures to quickly and cost-effectively scale throughput to meet changes in demand.

Harnessing the power of in-memory computing requires software platforms that can make in-memory computing’s scalability readily available to applications using APIs while hiding the complexity of its implementation. Emerging in the early 2000s, the first such platforms provided distributed caching on clustered servers with straightforward APIs for storing and retrieving in-memory objects. When first introduced, distributed caching offered a breakthrough for applications by storing fast-changing data in memory on a server cluster for consistently fast response times, while simultaneously offloading database servers that would otherwise become bottlenecked. For example, ecommerce applications adopted distributed caching to store session-state, shopping carts, product descriptions, and other data that shoppers need to be able to access quickly.

Software platforms for distributed caching, such as ScaleOut StateServer®, which was introduced in 2005, hide internal mechanisms for cluster membership, throughput scaling, and high availability to take full advantage of the cluster’s scalable memory without adding complexity to applications. They transparently distribute stored objects across the cluster’s servers and ensure that data is not lost if a server or network component fails.

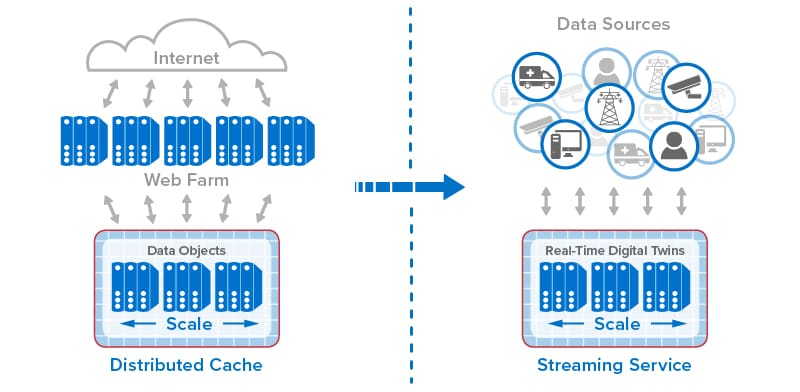

As distributed caching has evolved over the last two decades, additional mechanisms for in-memory computing have been incorporated to take advantage of the computing power available in the server cluster. Parallel query enables stored objects on all servers to be scanned simultaneously to retrieve objects with desired properties. Data-parallel computing analyzes objects on the server cluster to extract and report patterns of interest; it scales much better than parallel query by avoiding network bottlenecks and by using the cluster’s computing resources.

Most recently, stream-processing has been implemented with in-memory computing to simultaneously analyze telemetry from thousands or even millions of data sources and track dynamic state information for each data source. ScaleOut Software’s real-time digital twin model provides straightforward APIs for implementing stream-processing applications within its ScaleOut Digital Twin Streaming Service , an Azure-based cloud service, while hiding internal mechanisms, such as distributing incoming messages to in-memory objects, updating state information for each data source, and running aggregate analytics.

, an Azure-based cloud service, while hiding internal mechanisms, such as distributing incoming messages to in-memory objects, updating state information for each data source, and running aggregate analytics.

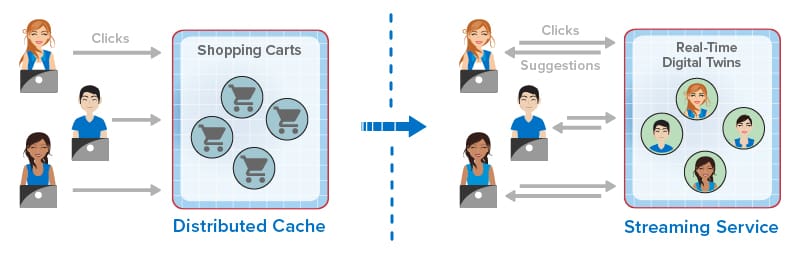

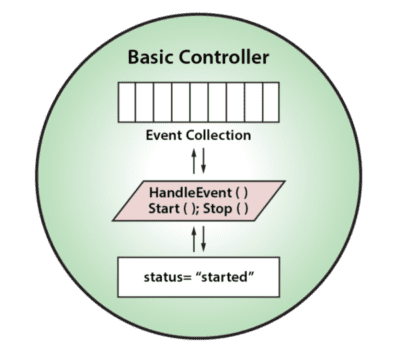

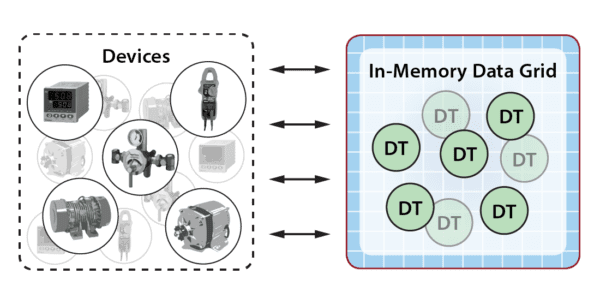

The following diagram shows the evolution of in-memory computing from distributed caching to stream-processing with real-time digital twins. Each step in the evolution has built on the previous one to add new capabilities that take advantage of the scalable computing power and fast data access that in-memory computing enables.