The post ScaleOut Software Expands Digital Twins Potential with Version 3 Release appeared first on ScaleOut Software.

]]>BELLEVUE, WASH. — May 14, 2024 — Today, ScaleOut Software introduces Version 3 of its ScaleOut Digital Twins cloud service and on-premises hosting platform. The latest release introduces a cutting-edge user interface designed to streamline the deployment and management of digital twins. It also adds advanced capabilities for constructing large-scale simulations using digital twins and establishing connections with a wider array of messaging sources. Notably, Version 3 seamlessly integrates with Amazon Dynamo DB, offering enhanced persistence capabilities.

cloud service and on-premises hosting platform. The latest release introduces a cutting-edge user interface designed to streamline the deployment and management of digital twins. It also adds advanced capabilities for constructing large-scale simulations using digital twins and establishing connections with a wider array of messaging sources. Notably, Version 3 seamlessly integrates with Amazon Dynamo DB, offering enhanced persistence capabilities.

“With the unveiling of ScaleOut Digital Twins, Version 3, we’re thrilled to introduce significant enhancements to our digital twin development and hosting platform,” declared Dr. William Bain, CEO and founder of ScaleOut Software. “As we collaborate with our clients to explore novel applications for digital twins within large-scale systems, we continue to uncover new ways to harness the combined power of digital twins and in-memory computing.

Key Features and Benefits of ScaleOut Digital Twins, Version 3:

- Easily Deploy and Manage Digital Twin Models: The new user interface reorganizes information to make it easier than ever to manage application deployment and status tracking for digital twin models. A new navigation design makes it easier to find key functions and access system statistics.

- Seamlessly Aggregate Statistics and Query Digital Twins: UI features in this new version simplify the creation of aggregate statistics that track large populations of digital twins and visualize live results. New capabilities enable continuous tabular queries and provide automatic query storage.

- Create and Manage Message Recordings: Users can create recordings of incoming real-time messages for replay during digital twin simulations and persist them to SQL Server. This enables application designers to refine and then test their analytics for real-time monitoring. It also enables the use of device messages to drive simulations for predictive modeling.

- Connect to New Message Sources and Persistence Stores: With ScaleOut Digital Twins, Version 3 users can connect digital twin models to open-source Kafka running on-premises or Azure Kafka running in the cloud. Digital twins can now be persisted to AWS Dynamo DB in addition to SQL Server and Azure Digital Twins.

Application developers can take advantage of ScaleOut’s open-source APIs to construct digital twin models for real-time monitoring and simulation on the ScaleOut Digital Twins platform. Prior to deployment at scale with thousands of digital twins, they can test applications using an open-source workbench.

The ScaleOut Digital Twins platform uses highly scalable, in-memory computing technology to host thousands of digital twins for monitoring live data from IoT devices and other data sources, enabling real-time analytics that provide actionable results in seconds. The platform also runs large-scale simulations that aid in the design and operation of complex systems, such as transportation and logistics networks, smart cities, and more.

For more information, please visit www.scaleoutdigitaltwins.com and follow @ScaleOut_Inc on X.

Additional Resources:

- Reimagining the ScaleOut Digital Twins UI for Version 3 blog post

- ScaleOut Digital Twins Product Page

About ScaleOut Software

Founded in 2003, ScaleOut Software develops leading-edge software that delivers scalable, highly available, in-memory computing and streaming analytics technologies to a wide range of industries. ScaleOut Software’s in-memory computing platform enables operational intelligence by storing, updating, and analyzing fast-changing, live data so that businesses can capture perishable opportunities before the moment is lost. The company is headquartered in Bellevue, Washington.

Media Contact

Kristin Peixotto

RH Strategic for ScaleOut Software

ScaleOutPR@rhstrategic.com

253-225-8029

The post ScaleOut Software Expands Digital Twins Potential with Version 3 Release appeared first on ScaleOut Software.

]]>The post ScaleOut Software Releases Open-Source APIs for Digital Twins and Development Workbench appeared first on ScaleOut Software.

]]>BELLEVUE, WASH. — December 12 — ScaleOut Software today announces the first open-source release of its digital twin APIs and development workbench for its ScaleOut Digital Twins hosting platform. Digital twin APIs allow developers to build powerful applications for real-time monitoring and simulating large systems. The workbench enables fast development and testing of digital twin applications before deployment. To help accelerate the development of digital twin applications, ScaleOut Software is releasing these components as open source under the Apache 2.0 license.

hosting platform. Digital twin APIs allow developers to build powerful applications for real-time monitoring and simulating large systems. The workbench enables fast development and testing of digital twin applications before deployment. To help accelerate the development of digital twin applications, ScaleOut Software is releasing these components as open source under the Apache 2.0 license.

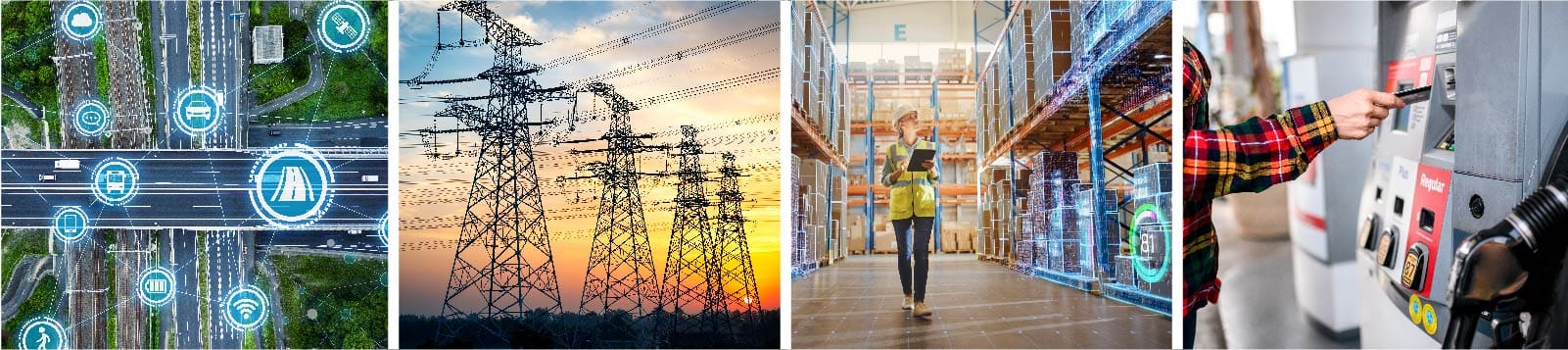

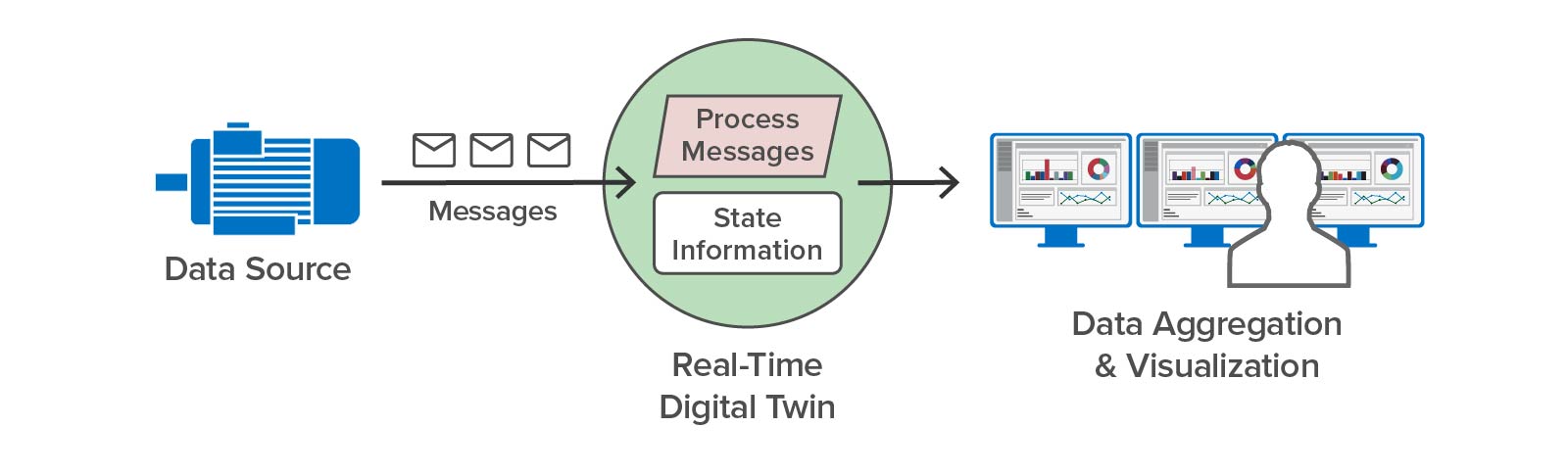

Going beyond product lifecycle management (PLM), which focuses on improving product design, digital twins enable important new capabilities for real-time monitoring and simulating systems with thousands of components. For example, they allow applications to analyze streaming data in real time instead of requiring offline batch analytics. They can also simulate large complex systems to improve design choices and decision making. Digital twins offer key benefits to data analysts and managers in a wide range of industries, including transportation, logistics, security, healthcare, disaster recovery, and financial services.

“We are excited to open source our digital twin APIs and new development workbench so that application developers can easily use digital twins to create a new generation of applications,” said Dr. William Bain, ScaleOut Software’s CEO and founder. “These freely accessible software components should simplify and accelerate the development of digital twin applications and encourage community participation.”

The ScaleOut Digital Twins platform uses highly scalable, in-memory computing technology to host thousands of digital twins for monitoring real-time data from IoT devices and other data sources, enabling real-time analytics that provides actionable results in seconds. The platform also runs large-scale simulations that aid in the design of complex systems, such as airline logistics and traffic control networks.

platform uses highly scalable, in-memory computing technology to host thousands of digital twins for monitoring real-time data from IoT devices and other data sources, enabling real-time analytics that provides actionable results in seconds. The platform also runs large-scale simulations that aid in the design of complex systems, such as airline logistics and traffic control networks.

Application developers use ScaleOut’s open-source APIs to build digital twin models for deployment on the ScaleOut Digital Twins platform. They can now use the open-source workbench to test application code built using these APIs and gain immediate feedback that shortens the design cycle. Once tested, developers can deploy applications on the platform to run at scale with thousands of digital twins.

Key Features and Benefits for ScaleOut Software’s Digital Twin APIs and Workbench:

- Easily Build Digital Twin Models: ScaleOut’s open-source APIs enable developers to build digital twin models in Java or C#. These models serve as templates for creating digital twins. The APIs employ standard, object-oriented design principles to simplify development and avoid the use of specialized or platform-specific techniques.

- Easily Test Digital Twin Models: ScaleOut’s open-source workbench lets developers test their digital twin models for both real-time monitoring and simulation in the user’s native development and test environment. The workbench accelerates application development by avoiding the need to deploy models to the hosting platform during the development process.

- Greater Ease of Access and Flexibility: Because ScaleOut’s digital twin APIs and workbench are freely available as open-source components, developers can download them at no cost and immediately start building digital twin applications. These APIs can also serve as the basis for creating digital twins that run on other platforms and provide cross-platform compatibility.

- Enable High Performance: ScaleOut’s digital twin model simplifies hosting on scalable, in-memory computing platforms. In-memory hosting dramatically reduces latency when digital twins access state information or process messages for both real-time analytics and simulation. It also enables high processing rates that support the creation of thousands of digital twins.

For more information, please visit www.scaleoutdigitaltwins.com and follow @ScaleOut_Inc on Twitter.

Additional Resources:

- ScaleOut Open Source APIs and Workbench Blog Post

- ScaleOut Digital Twins Product Page

- GitHub Repositories for Java and C# Open-Source APIs

About ScaleOut Software

Founded in 2003, ScaleOut Software develops leading-edge software that delivers scalable, highly available, in-memory computing and streaming analytics technologies to a wide range of industries. ScaleOut Software’s in-memory computing platform enables operational intelligence by storing, updating, and analyzing fast-changing, live data so that businesses can capture perishable opportunities before the moment is lost. It has offices in Bellevue, Washington and Beaverton, Oregon.

Media Contact

Brendan Hughes

RH Strategic for ScaleOut Software

ScaleOutPR@rhstrategic.com

206-264-0246

The post ScaleOut Software Releases Open-Source APIs for Digital Twins and Development Workbench appeared first on ScaleOut Software.

]]>The post Preventing Train Derailments Using Digital Twins appeared first on ScaleOut Software.

]]>

Using Digital Twins to Track and Simulate Large Systems

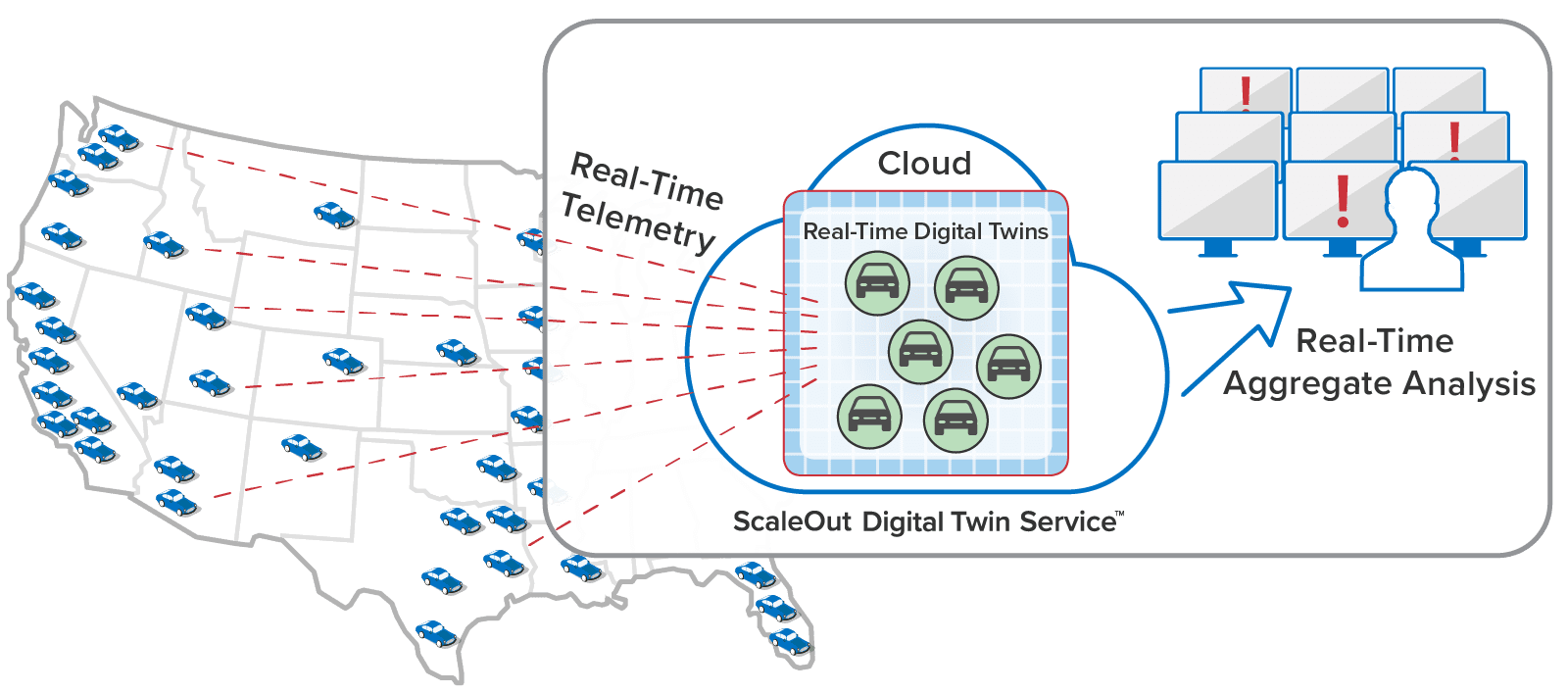

For decades, digital twins have played a crucial role in the field of product lifecycle management (PLM), where they assist in the design and testing of many types of devices, from valves to jet engines. ScaleOut Software has pioneered the use of digital twin technology combined with in-memory computing to track the behavior of live systems with many components – such as vehicle fleets, IoT devices, and even people – to monitor status in real time and boost situational awareness for operational managers.

Now, both data analysts and system managers can also harness the power of digital twins to simulate the behaviors of complex systems with thousands of interacting entities. Digital twin simulations can provide invaluable information about complex interactions that are otherwise difficult to study. They can explore scenarios often found in live systems, informing decisions and helping to identify potential issues in the planning phase. They also empower professionals to validate real-time analytics prior to deployment and to make predictions that help manage live systems.

A Case Study: Rail Transportation Safety

Consider an important use case in transportation safety for the U.S. freight railway system. The U.S moves more than 1.6 billion tons of freight over 140,000 miles of track each year. In 2022, there were 1,164 train derailments that caused damage measured in the millions of dollars and cost multiple lives. For example, in February 2023, fifty freight cars derailed in East Palestine, Ohio in a widely publicized accident. How can digital twins help prevent similar emergencies?

Currently, track-side sensors detect mechanical issues that can cause derailments, such as severely overheated wheel bearings, and radio train engineers often too late to prevent an accident. In the Ohio event, the NTSB preliminary report described increasing temperatures reported by three rail-side “hot box” detectors before the accident occurred. The U.S. railway network places these detectors every few miles across the country:

Example of a hot box detector (BBT609 – Own work, CC BY-SA 3.0, https://commons.wikimedia.org/w/index.php?curid=25975512)

Hot box detectors capture the data needed to track increasing wheel bearing temperatures and predict impending derailments. However, safety systems need to harness this data more effectively to prevent these incidents. Digital twins can help.

Real-time analytics using digital twins can combine temperature information from multiple hot boxes to detect anomalies and take action faster, before small problems escalate into derailments. Cloud-hosted analytics can simultaneously track the entire rail network’s rolling stock using a scalable, in-memory computing platform, such as the ScaleOut Digital Twin Streaming Service , to host digital twins. They can continuously analyze patterns of temperature changes for each car’s wheel bearings, combine this with known information about the rail car, such as its maintenance history, and then assess the likelihood of failure and alert personnel within milliseconds. This use of contextual information also helps prevent false-positive alerts that create costly delays.

, to host digital twins. They can continuously analyze patterns of temperature changes for each car’s wheel bearings, combine this with known information about the rail car, such as its maintenance history, and then assess the likelihood of failure and alert personnel within milliseconds. This use of contextual information also helps prevent false-positive alerts that create costly delays.

Using Digital Twin Simulations to Design and Test Real-Time Analytics

To help railway engineers develop and test new predictive analytics software, large-scale simulations can model the flow of information from the hundreds of thousands of freight cars that cross the U.S. each day, as well as the thousands of detectors placed along the tracks. These simulations can statistically simulate emerging wheel bearing issues to test how well real-time analytics software can detect impending failures before an accident occurs. Digital twins serve double duty here; they implement real-time analytics, and they model wheel bearing failures.

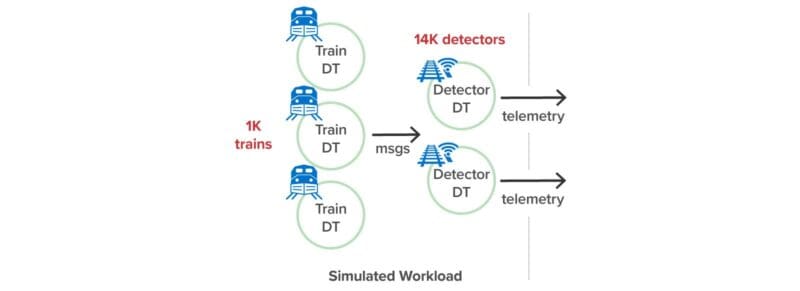

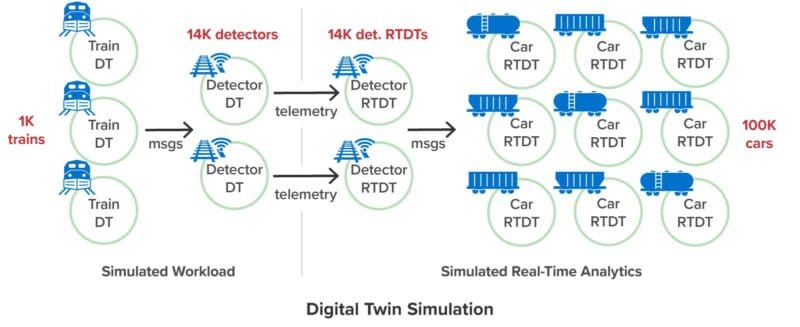

As a proof of concept, ScaleOut Software created a simulation of the U.S. freight rail system to evaluate how well digital twins can track wheel bearing temperatures from multiple hot box detectors and alert engineers to avoid derailments. The simulation runs as a discrete event simulation with digital twins exchanging messages in simulated time to model interactions.

Workload Generator

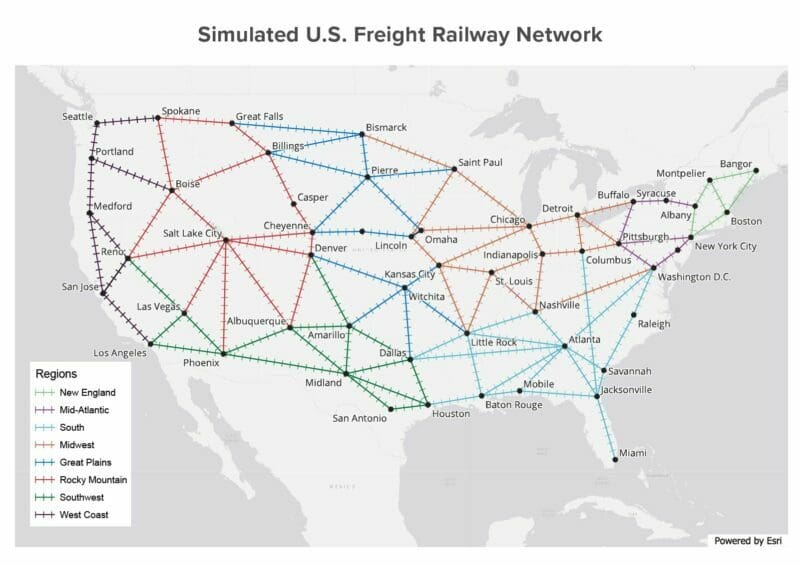

This workload generator creates 500-1000 simulated trains, each with 100 freight cars and 8 wheel bearings per car. The simulated trains travel on a hypothetical rail map that crisscrosses a hypothetical U.S. rail map with 107 routes between major U.S. cities:

The simulated rail network places 3,800 hot box detectors approximately every 10 miles along the tracks. Each detector’s job is to report the wheel bearing temperatures for every freight car as a train passes it along the route, just as a real hot box detector would.

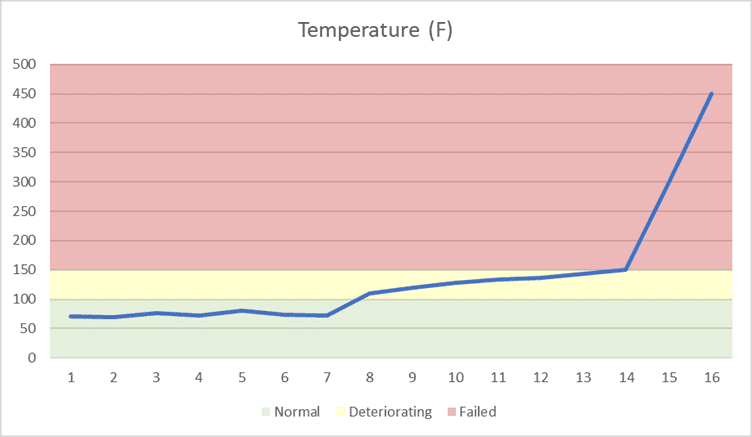

The simulation uses a separate digital twin model to implement trains and hot box detectors. (Each digital twin model has its own properties and algorithms.) A simulated train keeps track of its route, current position, speed, and freight cars. It also implements a probabilistic model of wheel bearing failures that cause a wheel bearing to enter a deteriorating state with a probability of 1:1M and then increase its temperature over time. As it passes a simulated detector, each train reports the temperature of all wheel bearings to the detector. After a deteriorating wheel bearing passes ten detectors, it increases to a 1:4 probability of entering a failed state with a rapid temperature rise. Once a bearing reaches 500 degrees Fahrenheit, the model considers it to have experienced a catastrophic failure, which corresponds to a fire or derailment.

Here is an example of a wheel bearing’s temperature profile as it passes detectors along the rails:

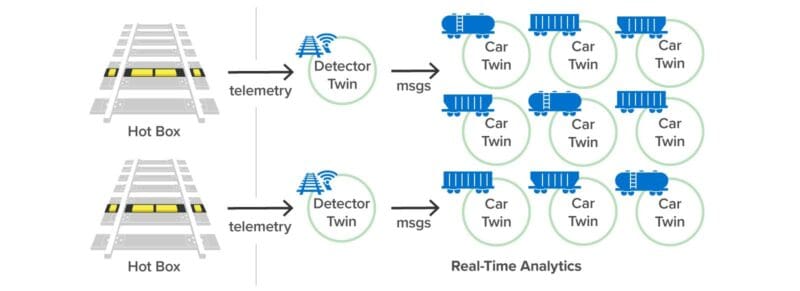

As simulated trains pass hot box detectors and report their wheel bearing temperatures, the detectors send a message to their corresponding real-time digital twins, which capture and analyze this telemetry.

The following diagram shows the simulation’s workload generator made up of digital twins:

Real-Time Analytics

Digital twins also implement real-time analytics code for detecting wheel bearing failures. Once deployed in a data center for production use, they continuously track telemetry from real hot box detectors to look for possible wheel bearing failures and alert train engineers. In an actual deployment, existing hot box detectors would send messages over the cellular phone system to a cloud-based analytics service instead of just making radio broadcasts to nearby train personnel.

The analytics code uses two digital twin models, one for hot box detectors and another for individual train cars. The hot box detector twins receive telemetry messages from corresponding physical hot boxes along the tracks. Digital twins of train cars track telemetry and other relevant information about all the wheel bearings on each car. They build a picture over time of trends in wheel bearing temperatures reported by multiple detectors. They also can combine a temperature history with other contextual information, such as the type of wheel bearing and its service history, to best decide when a failure might be imminent.

In the simulation, train car digital twins just keep temperature histories for all wheel bearings and look for an upward trend over time. If a digital twin detects a potentially dangerous trend, it sends a message back to the simulated train, instructing it to stop.

To run the simulation, the workload generator sends messages to the real-time analytics:

The same analytics twins can receive telemetry from actual hot box detectors after deployment:

Simulation Results

The simulation divides the U.S. rail network into regions. To check that trend analysis is working, we disable it in the south and southwest and compare it to other regions. The simulation shows that trend analysis catches all deteriorating bearings before they fail and cause derailments. Derailments only occur on routes not performing trend analysis.

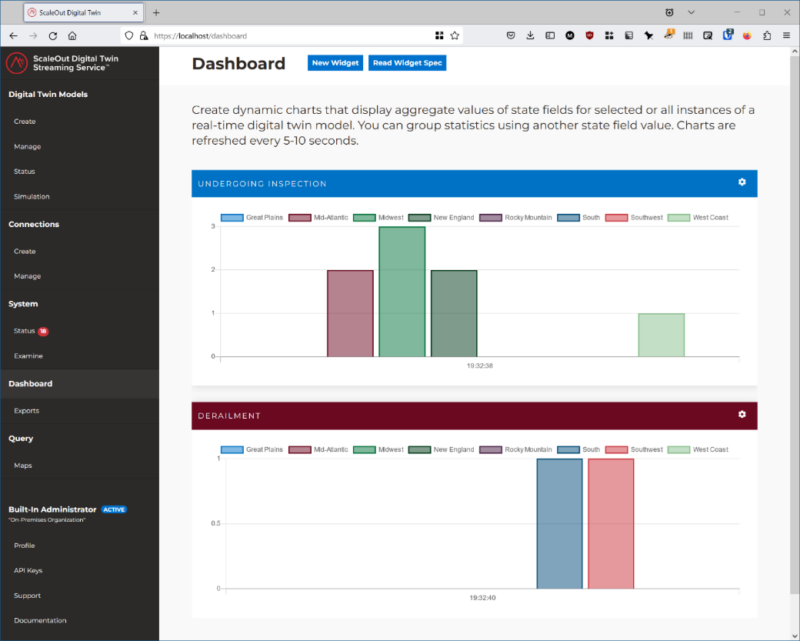

The ScaleOut Digital Twin Streaming Service provides tools to visualize these results. The following dashboard widgets track the number of alerted trains by region that are undergoing inspection (because trend analysis detects an issue) along with the number of derailed trains. Note that derailments only occur in the regions with trend analysis disabled:

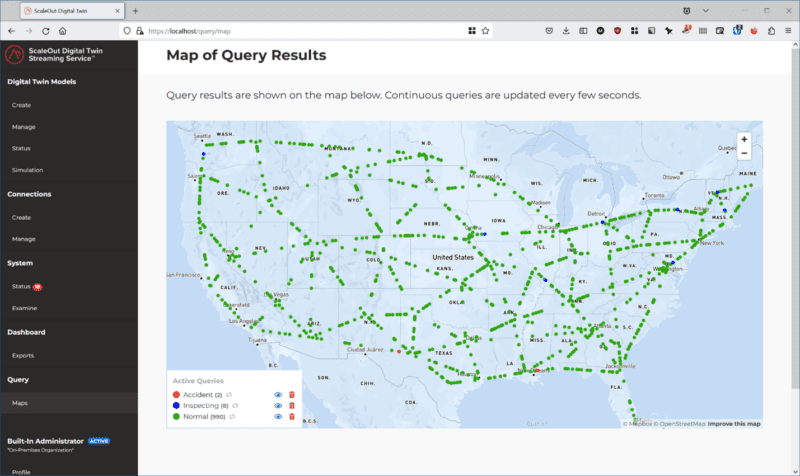

The following geospatial map of a continuous query shows the trains which are running normally in green, undergoing inspection in blue, and derailed in red. This map confirms that all derailed trains are located in the south and southwest regions and shows trains undergoing inspection in other regions:

Summing Up

The U.S. freight railways provide the backbone of the country’s freight transport system and must run with minimum disruptions. New technology like digital twins can take advantage of existing infrastructure to provide continuous monitoring that is missing today. Using scalable in-memory computing, digital twins can capture live telemetry throughout the rail system, analyze it in context, and create immediate alerts when needed. They can also implement simulations to model these issues and help planners evaluate real-time analytics software.

Beyond just watching wheel bearings, digital twins can track other areas of the rail system, such as rail intersections and switches, to further boost safety. With this technology, digital twins can help build next-generation safety systems to eliminate dangerous and costly derailments.

The post Preventing Train Derailments Using Digital Twins appeared first on ScaleOut Software.

]]>The post Video: Preventing Train Derailments with Real-Time Digital Twins appeared first on ScaleOut Software.

]]>

Introducing the latest ScaleOut Software video release: Preventing Train Derailments with Real-Time Digital Twins. Modern society relies on complex transportation networks to keep things moving smoothly, but when issues arise, the consequences can be substantial. In 2022 alone, over 1,100 train derailments in the US led to damages exceeding $100 million. Costs from the East Palestine, Ohio train derailment in February 2023 reached a staggering $803 million.

Many costly train derailments could potentially be prevented using a combination of existing infrastructure and new real-time digital twin technology. In this video, you’ll learn how real-time digital twins can revolutionize accident prevention for railroads by monitoring live data and taking action before derailments occur. See how digital twins can analyze data from existing trackside detectors simultaneously and determine when wheel bearings are likely to fail. Watch a simulation that illustrates how ScaleOut’s digital twins can predict problems and intervene to prevent accidents.

- Learn more about ScaleOut’s Digital Twin Streaming Service

- Subscribe to ScaleOut’s Youtube channel for more videos

The post Video: Preventing Train Derailments with Real-Time Digital Twins appeared first on ScaleOut Software.

]]>The post Major U.S. Airline appeared first on ScaleOut Software.

]]>

Applications: Fast data tracking for flights, passengers, and messages

Server configuration: Multiple server farms in several locations run ScaleOut StateServer® Pro on more than 100 physical and virtual servers to manage data for hundreds of connected web and application servers.

Reason for Deployment: Needed low latency data storage and scalable data access for mission-critical applications serving hundreds of thousands of global passengers, flights, and operations functions.

Results: Removed data access bottlenecks to provide real-time data when it is needed for improved customer satisfaction and operational efficiency, developed a trusted technology partnership, and lowered cost by replacing another technology solution with ScaleOut Software.

Overview

A major U.S. airline has been a ScaleOut Software customer for more than a decade, using ScaleOut StateServer Pro with its in-memory computing and caching technologies to help manage the airline’s critical global flight tracking, passenger, baggage, and operations data with fast and highly available access to all stored data at any time.

Over the years, ScaleOut has become a trusted technology partner for the airline. It has helped to build a customized data access layer on top of ScaleOut StateServer Pro to meet the airline’s specific data access and management needs, to deliver high-value solutions with substantial cost efficiencies, and most recently to navigate additional complications spurred on by COVID-19’s impacts on the travel industry.

Challenges

At the airline, ScaleOut Software primarily supports a team that is responsible for sourcing and persisting real-time data into enterprise data stores and for delivering events to application clients for business operations around the globe. Having fast and reliable access to the data stored in ScaleOut Software’s in-memory data grid is critical to managing passenger information, flight and baggage tracking, and operations control.

This team functions at the core of the airline’s nervous system and sends server-based information to data centers and systems around the world. Whether for passenger data or flight tracking and positioning information, the airline depends on ScaleOut Software to provide fast access to its data from any location without issues or outages.

“We really needed a fast system to store our data, keep it persisted, and make sure it doesn’t get lost or damaged.” – Senior Software Developer

Results

Since deploying ScaleOut StateServer Pro, the airline has increased the performance of its applications and gained a reliable tool for caching critical .NET objects and their associations across locations that meets the need to process hundreds of thousands of data points each day.

“The product has been awesome, stable. And that’s exactly what we want, you know? We don’t have to do anything to it. So, it’s perfect. It just runs and runs.” – Senior Software Developer

Due to ScaleOut’s reliability and ease of use, the airline has consolidated from using three different caching technologies to two and standardized on ScaleOut StreamServer Pro. In addition to improving software engineering workflows, this change provides additional business and cost-saving efficiencies.

“When looking at competing technologies, ScaleOut Software, is a much better value and it delivers consistently. They have also been a really great partner to us, taking our feedback seriously and helping to keep our data operations running smoothly.” – Resource Development Manager

This major U.S. airline values having a true partnership with ScaleOut Software and its development, sales, and leadership teams. Whether providing additional coding support to complete a software transformation project, customizing its product for the airline’s needs, or flexibility to mitigate COVID-19 driven challenges, ScaleOut Software has been on call to help address all challenges.

The post Major U.S. Airline appeared first on ScaleOut Software.

]]>The post CEO William Bain Gives Talk for the Digital Twin Consortium appeared first on ScaleOut Software.

]]>In this talk, Dr. Bain described a new vision for digital twins that takes them beyond traditional applications to address challenges faced by managers of large systems with thousands or even millions of data sources. Digital twins can implement streaming analytics that continuously monitor these complex systems for emerging issues and help managers boost their situational awareness.

Numerous applications can benefit from this new use of digital twins. Examples described in the talk include tracking vehicle fleets and logistics networks, improving the safety of transportation systems, and assisting in disaster recovery.

ScaleOut Software’s in-memory computing technology makes it possible to simultaneously host thousands of digital twins and run both streaming analytics and simulations. The talk explains how this technology adds real-time aggregate analytics while lowering response times and scaling performance.

Download The Presentation Slides

- Learn more about the ScaleOut Digital Twin Streaming Service

.

. - Watch Dr. Bain’s previous digital twin talk.

The post CEO William Bain Gives Talk for the Digital Twin Consortium appeared first on ScaleOut Software.

]]>The post Watch: Founder and CEO William Bain Talks Real-Time Digital Twins with Techstrong TV appeared first on ScaleOut Software.

]]>Watch the video below:

- Learn more about ScaleOut’s Digital Twin Streaming Service

- Watch Dr. Bain’s previous interview with Techstrong TV

The post Watch: Founder and CEO William Bain Talks Real-Time Digital Twins with Techstrong TV appeared first on ScaleOut Software.

]]>The post ScaleOut Software Adds Google Cloud Support Across Products appeared first on ScaleOut Software.

]]>BELLEVUE, WASH. — June 15, 2023 — ScaleOut Software today announced that its product suite now includes Google Cloud support. Applications running in Google Cloud can take advantage of ScaleOut’s industry leading distributed cache and in-memory computing platform to scale their performance and run fast, data-parallel analysis on dynamic business data. The ScaleOut Product Suite is a comprehensive collection of production-proven software products, including In-Memory Database, StateServer, GeoServer, Digital Twin Streaming Service, StreamServer and more. This integration complements ScaleOut’s existing Amazon EC2 and Microsoft Azure Cloud support to provide comprehensive multi-cloud capabilities.

is a comprehensive collection of production-proven software products, including In-Memory Database, StateServer, GeoServer, Digital Twin Streaming Service, StreamServer and more. This integration complements ScaleOut’s existing Amazon EC2 and Microsoft Azure Cloud support to provide comprehensive multi-cloud capabilities.

“We are excited to add Google Cloud Platform support for hosting the ScaleOut Product Suite,” said Dr. William Bain, CEO of ScaleOut Software. “This support further broadens the public cloud options available to our customers for hosting our industry-leading distributed cache and in-memory analytics. Google’s impressive performance enables our distributed cache to deliver the full benefits of automatic throughput scaling to applications.”

Key benefits of ScaleOut’s support for the Google Cloud Platform include:

- Simplified Deployment and Management: Users can take advantage of the ScaleOut Management Console to deploy a distributed cache to Google Cloud using a step-by-step wizard and track its status.

- Automatic Clustering: Distributed caches comprising one or more virtual servers automatically create a single compute cluster to serve client requests with both scalability and high availability.

- Automatic Client Connectivity: Client applications running either within Google Cloud or from remote sites can automatically connect to all caching servers within a cluster just by specifying the cluster’s name.

- Elastic Performance: Using the management console, users can add or remove virtual servers from a distributed cache to meet the needs of application workloads. In addition, users can implement auto-scaling policies based on performance measures, such as memory usage.

Distributed caches, such as the ScaleOut Product Suite, allow applications to store fast-changing data, such as e-commerce shopping carts, stock prices, and streaming telemetry, in memory with low latency for rapid access and analysis. Built using a cluster of virtual or physical servers, these caches automatically scale access throughput and analytics to handle large workloads. In addition, they provide built-in high availability to ensure uninterrupted access if a server fails. They are ideal for hosting on cloud platforms, which offer highly elastic computing resources to their users without the need for capital investments.

For more information, please visit www.scaleoutsoftware.com and follow @ScaleOut_Inc on Twitter.

Additional Resources:

- ScaleOut Google Cloud Platform blog post

- ScaleOut Product Suite information

About ScaleOut Software

Founded in 2003, ScaleOut Software develops leading-edge software that delivers scalable, highly available, in-memory computing and streaming analytics technologies to a wide range of industries. ScaleOut Software’s in-memory computing platform enables operational intelligence by storing, updating, and analyzing fast-changing, live data so that businesses can capture perishable opportunities before the moment is lost. It has offices in Bellevue, Washington and Beaverton, Oregon.

Media Contact

Brendan Hughes

RH Strategic for ScaleOut Software

ScaleOutPR@rhstrategic.com

206-264-0246

The post ScaleOut Software Adds Google Cloud Support Across Products appeared first on ScaleOut Software.

]]>The post Deploying ScaleOut’s Distributed Cache In Google Cloud appeared first on ScaleOut Software.

]]>

by Olivier Tritschler, Senior Software Engineer

Because of their ability to provide highly elastic computing resources, public clouds have become a highly attractive platform for hosting distributed caches, such as ScaleOut StateServer®. To complement its current offerings on Amazon AWS and Microsoft Azure, ScaleOut Software has just announced support for the Google Cloud Platform. Let’s take a look at some of the benefits of hosting distributed caches in the cloud and understand how we have worked to make both deployment and management as simple as possible.

Distributed Caching in the Cloud

Distributed caches, like ScaleOut StateServer, enhance a wide range of applications by offering shared, in-memory storage for fast-changing state information, such as shopping carts, financial transactions, geolocation data, etc. This data needs to be quickly updated and shared across all application servers, ensuring consistent tracking of user state regardless of the server handling a request. Distributed caches also offer a powerful computing platform for analyzing live data and generating immediate feedback or operational intelligence for applications.

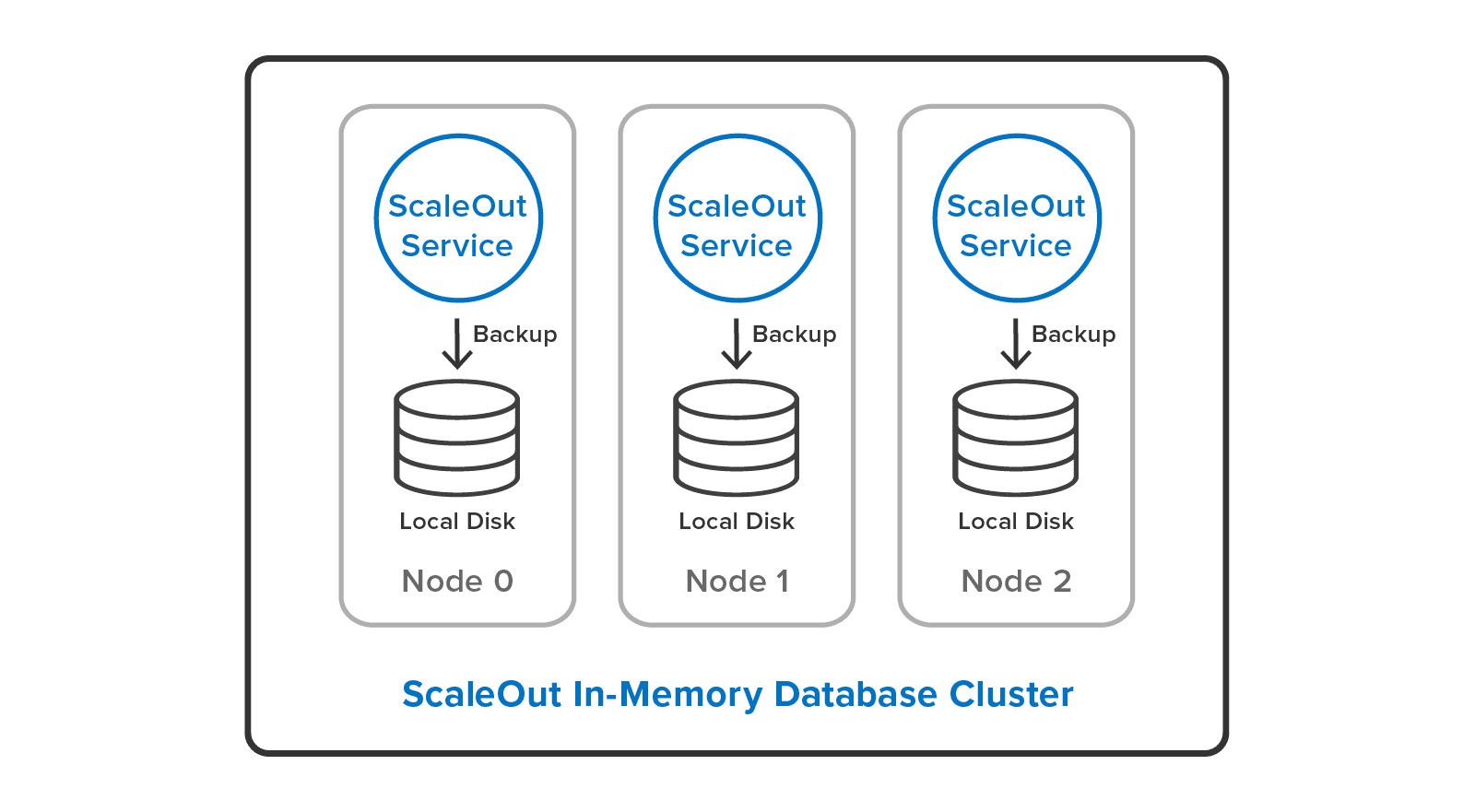

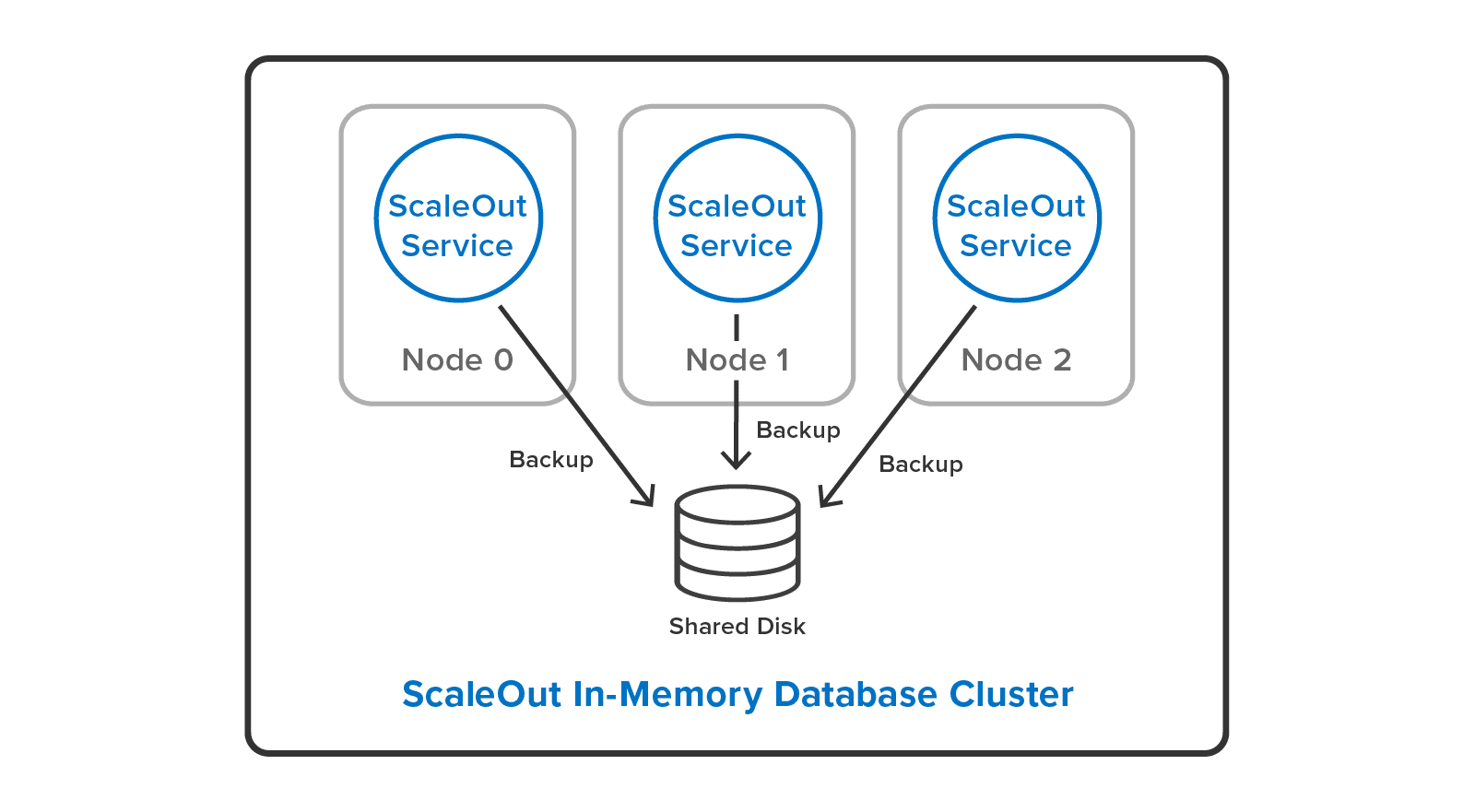

Built using a cluster of virtual or physical servers, distributed caches automatically scale access throughput and analytics to handle large workloads. With their tightly integrated client-side caching, these caches typically provide faster access to fast-changing data than backing stores, such as blob stores and database servers. In addition, they incorporate redundant data storage and recovery techniques to provide built-in high availability and ensure uninterrupted access if a server fails.

To meet the needs of elastic applications, distributed caches must themselves be elastic. They are designed to transparently scale upwards or downwards by adding or removing servers as the workload varies. This is where the power of the cloud becomes clear.

Because cloud infrastructures provide inherent elasticity, they can benefit both applications and distributed caches. As more computing resources are needed to handle a growing workload, clouds can deploy additional virtual servers (also called cloud “instances”). Once a period of high demand subsides, resources can be dialed back to minimize cost without compromising quality of service. The flexibility of on-demand servers also avoids costly capital investments and reduces management costs.

Deploying ScaleOut’s Distributed Cache in the Google Cloud

A key challenge in using a distributed cache as part of a cloud-hosted application is to make it easy to deploy, manage, and access by the application. Distributed caches are typically deployed in the cloud as a cluster of virtual servers that scales as the workload demands. To keep it simple, a cloud-hosted application should just view a distributed cache as an abstract entity and not have to keep track of individual caching servers or which data they hold. The application does not want to be concerned with connecting N application instances to M caching servers, especially when N and M (as well as cloud IP addresses) vary over time. In particular, an application should not have to discover and track the IP addresses for the caching servers.

Even though a distributed cache comprises several servers, the simplest way to deploy and manage it in the cloud is to identify the cache as a single, coherent service. ScaleOut StateServer takes this approach by identifying a cloud-hosted distributed cache with a single “store” name combined with access credentials. This name becomes the basis for both managing the deployed servers and connecting applications to the cache. It lets applications connect to the caching cluster without needing to be aware of the IP addresses for the cluster’s virtual servers.

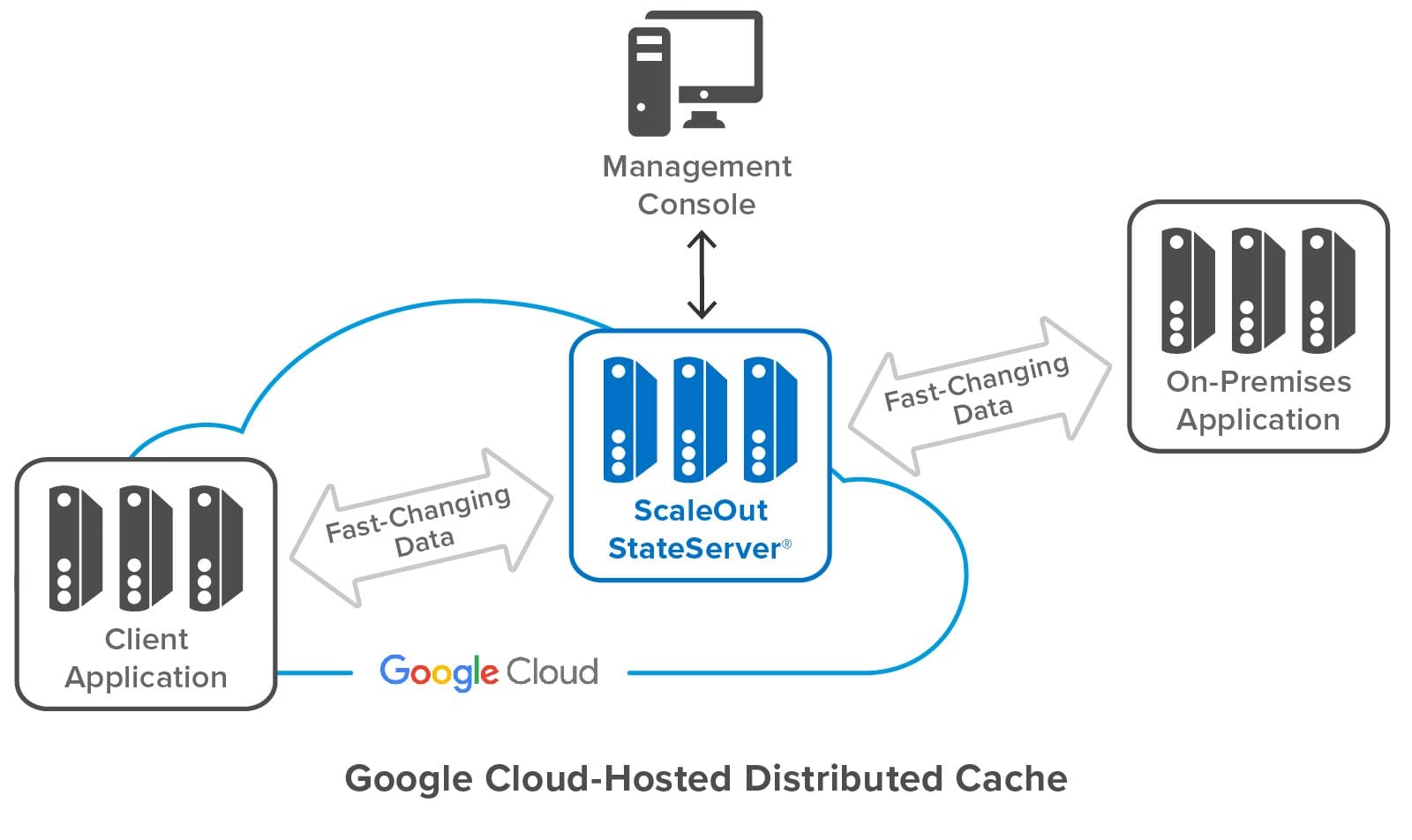

The following diagram shows a ScaleOut StateServer distributed cache deployed in Google Cloud. It shows both cloud-hosted and on-premises applications connected to the cache, as well as ScaleOut’s management console, which lets users deploy and manage the cache. Note that while the distributed cache and applications all contain multiple servers, applications and users can access the cache just by using its store name.

Building on the features developed for the integration of Amazon AWS and Microsoft Azure, the ScaleOut Management Console now lets users deploy and manage a cache in Google Cloud by just specifying a store name and initial number of servers, as well as other optional parameters. The console does the rest, interacting with Google Cloud to start up the distributed cache and configure its servers. To enable the servers to form a cluster, the console records metadata for all servers and identifies them as having the same store name.

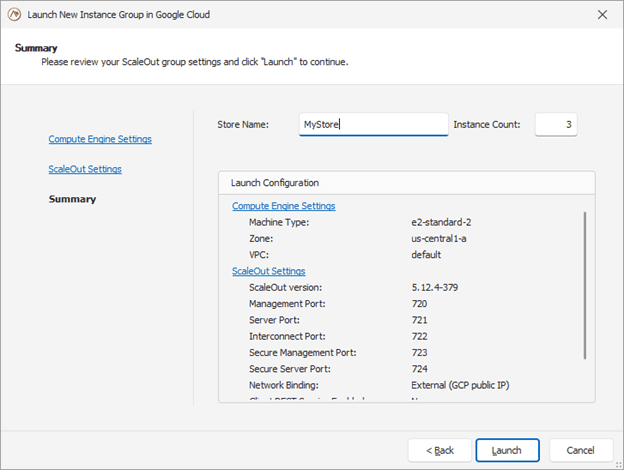

Here’s a screenshot of the console wizard used for deploying ScaleOut StateServer in Google Cloud:

The management console provides centralized, on-premises management for initial deployment, status tracking, and adding or removing servers. It uses Google’s managed instance groups to host servers, and automated scripts use server metadata to guarantee that new servers automatically connect with an existing store. The managed instance groups used by ScaleOut also support defining auto-scaling options based on CPU/Memory usage metrics.

Instead of using the management console, users can also deploy ScaleOut StateServer to Google Cloud directly with Google’s Deployment Manager using optional templates and configuration files.

Simplifying Connectivity for Applications

On-premises applications typically connect each client instance to a distributed cache using a fixed list of IP addresses for the caching servers. This process works well on premises because the cache’s IP addresses typically are well known and static. However, it is impractical in the cloud since IP addresses change with each deployment or reboot of a caching server.

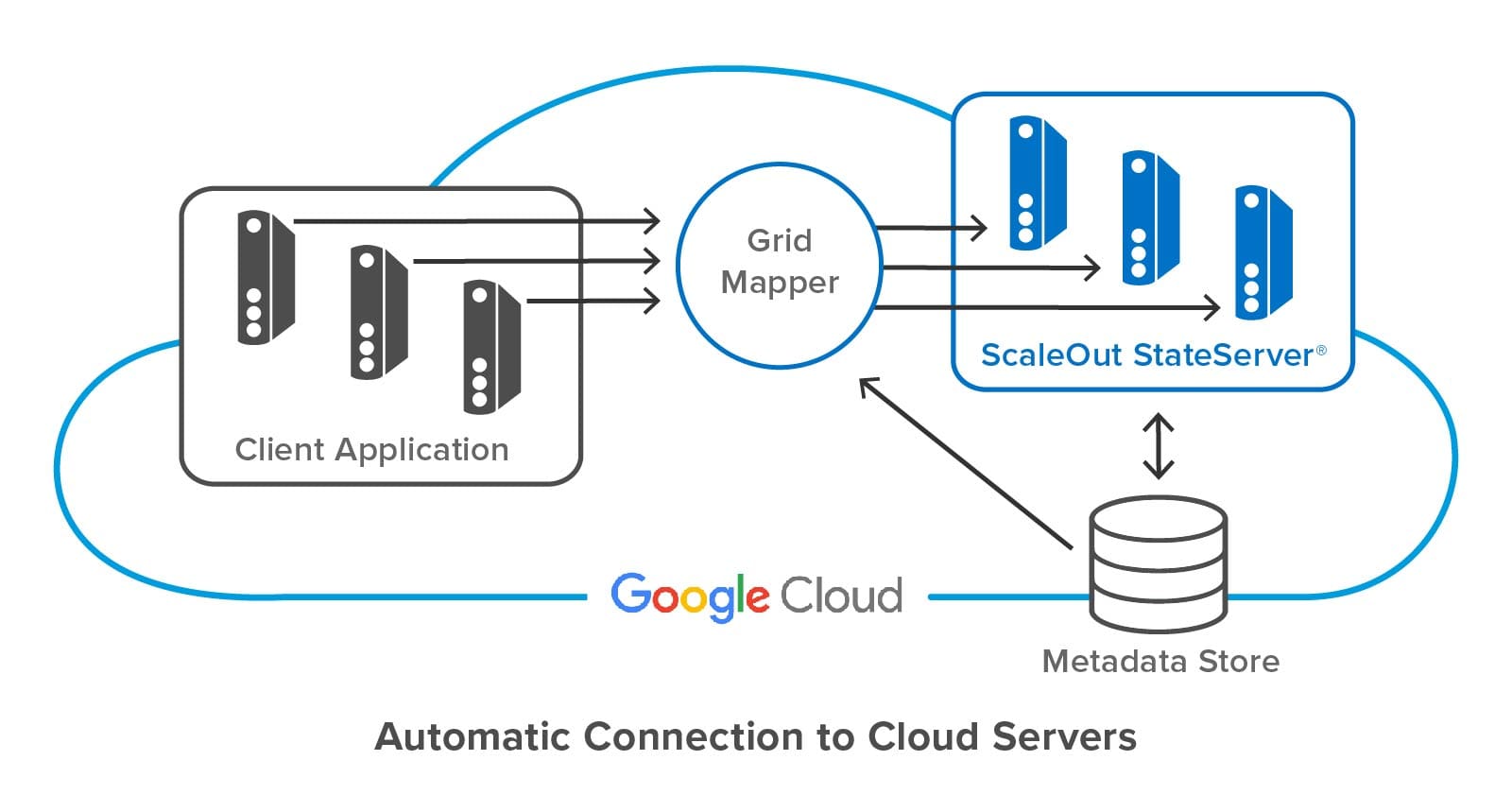

To avoid this problem, ScaleOut StateServer lets client applications specify a store name and credentials to access a cloud-hosted distributed cache. ScaleOut’s client libraries internally use this store name to discover the IP addresses of caching servers from metadata stored in each server.

The following diagram shows a client application connecting to a ScaleOut StateServer distributed cache hosted in Google Cloud. ScaleOut’s client libraries make use of an internal software component called a “grid mapper” which acts as a bootstrap mechanism to find all servers belonging to a specified cache using its store name. The grid mapper accesses the metadata for the associated caching servers and returns their IP addresses back to the client library. The grid mapper handles any potential changes in IP addresses, such as servers being added or removed for scaling purposes.

Summing up

Because they provide elastic computing resources and high performance, public clouds, such as Google Cloud, offer an excellent platform for hosting distributed caches. However, the ephemeral nature of their virtual servers introduces challenges for both deploying the cluster and connecting applications. Keeping deployment and management as simple as possible is essential to controlling operational costs. ScaleOut StateServer makes use of centralized management, server metadata, and automatic client connections to address these challenges. It ensures that applications derive the full benefits of the cloud’s elastic resources with maximum ease of use and minimum cost.

The post Deploying ScaleOut’s Distributed Cache In Google Cloud appeared first on ScaleOut Software.

]]>The post Simulate at Scale with Digital Twins appeared first on ScaleOut Software.

]]>

Digital Twins Can Implement Both Streaming Analytics and Simulations

With the ScaleOut Digital Twin Streaming Service , the digital twin software model has proven its versatility well beyond its roots in product lifecycle management (PLM). This cloud-based service uses digital twins to implement streaming analytics and add important contextual information not possible with other stream-processing architectures. Because each digital twin can hold key information about an individual data source, it can enrich the analysis of incoming telemetry and extracts important, actionable insights without delay. Hosting digital twins on a scalable, in-memory computing platform enables the simultaneous tracking of thousands — or even millions — of data sources.

, the digital twin software model has proven its versatility well beyond its roots in product lifecycle management (PLM). This cloud-based service uses digital twins to implement streaming analytics and add important contextual information not possible with other stream-processing architectures. Because each digital twin can hold key information about an individual data source, it can enrich the analysis of incoming telemetry and extracts important, actionable insights without delay. Hosting digital twins on a scalable, in-memory computing platform enables the simultaneous tracking of thousands — or even millions — of data sources.

Owing to the digital twin’s object-oriented design, many diverse applications can take advantage of its powerful but easy-to-use software architecture. For example, telematics applications use digital twins to track telemetry from every vehicle in a fleet and immediately identify issues, such as lost or erratic drivers or emerging mechanical problems. Airlines can use digital twins to track the progress of passengers throughout an itinerary and respond to delays and cancellations with proactive remedies that smooth operations and reduce stress. Other applications abound, including health informatics, financial services, logistics, cybersecurity, IoT, smart cities, and crime prevention.

Here’s an example of a telematics application that tracks a large fleet of vehicles. Each vehicle has a corresponding digital twin analyzing telemetry from the vehicle in real time:

Applications like these need to simultaneously track the dynamic behavior of numerous data sources, such as IoT devices, to identify issues (or opportunities) as quickly as possible and give systems managers the best possible situational awareness. To either validate streaming analytics code for a complex physical system or model its behavior, it is useful to simulate the devices and the telemetry that they generate. The ScaleOut Digital Twin Streaming Service now enables digital twins to simplify both tasks.

Use Digital Twins to Simulate a Workload for Streaming Analytics

Digital twins can implement a workload generator that generates telemetry used in validating streaming analytics code. Each digital twin models the behavior of a physical data source, such as a vehicle in fleet, and the messages it sends and receives. When running in simulation, thousands of digital twins can then generate realistic telemetry for all data sources and feed streaming analytics, such as a telematics application, designed to track and analyze its behavior. In fact, the streaming service enables digital twins to implement both the workload generator and the streaming analytics. Once the analytics code has been validated in this manner, developers can then deploy it to track a live system.

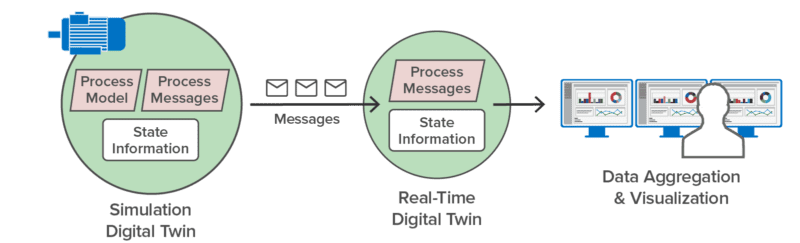

Here’s an example of using a digital twin to simulate the operations of a pump and the telemetry (such as the pump’s temperature and RPM) that it generates. Running in simulation, this simulated pump sends telemetry messages to a corresponding real-time digital twin that analyzes the telemetry to predict impending issues:

Once the simulation has validated the analytics, the real-time digital twin can be deployed to analyze telemetry from an actual pump:

This example illustrates how digital twins can both simulate devices and provide streaming analytics for a live system.

Using digital twins to build a workload generator enables investigation of a wide range of scenarios that might be encountered in typical, real-world use. Developers can implement parameterizable, stateful models of physical data sources and then vary these parameters in simulation to evaluate the ability of streaming analytics to analyze and respond in various situations. For example, digital twins could simulate perimeter devices detecting security intrusions in a large infrastructure to help evaluate how well streaming analytics can identify and classify threats. In addition, the streaming service can capture and record live telemetry and later replay it in simulation.

Use Digital Twins to Simulate a Large System with Many Entities

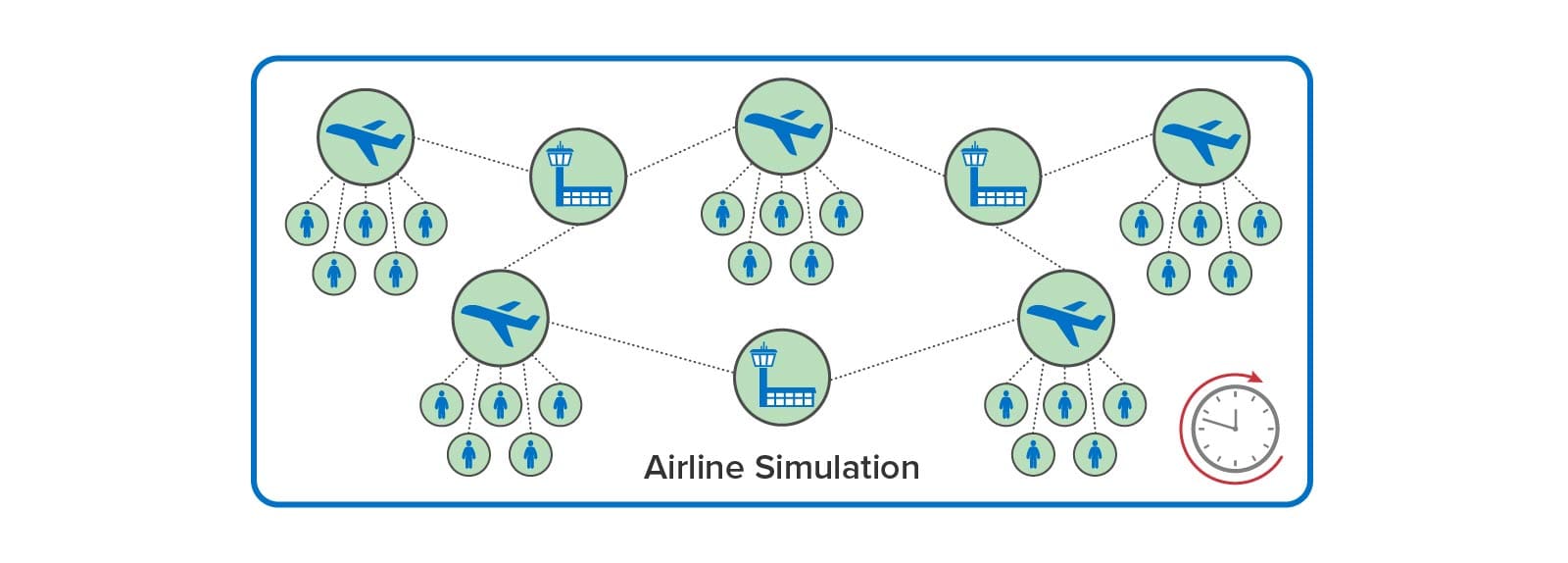

In addition to using digital twins for analyzing telemetry, the ScaleOut Digital Twin Streaming Service enables digital twins to implement time-driven simulations that model large groups of interacting physical entities. Digital twins can model individual entities within a large system, such as airline passengers, aircraft, airport gates, and air traffic sectors in a comprehensive airline model. These digital twins maintain state information about the physical entities they represent, and they can run code at each time step in the simulation model’s execution to update digital twin state over time. These digital twins also can exchange messages that model interactions.

For example, an airline tracking system can use simulation to model numerous types of weather delays and system outages (such as ground stops) to see how their system manages passenger needs. As the simulation model evolves over time, simulated aircraft can model flight delays and send messages to simulated passengers that react by updating their itineraries. Here is a depiction of an airline tracking simulation:

In contrast to the use of digital twins for PLM, which typically embody a complex design within a single digital twin model, the ScaleOut Digital Twin Streaming Service enables large numbers of physical entities and their interactions to be simulated. By doing this, simulations can model intricate behaviors that evolve over time and reveal important insights during system design and optimization. They also can be fed live data and run faster than real time as a tool for making predictions that assist decision-making by managers (such as airline dispatchers).

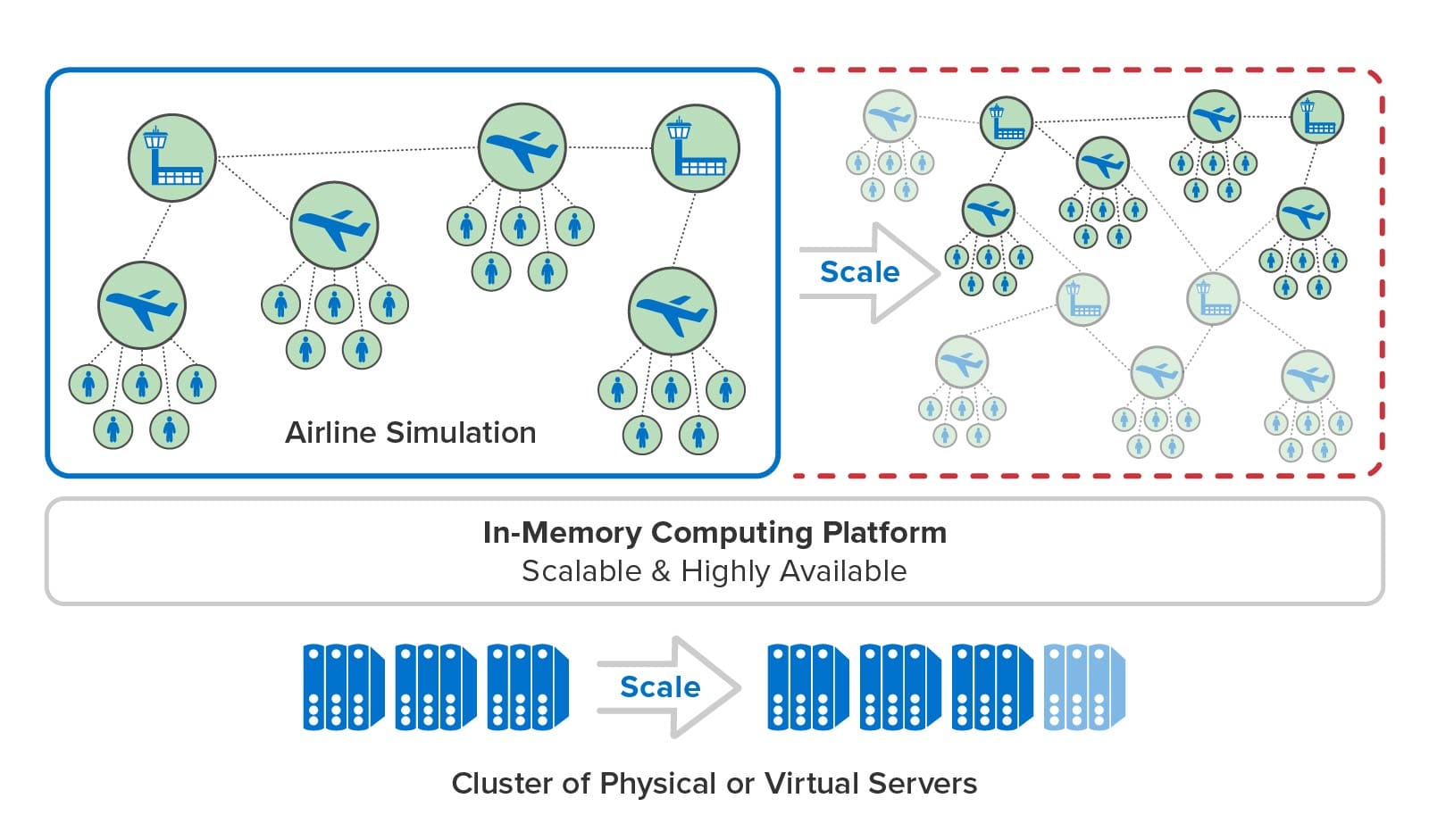

Scalable, In-Memory Computing Makes It Possible

Digital twins offer a compelling software architecture for implementing time-driven simulations with thousands of entities. In a typical implementation, developers create multiple digital twin models to describe the state information and simulation code representing various physical entities, such as trucks, cargo, and warehouses in a telematics simulation. They create instances of these digital twin models (simply called digital twins) to implement all of the entities being simulated, and the streaming service runs their code at each time step being simulated. During each time step, digital twins can exchange messages that represent simulated interactions between physical entities.

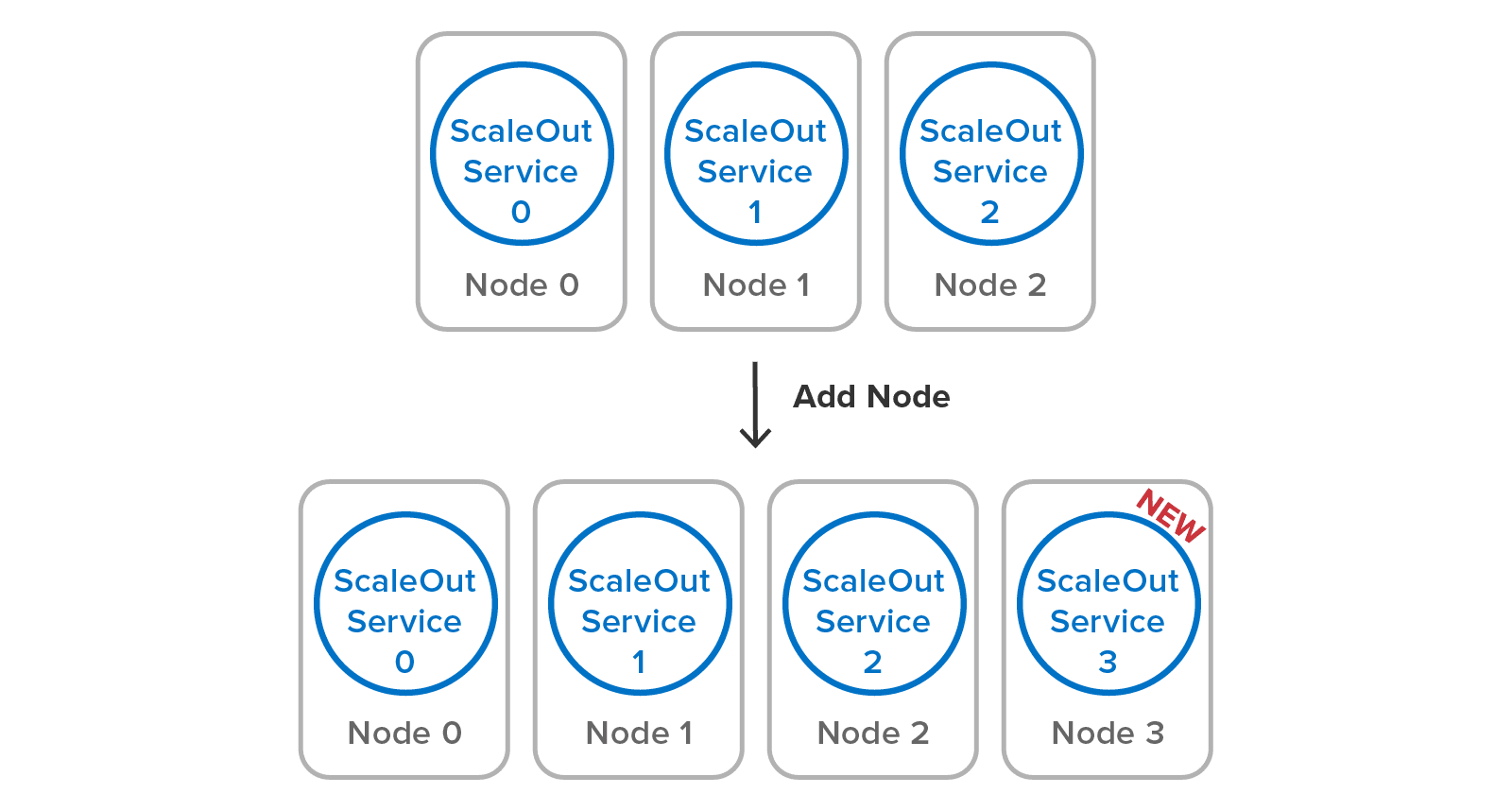

The ScaleOut Digital Twin Streaming Service uses scalable, in-memory computing technology to provide the speed and memory capacity needed to run large simulations with many entities. It stores digital twins in memory and automatically distributes them across a cluster of servers that hosts a simulation. At each time step, each server runs the simulation code for a subset of the digital twins and determines the next time step that the simulation needs to run. The streaming service orchestrates the simulation’s progress on the cluster and advances simulation time at a rate selected by the user.

In this manner, the streaming service can harness as many servers as it needs to host a large simulation and run it with maximum throughput. As illustrated below, the service’s in-memory computing platform can add new servers while a simulation is running, and it can transparently handle server outages should they occur. Users need only focus on building digital twin models and deploying them to the streaming service.

The Next Generation of Simulation with Digital Twins

Digital twins have historically been employed as a tool for simulating increasingly detailed behavior of a complex physical entity, like a jet engine. The ScaleOut Digital Twin Streaming Service takes digital twins in a new direction: simulation of large systems. Its highly scalable, in-memory computing architecture enables it to easily simulate many thousands of entities and their interactions. This provides a powerful new tool for extracting insights about complex systems that today’s managers must operate at peak efficiency. Its analytics and predictive capabilities promise to offer a high return on investment in many industries.

The post Simulate at Scale with Digital Twins appeared first on ScaleOut Software.

]]>The post ScaleOut Software Adds Simulation Capabilities to its Digital Twin Streaming Service appeared first on ScaleOut Software.

]]>BELLEVUE, WASH. — February 21, 2023 — ScaleOut Software today announces new device modeling and simulation capabilities for its ScaleOut Digital Twin Streaming Service . These capabilities extend the service’s ability to perform real-time analytics at scale using digital twins to track devices and other real-world data sources. Users can now simulate the behavior of these data sources using digital twins prior to deployment as a tool to evaluate design choices and improve decision-making. This offers several benefits to data analysts and managers in a wide range of industries, including telematics, logistics, security, healthcare, crime prevention, and financial services.

. These capabilities extend the service’s ability to perform real-time analytics at scale using digital twins to track devices and other real-world data sources. Users can now simulate the behavior of these data sources using digital twins prior to deployment as a tool to evaluate design choices and improve decision-making. This offers several benefits to data analysts and managers in a wide range of industries, including telematics, logistics, security, healthcare, crime prevention, and financial services.

Going beyond product lifecycle management (PLM), which focuses on improving product design, ScaleOut Software’s new simulation capabilities using digital twins also can assist in developing real-time analytics applications. The streaming service now enables digital twins to both generate and analyze telemetry. For example, developers can model a fleet of trucks with digital twins that send telemetry to additional digital twins which implement real-time analytics for tracking the fleet, identifying issues, and evaluating performance. The streaming service also can record telemetry from live devices and replay it in simulation to assist in performing offline analytics.

“We are excited to extend the capabilities of our streaming service to harness digital twins for both simulation and real-time analytics,” said Dr. William Bain, ScaleOut Software’s CEO and founder. “By hosting digital twins using our highly scalable, in-memory computing technology, we enable our customers to simulate large systems with complex interactions and gain insights not previously possible.”

Managers can also use ScaleOut Software’s simulation capabilities to model thousands of interacting data sources and predict likely outcomes faster than real time. For example, they can model the effect of delays for an airline’s flight schedule within a multi-hour, forward-looking window to predict and mitigate impacts on passengers caused by weather and equipment issues. This predictive capability can assist in decision-making during real-time operations.

Key Features and Benefits for ScaleOut Software’s Digital Twin Simulations:

- Build Simulations at Scale: Now developers can construct simulations that model thousands of devices and other data sources. Fast, in-memory computing ensures high throughput and seamless scaling during simulations with built-in high availability.

- Model with Digital Twins: Developers can harness the popular digital twin model to describe various physical entities using a powerful but easy-to-use, object-oriented representation of each device or data source. The ScaleOut Digital Twin Builder Toolkit

lets developers construct digital twin models in both Java and C#.

lets developers construct digital twin models in both Java and C#. - Combine Modeling and Analytics: The ScaleOut Digital Twin Streaming Service now helps managers use digital twins to both analyze telemetry from real-world data sources and to model those data sources. This simplifies the creation of workload generators for evaluating real-time analytics. The platform also allows a single digital twin instance to both simulate a data source’s behavior and analyze its telemetry.

- Capture and Replay Live Telemetry: The streaming service can capture telemetry from live data sources and replay it in simulation. This capability streamlines workload generation for simulations and enables offline analysis of live scenarios.

- Make Predictions with Simulation: The streaming service can configure simulations to run faster than real-time. This aids decision-making for operational managers by enabling simulations to predict future events based on parameterizable scenarios.

For more information, please visit www.scaleoutsoftware.com and follow @ScaleOut_Inc on Twitter.

Additional Resources:

- ScaleOut Digital Twin simulations blog post

- ScaleOut Digital Twin Streaming Service product page

About ScaleOut Software

Founded in 2003, ScaleOut Software develops leading-edge software that delivers scalable, highly available, in-memory computing and streaming analytics technologies to a wide range of industries. ScaleOut Software’s in-memory computing platform enables operational intelligence by storing, updating, and analyzing fast-changing, live data so that businesses can capture perishable opportunities before the moment is lost. It has offices in Bellevue, Washington and Beaverton, Oregon.

Media Contact

Brendan Hughes

RH Strategic for ScaleOut Software

ScaleOutPR@rhstrategic.com

206-264-0246

The post ScaleOut Software Adds Simulation Capabilities to its Digital Twin Streaming Service appeared first on ScaleOut Software.

]]>The post Steve Smith Review: Simplify Redis Clustering with ScaleOut IMDB appeared first on ScaleOut Software.

]]>

Check out the blog post and video from distinguished software architect and .NET guru Steve “ardalis” Smith on the challenges of scaling single-server Redis and how ScaleOut In-Memory Database tackles them with fully automated cluster technology to avoid complex manual configuration steps.

tackles them with fully automated cluster technology to avoid complex manual configuration steps.

Steve Smith is a well-known entrepreneur and software developer. He is passionate about building quality software and spreading his knowledge through training workshops, speaking at developer conferences, and sharing his experience on his blog and podcast. Steve Smith has also been recognized as a Microsoft MVP for over ten consecutive years.

The post Steve Smith Review: Simplify Redis Clustering with ScaleOut IMDB appeared first on ScaleOut Software.

]]>The post ScaleOut Software Introduces New Java Client API for Improved Application Development Flexibility appeared first on ScaleOut Software.

]]>BELLEVUE, WASH. — November 9, 2022 — ScaleOut Software today introduced a new Java client API for its distributed caching platform, ScaleOut StateServer, adding important new features and reliability improvements for developing modern, Java-based web applications. Designed with cloud-based deployments and flexibility in mind, the Java client API can be used within any web application and independent of any framework. This means that organizations can easily incorporate the ScaleOut client API within their existing application architectures.

The ScaleOut Java client API enables applications to store and retrieve POJOs (plain old java objects) from multiple ScaleOut in-memory data grids (IMDGs; also called distributed caches) and provides an easy to use, fast, cloud-ready caching API.

“We are pleased to offer developers our new Java client API for increased flexibility,” said Dr. William Bain, ScaleOut Software’s CEO and founder. “By accessing ScaleOut StateServer with this API, organizations can build modern cloud applications in Java while improving connectivity and application performance.”

New features include:

- Simultaneous connections: Enables simultaneous connections from Java clients to multiple caches across availability zones to ensure data availability in cloud environments.

- Built-in support for connection strings: Simplifies connectivity to multiple distributed caches and incorporates DNS support.

- Asynchronous API access: Enhances flexibility in application design and assists in optimizing performance.

Additional Resources:

- Developer blog post on new ScaleOut Java Client API

- ScaleOut Java Client User Guide

- ScaleOut Java Client API Documentation

- Sample applications using ScaleOut Java API on GitHub

For more information, please visit www.scaleoutsoftware.com and follow @ScaleOut_Inc on Twitter.

About ScaleOut Software

Founded in 2003, ScaleOut Software develops leading-edge software that delivers scalable, highly available, in-memory computing and streaming analytics technologies to a wide range of industries. ScaleOut Software’s in-memory computing platform enables operational intelligence by storing, updating, and analyzing fast-changing, live data so that businesses can capture perishable opportunities before the moment is lost. It has offices in Bellevue, Washington and Beaverton, Oregon.

Media Contact

Brendan Hughes

RH Strategic for ScaleOut Software

ScaleOutPR@rhstrategic.com

206-264-0246

The post ScaleOut Software Introduces New Java Client API for Improved Application Development Flexibility appeared first on ScaleOut Software.

]]>The post Introducing a New ScaleOut Java Client API appeared first on ScaleOut Software.

]]>

by Brandon Ripley, Senior Software Engineer

ScaleOut Software introduces a new Java client API for our distributed caching platform, ScaleOut StateServer®, that adds important new features for Java applications. It was designed with cloud-based deployments in mind, enabling clients to access ScaleOut in-memory data grids (IMDGs also called distributed caches) in multiple availability zones. It introduces the use of connection strings with DNS support for connecting Java clients to IMDGs, and it allows multiple, simultaneous connections to different caches. It also includes asynchronous APIs that add flexibility to application development.

You can download the JAR for the client API from ScaleOut’s Maven repository at https://repo.scaleoutsoftware.com. Simply connect your build tool to the repository and reference the API as a dependency to get started. The online User Guide can help you setup a project. Alternatively, you can download the JAR directly from the repo and then host the JAR with your build tool of choice. You can find an API reference here.

Let’s take a brief tour of the new Java APIs and look at an example using Docker for accessing multiple IMDGs.

A Quick Tour of the Java Client

The ScaleOut client API for Java lets client applications store and retrieve POJOs (plain old java objects) from a ScaleOut IMDG and provides an easy to use, fast, cloud-ready caching API. It can be used within any web application and is independent of any framework. This means that you can use the ScaleOut client API within your existing application architecture.

To simplify the developer experience, the API is logically divided into three primary packages:

Client Package

The client package houses the GridConnection class for connecting to a ScaleOut IMDG via a connection string. Each instance of GridConnection maintains a set of TCP connections to a ScaleOut cache and transparently handles retries, host failures, and load balancing.

The client package is also the place to register for event handling. ScaleOut servers can fire events for objects that are expiring and events for backing store operations (that is, read-through, refresh-ahead, write-behind, and erase-behind). The ServiceEvents class is used to register an event handler for events fired by the grid.

Caching Package

The caching package contains the strongly typed Cache<K,V> class that is used for all caching operations to store and retrieve POJOs of type V using a key of type K from a name space within the IMDG. All caching operations return a CacheResponse that details the result of the cache access.

For example, a successful access that adds a value to the cache using:

cache.add(key, value)

returns a CacheResponse with the status ObjectAdded, which can be obtained by calling the CacheResponse.getStatus() method. However, if the cache already contained an object for the key and the access was called again, CacheResponse.getStatus() would return ObjectAlreadyExists. (See the Javadoc for all possible responses.)

Query Package

The query package lets you perform queries to select objects from the IMDG. Queries are constructed using query filters created using the FilterFactory class. A filter can consist of a simple equality predicate, or it can combine multiple predicates to query with finer granularity.

Sample Applications

The following samples show how the ScaleOut Java client API can be used within a microservices architecture to access cached data and process events. The client API make it easy to develop modern web applications.

In these samples we will:

- Write an application that connects to two ScaleOut IMDGs to store and retrieve objects. (The two caches are configured to replicate data to each other using ScaleOut GeoServer®.)

- Write a second application that registers for and handles ScaleOut expiration events.

- Create four dockerfiles: the caching application, the expiration event handling application, and two ScaleOut IMDGs.

- Use the Docker compose command to spawn all four containers and run the two applications.

You can find the full samples, including the dockerfiles, on GitHub. Let’s look at the code for these two applications.

Accessing Multiple IMDGs

The first application’s goal is to verify ScaleOut GeoServer replication between two IMDGs. It first connects to the two IMDGs, creates an instance of Cache(K,V) for each IMDG, and then performs accesses.

The application connects to the grid using the GridConnection.connect() static method to instantiate a GridConnection object for each IMDG (named store1 and store2 here):

GridConnection store1Connection = GridConnection.connect("bootstrapGateways=store1:2721");

GridConnection store2Connection = GridConnection.connect("bootstrapGateways=store2:3721");

The next step is to create an instance of Cache(K,V) for each IMDG. Caches are instantiated with a GridConnection which associates the instance with a specific IMDG. This allows different instances to connect to different IMDGs.

The Java client API uses a builder pattern to instantiate caches. For applications using dependency injection, the immutable cache guarantees that the defaults we set at build time will stay consistent for the lifetime of the app. This is great for large applications with many caches as it guarantees there will be no unexpected modifications.

On the builder we can specify properties for defaults. Here is an example that sets an object timeout of fifteen seconds and a timeout type of Absolute (versus ResetOnUpdate or Sliding). The string “example” specifies the cache’s name space:

Cache<Integer, String> store1Cache = new CacheBuilder<Integer, String>(store1Connection, "example", Integer.class)

.objectTimeout(Duration.ofSeconds(15))

.timeoutType(TimeoutType.Absolute)

.build();

The Cache(K,V) class has multiple signatures for storing and retrieving objects from the IMDG. These signatures follow traditional Java naming semantics for distributed caching. For example, the add(key,value) method assumes that no key/value object mapping exists in the cache, whereas update(key,value) assumes than a key/value mapping exists in the cache.

This application uses the add method to insert an item into store1Cache and then checks the response for success. Here’s a code sample that adds two items to the cache:

CacheResponse<String, String> addResponse = store1Cache.add(“MyKey”, "SomeValue");

if(addResponse.getStatus() != RequestStatus.ObjectAdded)

System.out.println("Unexpected request status " + response.getStatus());

addResponse = store1Cache.add(“MyFavoriteKey”, "MyFavoriteValue");

if(addResponse.getStatus() != RequestStatus.ObjectAdded)

System.out.println("Unexpected request status " + response.getStatus());

The application’s goal is to verify that ScaleOut GeoServer replicates stored objects from the store1 IMDG to store2. It creates an instance of Cache(K,V) for the same namespace on store2 and then attempts to retrieve the object with the read API:

CacheResponse<String, String> readResponse = store2Cache.read(“Key”);

if(readResponse.getStatus() != RequestStatus.ObjectAdded)

System.out.println("Unexpected request status " + response.getStatus());

Registering for Events

This sample application demonstrates how an application can have fine grain control over which objects will be removed from the IMDG after a time interval elapses. With the object timeout and timeout-type properties established, objects added to the IMDG will be subject to expiration. When an object expires, the ScaleOut grid will fire an expiration event.

Our application can register to handle expiration events by supplying an instance of Cache(K,V) and an appropriate lambda (or implementing class) to the ServiceEvents static method. The following code removes all objects other than a cache entry mapping with the key, “MyFavoriteKey”:

ServiceEvents.setExpirationHandler(cache, new CacheEntryExpirationHandler<Integer, String>() {

@Override

public CacheEntryDisposition handleExpirationEvent(Cache<Integer, String> cache, String key) {

System.out.println("ObjectExpired: " + key);

if(key.compareTo(“MyFavoriteKey”) == 0)

return CacheEntryDisposition.Save;

return CacheEntryDisposition.Remove;

}});

Running the Applications

We’ve created code snippets for connecting to a ScaleOut grid, creating a cache, and registering for ScaleOut expiration events. We can put all these pieces together to create the two applications with two Java classes called CacheRunner and CacheExpirationListener.

CacheRunner connects to two ScaleOut IMDGs that are setup for push replication using ScaleOut GeoServer. (This is handled by the infrastructure via the dockerfiles and not done in code.) It creates an instance of Cache(K,V) associated with one of the IMDG (called store1) that has a very small absolute timeout for each object and another instance for the other IMDG (called store2). It stores an object in store1 and then retrieves it from store2 to verify that the object was pushed from one IMDG to the other.

Here is the code for CacheRunner:

package com.scaleout.caching.sample;

import com.scaleout.client.GridConnectException;

import com.scaleout.client.GridConnection;

import com.scaleout.client.caching.*;

import java.time.Duration;

public class CacheRunner {

public static void main(String[] args) throws CacheException, GridConnectException {

System.out.println("Connecting to store 1...");

GridConnection store1Connection = GridConnection.connect("bootstrapGateways=store1:2721");

System.out.println("Connecting to store 2...");

GridConnection store2Connection = GridConnection.connect("bootstrapGateways=store2:3721");

Cache<String, String> store1Cache = new CacheBuilder<String, String>(store1Connection, "sample", String.class)

.geoServerPushPolicy(GeoServerPushPolicy.AllowReplication)

.objectTimeout(Duration.ofSeconds(15))

.objectTimeoutType(TimeoutType.Absolute)

.build();

Cache<String, String> store2Cache = new CacheBuilder<String, String>(store2Connection, "sample", String.class)

.build();

System.out.println("Adding object to cache in store 1!");

CacheResponse<String, String> addResponse = store1Cache.add("MyKey", "MyValue");

System.out.println("Object " + ((addResponse.getStatus() == RequestStatus.ObjectAdded ? "added" : "not added."))

+ " to cache in store 1.");

addResponse = store1Cache.add("MyFavoriteKey", "MyFavoriteValue");

System.out.println("Object " + ((addResponse.getStatus() == RequestStatus.ObjectAdded ? "added" : "not added."))

+ " to cache in store 1.");

System.out.println("Reading object from cache in store 2!");

CacheResponse<String,String> readResponse = store2Cache.read("foo");

System.out.println("Object " + ((readResponse.getStatus() == RequestStatus.ObjectRetrieved ?

"retrieved" : "not retrieved.")) + " from cache in store 2.");

}

}

CacheExpirationListener connects to one ScaleOut IMDG, create an instance of Cache(K,V), and registers for expiration events. Here is its code:

package com.scaleout.caching.sample;

import com.scaleout.client.GridConnectException;

import com.scaleout.client.GridConnection;

import com.scaleout.client.ServiceEvents;

import com.scaleout.client.ServiceEventsException;

import com.scaleout.client.caching.*;

import java.io.IOException;

import java.time.Duration;

import java.util.concurrent.CountDownLatch;

public class ExpirationListener {

public static void main(String[] args) throws ServiceEventsException, IOException, InterruptedException,

GridConnectException {

GridConnection store1Connection = GridConnection.connect("bootstrapGateways=store1:2721");

Cache<String, String> store1Cache = new CacheBuilder<String, String>(store1Connection, "sample", String.class)

.geoServerPushPolicy(GeoServerPushPolicy.AllowReplication)

.objectTimeout(Duration.ofSeconds(15))

.objectTimeoutType(TimeoutType.Absolute)

.build();

ServiceEvents.setExpirationHandler(store1Cache, new CacheEntryExpirationHandler<String, String>() {

@Override

public CacheEntryDisposition handleExpirationEvent(Cache<String, String> cache, String key) {

CacheEntryDisposition disposition = CacheEntryDisposition.NotHandled;

System.out.printf("Object (%s) expired\n", key);

if(key.equals("MyFavoriteKey"))

disposition = CacheEntryDisposition.Save;

else disposition = CacheEntryDisposition.Remove;

return disposition;

}

});

}

}

To run these applications, we’ll use the Docker compose command to build Docker containers. We will have 4 services, each defined in their own respective dockerfile, which are all provided and available on the GitHub repo. You can clone the repository and then run the deployment with the following command:

docker-compose -f ./docker-compose.yml up -d –build

Here is the expected output for CacheRunner:

Adding object to cache in store 1! Object added to cache in store 1. Object added to cache in store 1. Reading object from cache in store 2! Object retrieved. from cache in store 2.

Here is the output for ExpirationListener:

Connected to store1! Object (MyFavoriteKey) expired Object (MyKey) expired

Summing Up

The new ScaleOut client API for Java adds important features that support the development of modern web and cloud applications. Built-in support for connection strings enables simultaneous connections to multiple IMDGs using DNS entries. Full support for asynchronous accesses also assists in application development. Let us know what you think with your comments on our community forum.

The post Introducing a New ScaleOut Java Client API appeared first on ScaleOut Software.

]]>The post New Video: Automated Clustering for Redis appeared first on ScaleOut Software.

]]> (IMDB) automates Redis clustering so that you can add and remove servers with a single command — starting with just two servers. ScaleOut IMDB also ensures that the cluster implements strong consistency to keep stored data safe and IT costs low.

(IMDB) automates Redis clustering so that you can add and remove servers with a single command — starting with just two servers. ScaleOut IMDB also ensures that the cluster implements strong consistency to keep stored data safe and IT costs low.

Subscribe to ScaleOut’s YouTube channel to see the latest explainer videos, interviews, tech talks and more!

The post New Video: Automated Clustering for Redis appeared first on ScaleOut Software.

]]>The post New Digital Twin Features for Real-World Applications appeared first on ScaleOut Software.

]]>

Using Digital Twins for Streaming Analytics

In the two years since we initially released the ScaleOut Digital Twin Streaming Service , we have applied the digital twin model to numerous use cases, including security alerting, telematics, contact tracing, logistics, device tracking, industrial sensor monitoring, cloned license plate detection, and airline system tracking. Constructing applications for these use cases has demonstrated the power of the digital twin model in creating streaming analytics that track large numbers of data sources.

, we have applied the digital twin model to numerous use cases, including security alerting, telematics, contact tracing, logistics, device tracking, industrial sensor monitoring, cloned license plate detection, and airline system tracking. Constructing applications for these use cases has demonstrated the power of the digital twin model in creating streaming analytics that track large numbers of data sources.

The process of building digital twin applications allowed us to surface both the strengths and shortcomings of our APIs. This has led to a series of new features which enhance the core platform. For example, we created a rules engine for implementing the logic within a digital twin so that new models can be created without the need for programming expertise. We then added machine learning to digital twin models using Microsoft’s ML.NET library. This enables digital twins to look for patterns in telemetry that are difficult to define with code. More recently, we integrated our digital twin model with Microsoft’s Azure Digital Twins to accelerate real-time processing using our in-memory computing technology while providing new visualization and persistence capabilities for digital twins.

With the newly announced version 2, we are adding important new capabilities for real-time analytics to our digital twin APIs. Let’s take a look at some of these new features.

New Support for .NET 6

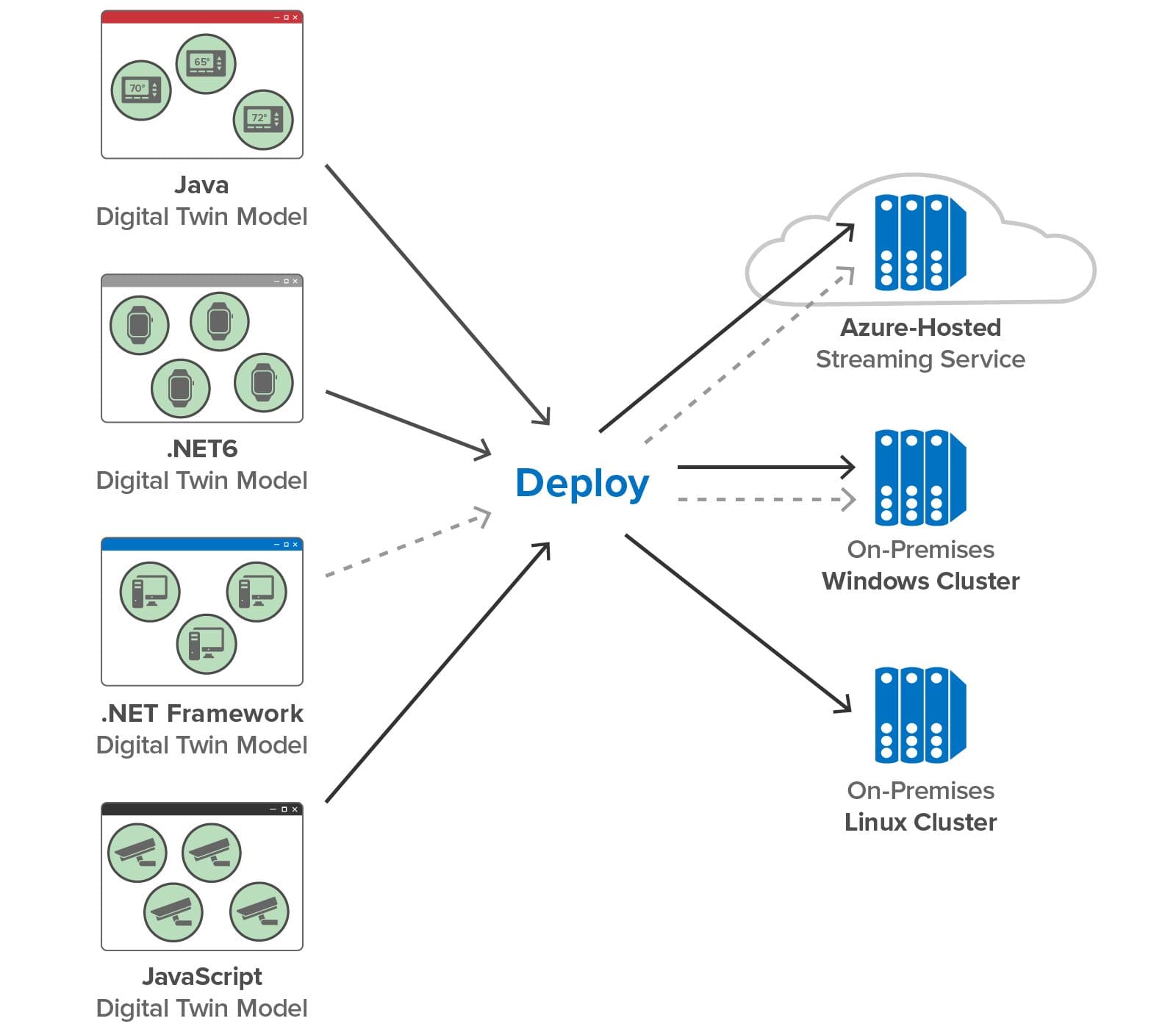

Version 2 expands the target platforms for C#-based digital twin models by supporting .NET 6. With our goal to make the ScaleOut Digital Twin Streaming Service’s feature set and visualization tools uniformly available in the cloud and on-premises, we recognized that we needed to move beyond support for .NET Framework, which can only be deployed on Windows. By adding .NET 6, we can take advantage of its portability across both Windows and Linux. Now C#, Java, JavaScript, and rules-based digital twin models can be deployed on all platforms:

(As illustrated with the dotted lines above, we continue to support .NET Framework on Windows and in the Azure cloud.)

To take maximum advantage of .NET 6, we also re-implemented our Azure cloud service and key portions of the back-end infrastructure in .NET 6. This provides better performance and flexibility for future upgrades.

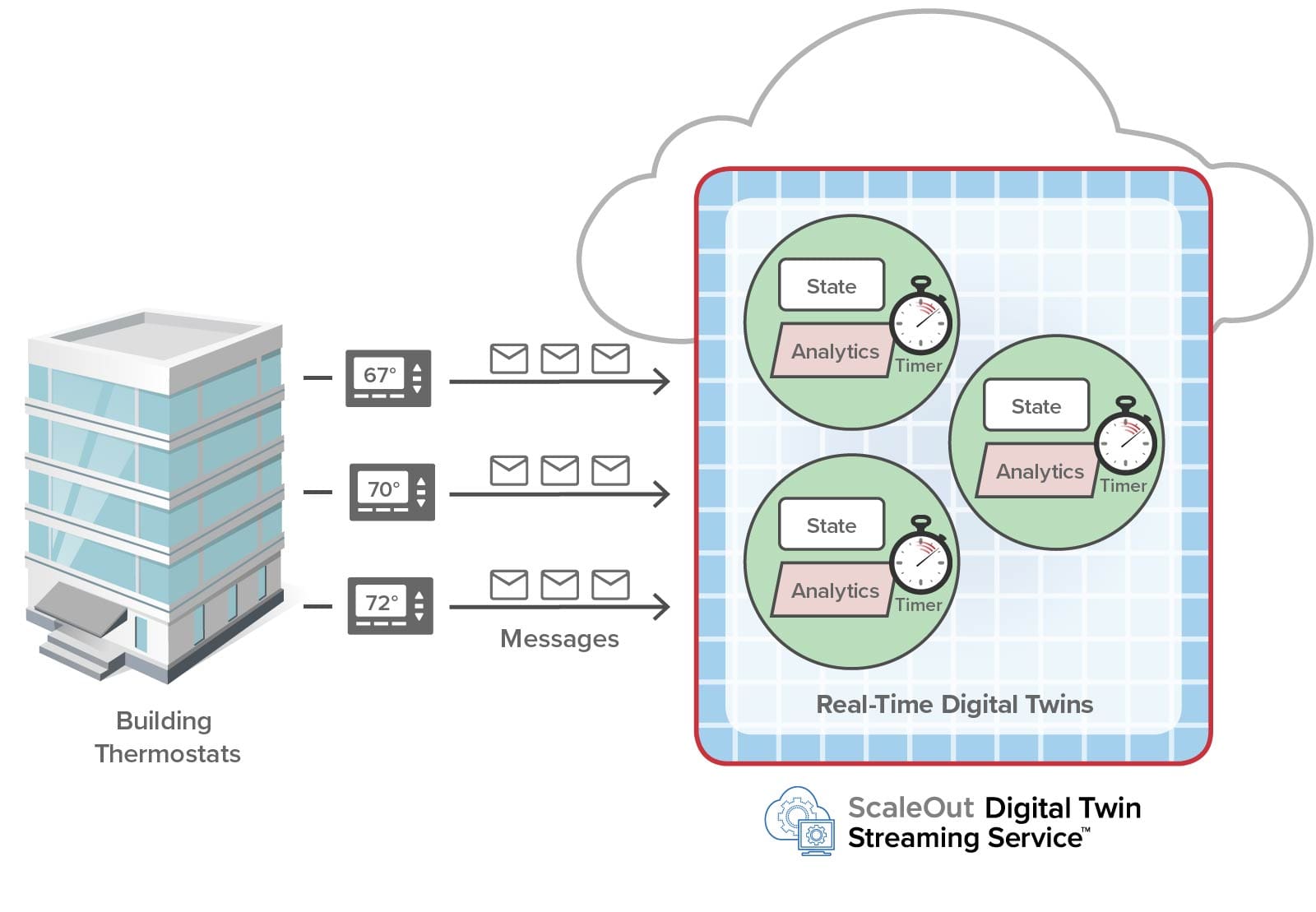

Digital Twin Timers

Using our APIs, digital twins can run analytics code to process incoming messages from their corresponding data sources. In developing a proof-of-concept application for an industrial safety application, we learned that they also need to be able to create timers and run code when the timers expire. This enables digital twins to detect when their data sources fail or become erratic in sending messages.

For example, consider a digital application that tracks periodic telemetry from a collection of building thermostats. Each digital twin looks for abnormal temperature excursions that indicate the need to alert personnel. In addition, a digital twin must determine if its thermostat has failed and is no longer sending periodic temperature readings. By setting a timer and restarting it after each message is received, the digital twin can signal an alert if excessive time elapses between incoming messages:

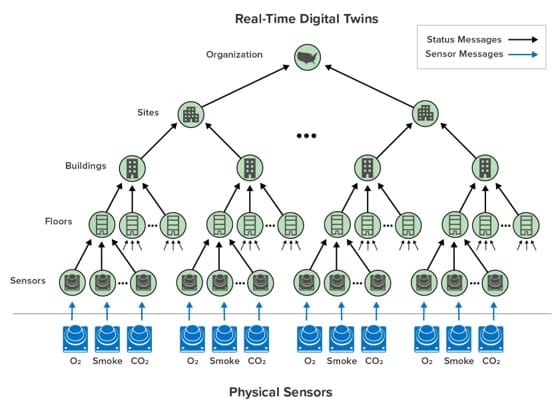

In the actual industrial safety application we built, buildings throughout a site had numerous smoke and gas sensors. Digital twins for the sensors incorporated timers to detect failed sensors. As shown below, they periodically forwarded their status to a hierarchy of digital twins arranged as shown below from the lowest level upwards. The digital twins represented floors within buildings, buildings within a site, sites within the organization, and the overall organization itself. At each level, status information was aggregated to gives personnel immediate information about where problems were occurring. The role of timers was critical in maintaining a complete picture of the organization’s status.

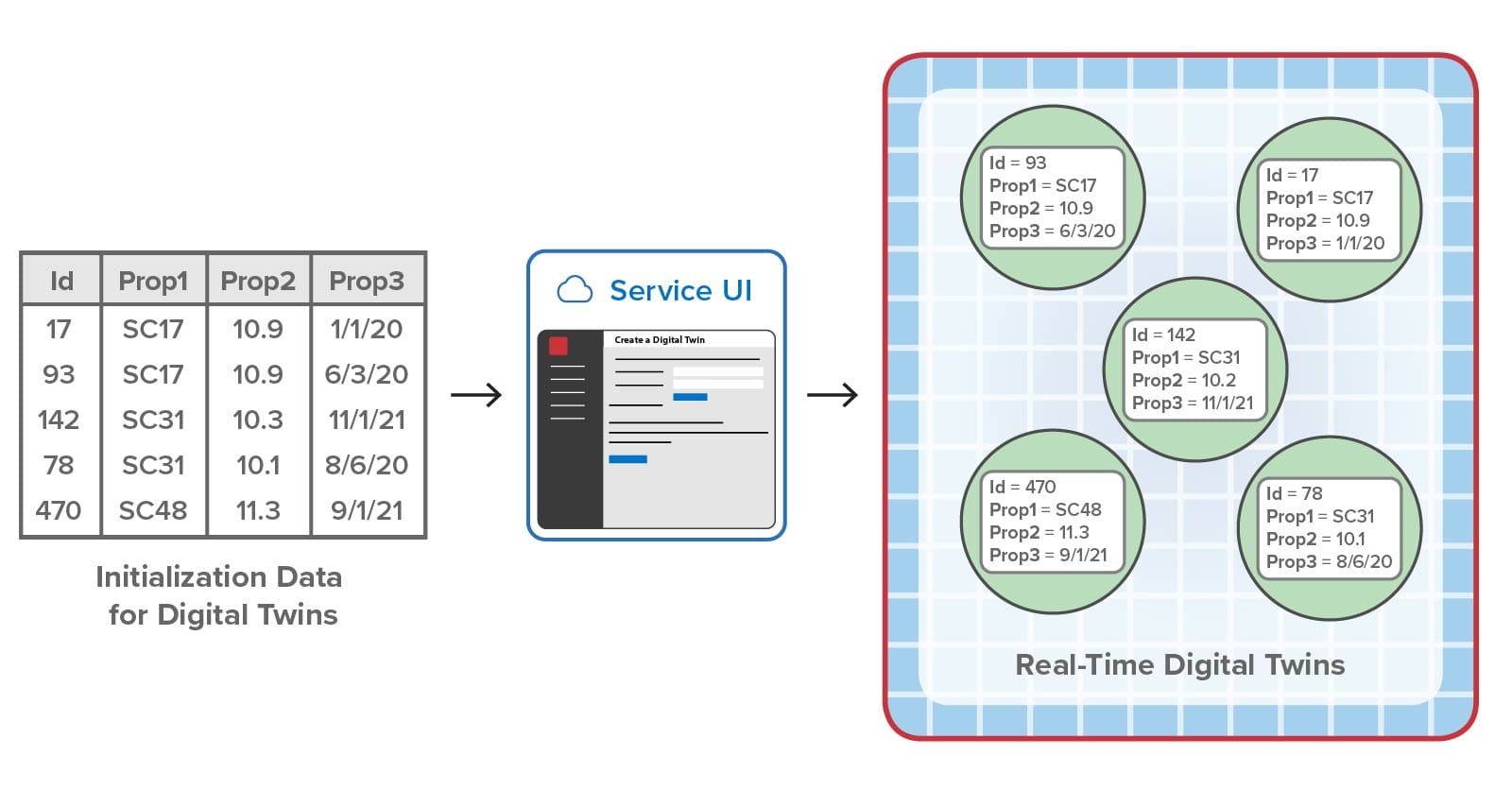

Aggregate Initialization

When we first implemented our digital twin platform, we designed it to automatically create a digital twin instance when the first message from an unknown data source arrives. (The platform determines which type of digital twin to create from the message’s contents.) This technique simplifies deployment by avoiding the need to explicitly create digital twin instances. The user simply develops and deploys a digital twin model, for example, for a gas sensor, and the platform creates a digital twin for each sensor that sends a message to the platform.